
What Is ATDD in Software Testing? A Complete Guide

Learn what ATDD is, how it works, key principles, tools, best practices, real-world use cases, and its future with AI.

Deboshree

January 11, 2026

Software projects often fail or slip because stakeholders misunderstand requirements or hold differing expectations. Acceptance Test-Driven Development (ATDD) addresses this challenge by promoting collaboration early in the project, helping teams clarify what the software is supposed to achieve and reducing rework later.

Overview

What Is the Role of ATDD?

ATDD acts as a shared decision-making mechanism that turns business expectations into verifiable behaviors. It reduces guesswork by ensuring every feature is built and validated against agreed outcomes, not assumptions.

How Does ATDD Work?

ATDD aligns business expectations with development and testing through collaboration, executable acceptance tests, and continuous validation.

- Collaborate to Understand Requirements: Product owners, developers, and testers align early to clarify expectations, uncover ambiguities, and establish shared understanding before implementation begins.

- Define Acceptance Criteria: Clear, measurable conditions are documented in business-friendly language, ensuring everyone agrees on success before development work starts.

- Write Acceptance Tests: Executable acceptance tests are created from defined criteria, translating business expectations into verifiable behaviors the system must satisfy.

- Develop the Feature: Developers implement functionality guided by acceptance tests, ensuring code behavior directly reflects agreed business outcomes.

- Run Tests and Validate Behavior: Acceptance tests are executed continuously to confirm functionality meets expectations and provide fast feedback during development cycles.

- Refine and Iterate: Failures trigger collaborative discussion, refinements, and updates until all acceptance conditions are consistently satisfied.

- Maintain Living Documentation: Acceptance tests double as continuously updated documentation, preserving system behavior knowledge throughout the software lifecycle.

Which Tools Are Commonly Used in ATDD Workflows?

ATDD relies on tools that turn business expectations into executable tests while supporting collaboration, automation, and continuous validation. These frameworks help teams maintain shared understanding and ensure features meet agreed acceptance criteria across environments.

- TestMu AI: Executes acceptance tests across real browsers and operating systems, validating business scenarios at scale within continuous delivery pipelines.

- Cucumber: Enables teams to express acceptance criteria in readable Gherkin scenarios that execute automatically against application behavior.

- Reqnroll: Supports .NET teams by converting business-readable Gherkin specifications into automated acceptance tests within modern development environments.

- FitNesse: Provides a collaborative wiki interface where acceptance tests act as executable documentation aligned with evolving system requirements.

- Robot Framework: Uses keyword-driven automation to create readable, data-driven acceptance tests that integrate smoothly with continuous integration workflows.

- JBehave: Allows Java teams to define narrative-style acceptance scenarios that validate system behavior against agreed business stories.

- Gauge: Offers a lightweight framework for writing clear acceptance specifications that execute across multiple languages and CI pipelines.

- Concordion: Combines HTML documentation with automated acceptance checks, keeping specifications accurate and synchronized with application behavior.

How Does ATDD Relate to TDD and BDD?

ATDD, TDD, and BDD are related practices that aim to build high-quality software by defining expectations early. Each approach differs in focus and level of abstraction within the software development process.

- ATDD vs TDD: Test-Driven Development (TDD) focuses on code-level correctness. Developers write unit tests before implementing code to ensure individual components work as expected. ATDD operates at a higher level, defining acceptance tests based on business requirements to describe what the system should do from a user perspective.

- ATDD vs BDD: Behavior-Driven Development (BDD) grew out of TDD and overlaps heavily with ATDD. It emphasizes describing system behavior in a shared, business-readable language such as Gherkin. While BDD focuses on behavioral clarity, ATDD emphasizes validating acceptance criteria and business outcomes.

What Is ATDD?

ATDD is a software development approach used to clearly define what the system should do before coding starts. It focuses on writing acceptance tests based on business requirements so that developers, testers, and stakeholders share a common understanding of expected behavior.

This agile development approach can be viewed as a proactive form of User Acceptance Testing (UAT). While traditional UAT is typically performed at the end of development to verify that the software meets user needs, ATDD defines acceptance criteria upfront and uses them to guide development.

As a result, the software aligns with user and business expectations from the beginning, reducing misunderstandings and minimizing rework.


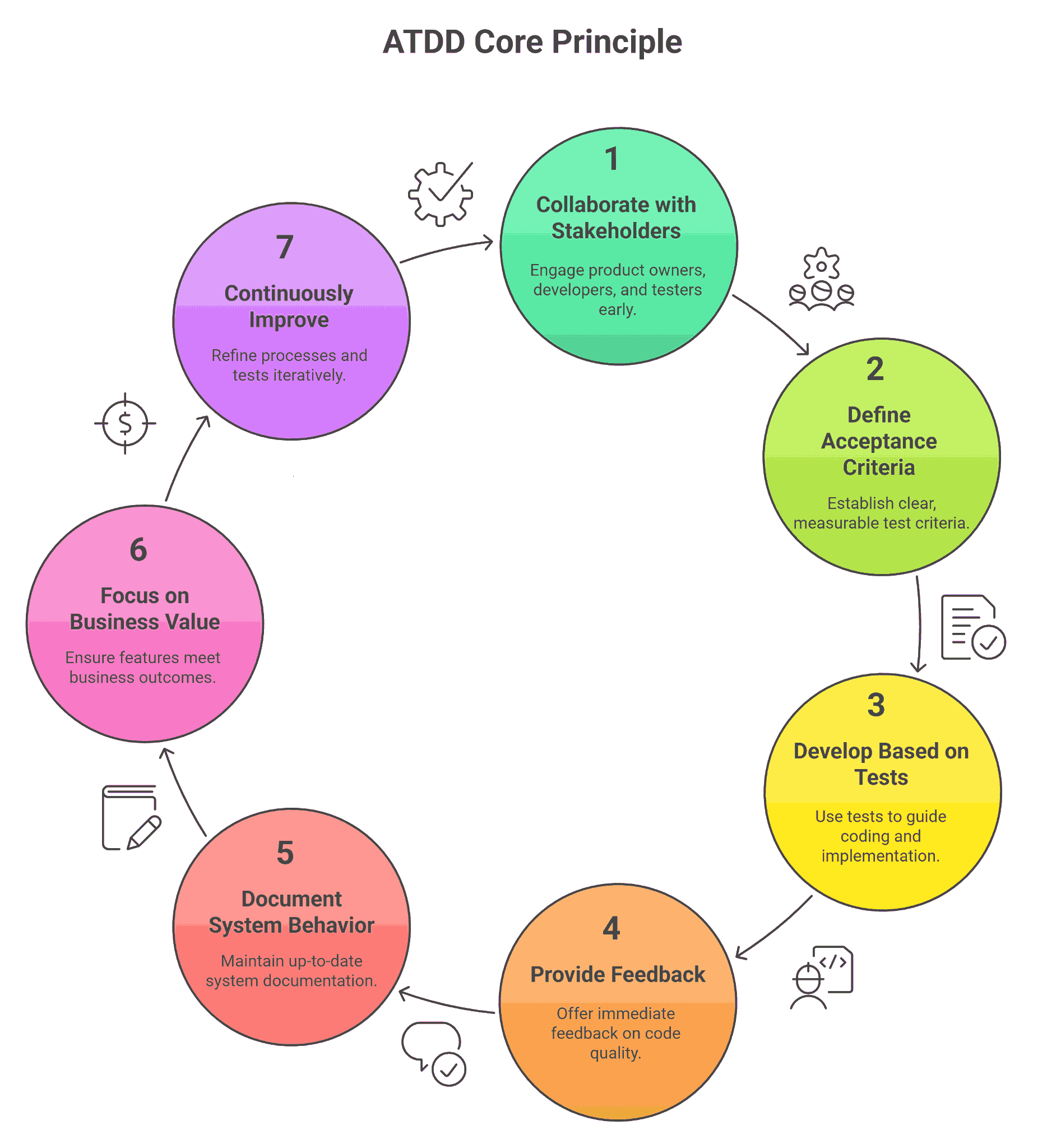

What Are the Core Principles of ATDD?

ATDD is built on a set of guiding principles that ensure software meets business requirements, improves collaboration, and maintains high quality. Understanding these core principles helps teams implement ATDD effectively and consistently.

Key principles:

- Collaboration Between Key Stakeholders: ATDD emphasizes early and continuous collaboration between product owners, developers, and testers, collectively known as the “Three Amigos.”

  - Product Owner: The Product Owner represents the customer or business perspective, defining goals and expected outcomes.

  - Developer: The Developer provides insight into technical feasibility and guides how the feature can be implemented.

  - Tester/QA Engineer: The Tester/QA Engineer ensures that acceptance criteria are testable and verifies that the feature works as intended.

- Define Clear and Measurable Acceptance Criteria: Acceptance tests act as precise definitions of done. Writing criteria in plain, business-readable language ensures that tests reflect intended behavior rather than technical implementation. Structured formats such as Gherkin help formalize these scenarios.

- Tests Drive Development: ATDD flips the traditional workflow by making acceptance tests the starting point for development. Developers use these tests to guide coding, ensuring that every feature meets the agreed-upon requirements before it is considered complete.

- Early and Continuous Feedback: Automated acceptance tests provide immediate feedback on whether new code meets business requirements. Integrating tests into CI/CD pipelines accelerates detection of defects, reduces rework, and improves overall quality.

- Living Documentation: Acceptance tests serve as living documentation of the system’s behavior. They remain current as requirements evolve, bridging the gap between business intent and technical implementation. This makes it easier for new team members and stakeholders to understand the system.

- Focus on Business Value: ATDD prioritizes validating intent over technical correctness. Tests ensure that features deliver the expected business outcomes, keeping development aligned with organizational goals.

- Continuous Improvement: ATDD is not a one-time process. Teams iteratively refine acceptance tests, scenarios, and workflows based on feedback, evolving requirements, and new insights to maintain relevance and effectiveness.

By discussing features and acceptance criteria together before development starts, the Three Amigos achieve a shared understanding of the system’s expected behavior, reducing misunderstandings and rework.
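To make "clear and measurable" concrete, here is a minimal sketch in Python of one criterion captured in Given/When/Then form. The `AcceptanceCriterion` class and the free-shipping rule are hypothetical and exist only for illustration; most teams would write the scenario directly in Gherkin.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One business-readable criterion in Given/When/Then form (hypothetical structure)."""
    given: str
    when: str
    then: str

    def as_scenario(self, title: str) -> str:
        # Render the criterion as Gherkin-style text that stakeholders can review.
        return "\n".join([
            f"Scenario: {title}",
            f"  Given {self.given}",
            f"  When {self.when}",
            f"  Then {self.then}",
        ])


# Hypothetical business rule agreed by the Three Amigos.
free_shipping = AcceptanceCriterion(
    given="a cart with items totalling 60.00 EUR",
    when="the customer proceeds to checkout",
    then="the shipping cost is 0.00 EUR",
)

print(free_shipping.as_scenario("Free shipping above 50 EUR"))
```

The criterion names concrete amounts, so anyone in the room can tell whether it passed; a phrase like "shipping should be cheap for big orders" would not be testable.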

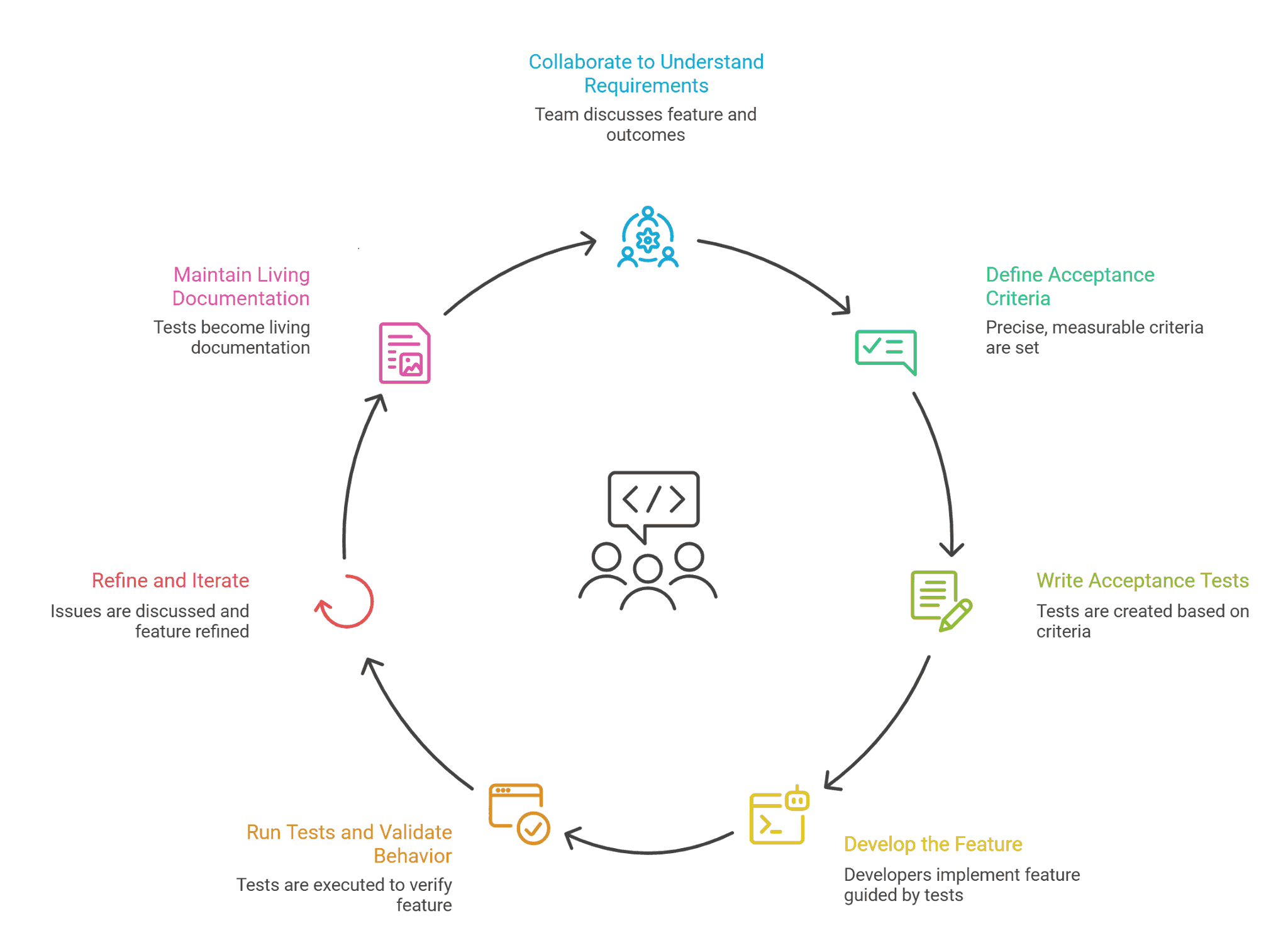

How Does the ATDD Process Work?

The ATDD process involves several key steps that guide teams from understanding requirements to delivering tested, high-quality software.

- Collaborate to Understand Requirements: The product owner, developers, and testers, the “Three Amigos,” meet to discuss the feature and its desired outcomes. This early collaboration ensures that everyone has a clear understanding of what the system should achieve. In modern AI-driven workflows, AI tools can assist by suggesting potential test scenarios or highlighting ambiguous requirements, but humans remain responsible for business intent.

- Define Acceptance Criteria: During collaboration, the team defines precise, measurable acceptance criteria in business-readable language. These criteria act as a formal definition of “done” for the feature. A format like Gherkin can help structure scenarios so they are both readable for non-technical stakeholders and executable by automation frameworks.

- Write Acceptance Tests: Once the criteria are defined, the team writes acceptance tests based on them. These tests can be manually authored or generated using AI-assisted tools. The goal is to create executable tests that clearly validate the expected behavior of the system.

- Develop the Feature: Developers implement the feature guided by the acceptance tests. By focusing on passing these tests, they ensure that the code aligns with the business requirements. In AI-assisted environments, AI can help generate code or even suggest fixes, but the tests remain the authority on correct behavior.

- Run Tests and Validate Behavior: Acceptance tests are executed to verify that the feature meets the defined criteria. This step provides immediate feedback on whether the implementation aligns with business expectations. When integrated into CI/CD pipelines, automated acceptance tests accelerate feedback cycles. In addition, AI test automation can adapt to minor code changes through self-healing capabilities, ensuring tests remain stable without compromising behavioral validation.

- Refine and Iterate: If tests fail, the team discusses issues, refines the feature, and updates tests if necessary. This iterative process continues until all acceptance criteria are satisfied. Continuous improvement ensures that the system remains aligned with business goals, even as requirements evolve.

- Maintain Living Documentation: The acceptance tests themselves become living documentation. They describe how the system behaves, provide regression safety, and serve as an auditable record for stakeholders. This ensures that the knowledge captured in ATDD remains valuable throughout the Software Testing Life Cycle (STLC).
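The middle of this process (write the test, develop the feature, run the test) can be sketched in a few lines of Python. The shipping rule, the amounts, and the names `check_free_shipping_threshold` and `shipping_cost` are illustrative assumptions, not part of any particular framework.

```python
from decimal import Decimal


# Write Acceptance Tests: the check is written first, straight from the agreed
# criterion "orders of 50.00 or more ship free; smaller orders pay a flat 4.99".
def check_free_shipping_threshold(shipping_cost):
    assert shipping_cost(Decimal("60.00")) == Decimal("0.00")
    assert shipping_cost(Decimal("50.00")) == Decimal("0.00")
    assert shipping_cost(Decimal("49.99")) == Decimal("4.99")


# Develop the Feature: the implementation is written only after the test exists.
def shipping_cost(cart_total: Decimal) -> Decimal:
    free_threshold = Decimal("50.00")
    flat_rate = Decimal("4.99")
    return Decimal("0.00") if cart_total >= free_threshold else flat_rate


# Run Tests and Validate Behavior: an AssertionError here means the feature
# does not yet meet the agreed criterion.
check_free_shipping_threshold(shipping_cost)
print("acceptance test passed")
```

Because the test takes the implementation as an argument, the same check can be pointed at a revised version during the refine-and-iterate step without being rewritten.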

Which Tools and Frameworks Are Used for ATDD?

Acceptance Test-Driven Development requires tools that facilitate collaboration, test automation, and living documentation. These acceptance testing tools allow teams to define acceptance criteria clearly, automate verification, and ensure that implemented features meet business expectations.

Key tools and frameworks include:

TestMu AI

TestMu AI is a cross-browser testing platform that allows you to execute automated acceptance tests at scale across 3000+ browsers and OS combinations. In ATDD workflows, TestMu AI ensures that executable acceptance criteria are validated not just functionally, but also across the environments users actually experience, strengthening confidence in business readiness.

How it supports ATDD:

- Acceptance Test Execution at Scale: Runs acceptance tests created with ATDD frameworks such as Cucumber, Robot Framework, Gauge, or Reqnroll across real-world browser and device combinations.

- Cross-Browser and Cross-Platform Validation: Ensures that features validated through acceptance criteria behave consistently across environments that users actually experience.

- Support for AI Test Automation: Complements ATDD workflows by supporting AI-driven test orchestration and stability, helping teams maintain reliable acceptance validation as applications evolve.

- CI/CD Integration: Seamlessly integrates with CI/CD pipelines to automatically execute acceptance tests on every build, providing fast and continuous feedback.

Cucumber

Cucumber is a widely used framework that allows writing plain-language acceptance tests executable across multiple platforms.

How it supports ATDD:

- Readable Scenarios: Tests written in Gherkin ensure both technical and non-technical stakeholders understand expected behavior.

- Executable Acceptance Tests: Automates validation of system behavior against business requirements.

- Collaboration: Encourages early team discussion and shared understanding of features.

- Integration with Automation Frameworks: Works with tools like Selenium or Appium for end-to-end validation.
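What makes a readable scenario executable is a set of step definitions matched against each line of text. The following is a hand-rolled illustration of that idea in plain Python. It is not Cucumber's API; `step`, `run_scenario`, and the cart example are hypothetical names used only to show the mechanism.

```python
import re

STEPS = []  # (compiled pattern, handler) pairs


def step(pattern):
    """Register a handler for scenario lines matching the given regex."""
    def decorator(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return decorator


@step(r"a cart containing (\d+) items?")
def given_cart(ctx, count):
    ctx["items"] = int(count)


@step(r"the customer removes (\d+) items?")
def when_remove(ctx, count):
    ctx["items"] -= int(count)


@step(r"the cart shows (\d+) items?")
def then_cart_shows(ctx, count):
    assert ctx["items"] == int(count), f"expected {count}, got {ctx['items']}"


def run_scenario(text):
    """Execute each Given/When/Then line against the first matching step."""
    ctx = {}
    for line in text.strip().splitlines():
        # Drop the leading keyword (Given/When/Then/And) to get the step text.
        body = re.sub(r"^\s*(Given|When|Then|And)\s+", "", line)
        for pattern, handler in STEPS:
            match = pattern.fullmatch(body)
            if match:
                handler(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step definition for: {body!r}")
    return ctx


scenario = """
Given a cart containing 3 items
When the customer removes 1 item
Then the cart shows 2 items
"""
run_scenario(scenario)
```

A line with no matching step raises an error instead of being skipped, so a scenario cannot pass silently while part of it is unimplemented.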


Reqnroll

Reqnroll is a modern .NET framework for writing Gherkin-style acceptance tests that integrate with current .NET projects.

How it supports ATDD:

- Readable Tests: Acceptance criteria are expressed in business language, bridging communication gaps.

- Executable Validation: Automates checking that features meet specified requirements.

- .NET Integration: Works seamlessly with existing .NET tools and frameworks.

- Collaboration: Supports shared understanding between developers, testers, and business stakeholders.

FitNesse

FitNesse is a wiki-based collaborative tool where teams can define acceptance tests and link them to the system under test.

How it supports ATDD:

- Collaborative Test Definition: Teams define acceptance tests together, reducing misunderstandings.

- Executable Tests: Tests serve as living, executable documentation of requirements.

- Early Feedback: Detects requirement issues before development begins.

- Integration: Connects with other test frameworks for automated execution.

Robot Framework

Robot Framework is a keyword-driven automation framework that supports testing across multiple platforms and technologies.

How it supports ATDD:

- Keyword-Driven Testing: Simplifies writing readable acceptance tests.

- Cross-Platform Automation: Validates expected behavior across different environments.

- Data-Driven Testing: Supports testing with various input data efficiently.

- Continuous Validation: Integrates with CI/CD for automated verification of features.
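As a rough illustration of the keyword-driven and data-driven ideas, the sketch below implements a tiny keyword runner in plain Python. It does not use Robot Framework's syntax or API; the keyword names, `run_test`, and the banking example are assumptions made for the example.

```python
# Keywords: small, readable actions implemented once and reused across tests.
def open_account(state, balance):
    state["balance"] = float(balance)


def withdraw(state, amount):
    amount = float(amount)
    if amount > state["balance"]:
        state["error"] = "insufficient funds"
    else:
        state["balance"] -= amount


def balance_should_be(state, expected):
    assert state["balance"] == float(expected), state


KEYWORDS = {
    "Open Account": open_account,
    "Withdraw": withdraw,
    "Balance Should Be": balance_should_be,
}


def run_test(rows):
    """Run a test expressed as rows of (keyword, argument)."""
    state = {}
    for keyword, argument in rows:
        KEYWORDS[keyword](state, argument)
    return state


# Data-driven: the same keyword sequence runs against several input rows
# (opening balance, withdrawal, expected balance).
cases = [("100", "30", "70"), ("100", "100", "0"), ("50", "80", "50")]
for opening, amount, expected in cases:
    run_test([
        ("Open Account", opening),
        ("Withdraw", amount),
        ("Balance Should Be", expected),
    ])
```

The test rows read like the business rule itself, while the Python behind each keyword can change without touching the tests.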

JBehave

JBehave is a Java framework for writing narrative-style executable scenarios as acceptance tests.

How it supports ATDD:

- Readable Scenarios: Stories written in narrative format make requirements clear.

- Executable Tests: Ensures features behave according to agreed business behavior.

- Collaboration: Facilitates shared understanding among developers, testers, and business stakeholders.

- Integration: Works with Java-based automation and testing tools for execution.

Gauge

Gauge is a lightweight, multi-language test automation framework designed for readable acceptance tests.

How it supports ATDD:

- Readable Tests: Scenarios are clear and understandable for all stakeholders.

- CI/CD Integration: Automates acceptance test execution during development pipelines.

- Multi-Language Support: Works across multiple programming languages and environments.

- Continuous Validation: Ensures features meet requirements throughout development.

Concordion

Concordion allows writing executable specifications in HTML that combine documentation and automated tests.

How it supports ATDD:

- Executable Documentation: Tests double as readable system behavior documentation.

- Automated Verification: Ensures features meet defined acceptance criteria.

- Shared Understanding: Keeps requirements clear for both technical and non-technical teams.

- Living Documentation: Always updated as the system evolves.

How Does ATDD Relate to TDD and BDD?

ATDD, TDD, and BDD are all test-first approaches, but they focus on different levels. Test-Driven Development (TDD) ensures code correctness, Behavior-Driven Development (BDD) emphasizes system behavior in business-readable language, and ATDD focuses on meeting business or user acceptance criteria through collaboration.

The table below summarizes the core differences between ATDD, TDD, and BDD, even though all three follow a test-first approach.

| Aspect | ATDD | TDD | BDD |
|---|---|---|---|
| Focus | Business requirements and acceptance | Code correctness and unit functionality | System behavior in business-readable terms |
| Test Level | Acceptance / end-to-end | Unit / component | Acceptance / functional |
| Primary Participants | Product owners, developers, testers | Developers | Developers, testers, business stakeholders |
| Test Written By | Collaborative team | Developers | Collaborative team |
| Language Style | Plain, business-readable | Technical | Business-readable (Gherkin, plain language) |
| Purpose | Ensure software meets business expectations | Ensure code works correctly | Ensure software behaves as expected |
| Definition of Done | All acceptance tests pass | All unit tests pass | All behavioral tests pass |
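The difference in test level is easiest to see side by side. In this sketch the same small codebase has one unit-level test, as a developer practicing TDD might write it, and one acceptance-level test phrased as a business outcome. The `Cart` class, prices, and test names are hypothetical.

```python
def line_total(unit_price_cents, quantity):
    return unit_price_cents * quantity


class Cart:
    def __init__(self):
        self.lines = []

    def add(self, unit_price_cents, quantity):
        self.lines.append((unit_price_cents, quantity))

    def total_cents(self):
        return sum(line_total(price, qty) for price, qty in self.lines)


def test_line_total_multiplies_price_by_quantity():
    """TDD level: one small unit, technical vocabulary."""
    assert line_total(250, 3) == 750


def test_customer_sees_order_total_for_mixed_cart():
    """ATDD level: a business outcome, phrased from the customer's view."""
    # Given a customer with two notebooks and one pen in the cart
    cart = Cart()
    cart.add(unit_price_cents=250, quantity=2)
    cart.add(unit_price_cents=100, quantity=1)
    # When the customer views the order total
    total = cart.total_cents()
    # Then the total is 6.00
    assert total == 600


test_line_total_multiplies_price_by_quantity()
test_customer_sees_order_total_for_mixed_cart()
```

The unit test would survive unchanged if `Cart` were replaced entirely, and the acceptance test would survive if `line_total` were inlined; each protects a different kind of agreement.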

How Is ATDD Being Applied in Real-World Projects in 2025?

Acceptance Test-Driven Development continues to gain traction in modern software development processes, particularly as organizations seek faster delivery cycles, higher software quality, and stronger alignment between business goals and technical implementation.

In 2025, ATDD is being applied across web applications, mobile platforms, and complex enterprise systems, often enhanced with AI tools, large language models, and advanced test automation frameworks.

The following case studies highlight how ATDD was implemented in real-world scenarios in 2025.

ATDD With Large Language Models for Web Applications (2025)

In 2025, a research team conducted an industrial case study on Acceptance Test Generation with Large Language Models. The study examined how large language models (LLMs) can generate acceptance tests to support ATDD workflows in real-world projects.

The approach leveraged two AI-powered tools: AutoUAT, which generates natural language Gherkin acceptance scenarios from user stories, and Test Flow, which converts those scenarios into executable tests using Cypress.

When integrated into a partner company’s web application workflow, testers found 95% of the generated acceptance scenarios helpful, and 92% of the executable tests usable with minimal edits. Additionally, the generated scenarios uncovered previously overlooked test cases, demonstrating the effectiveness of AI-assisted ATDD in improving test quality and coverage.

AI-Powered ATDD for Mobile App Acceptance Testing (2025)

A 2025 industrial case study on Streamlining Acceptance Test Generation for Mobile Applications Through Large Language Models applied an AI-based framework named AToMIC to generate acceptance test artifacts for BMW’s MyBMW mobile application.

The system used specialized LLMs to automatically produce Gherkin test scenarios, Page Object models, and executable UI test scripts from requirements tracked in JIRA, significantly reducing manual effort.

Evaluations on over 170 screens showed that 93.3% of generated Gherkin test cases were syntactically correct, nearly 79% of Page Object models ran without edits, and 100% of the UI tests executed successfully. Practitioners reported large time savings and strong confidence in adopting AI-assisted acceptance test automation.

ATDD Adoption Using Robot Framework in Enterprise Testing (2025)

A 2025 academic research study on Applying Robot Framework for ATDD in Functional Testing of Enterprise Applications analyzed the use of Robot Framework as an ATDD tool in enterprise functional testing.

The study highlighted how integrating automated acceptance testing with ATDD practices helped teams align on requirements early, increase test coverage, and support continuous integration cycles.

By leveraging Robot Framework’s keyword-driven test automation, teams were able to create comprehensive acceptance test suites that simulated real user scenarios for complex enterprise applications, improving overall software quality and reducing defect rates.

What Are Common Pitfalls in ATDD and How to Resolve Them?

ATDD improves collaboration, clarity, and quality, but teams can encounter challenges that reduce its effectiveness. Understanding common pitfalls and how to address them ensures ATDD delivers its full value.

- Lack of Early Collaboration

  - Pitfall: Teams wait until development begins to define acceptance criteria, leading to misaligned expectations and rework.

  - Resolution: Involve product owners, developers, and testers from the start. Hold structured ATDD workshops to define clear acceptance criteria and business scenarios before coding begins.

- Ambiguous or Overly Technical Tests

  - Pitfall: Tests written in technical language or with vague requirements create confusion and reduce communication value.

  - Resolution: Write tests in plain, business-readable language using formats like Gherkin. Ensure scenarios capture intent rather than implementation details.

- Ignoring Test Maintenance

  - Pitfall: Acceptance tests become outdated as requirements evolve, leading to false positives or irrelevant checks.

  - Resolution: Treat tests as living specifications. Regularly review and update them to reflect business changes, especially in AI-driven systems or those using self-healing test automation.

- Overly Large or Complex Scenarios

  - Pitfall: Tests that try to validate multiple behaviors at once are brittle, slow, and hard to understand.

  - Resolution: Break down scenarios into small, focused, independent tests. This improves clarity and maintainability and speeds up feedback.

- Poor Integration with CI/CD Pipelines

  - Pitfall: Running acceptance tests manually or outside CI/CD reduces their effectiveness and slows feedback.

  - Resolution: Automate test execution in continuous integration and delivery pipelines. Combine with AI testing tools to accelerate validation and improve reliability.

- Not Validating Business Intent

  - Pitfall: Tests check technical outputs without confirming alignment with business goals.

  - Resolution: Ensure every acceptance test reflects business objectives and intended outcomes. Use tests to validate intent, not just functional correctness.

- Underestimating Non-Technical Participation

  - Pitfall: Limiting test creation to developers and testers reduces collaboration and shared understanding.

  - Resolution: Empower product owners, analysts, and other stakeholders to contribute to acceptance tests, leveraging low-code/no-code platforms where needed.

- Over-Reliance on Tools Without Oversight

  - Pitfall: Relying solely on AI testing tools, agent-to-agent testing, or self-healing test automation can miss critical business rules or ethical considerations.

  - Resolution: Use AI tools to assist test creation and maintenance, but maintain human oversight for context, intent, and compliance. Ensure every automated scenario aligns with business and ethical requirements.

ATDD Best Practices to Improve Collaboration, Quality, and Automation

ATDD best practices focus on fostering early collaboration between product owners, developers, and testers to clearly define acceptance criteria. They emphasize writing business-readable, executable tests that align with user expectations, ensuring high-quality software. Additionally, integrating these practices with automation and CI/CD pipelines improves efficiency, test coverage, and continuous feedback.

- Collaborate Early and Continuously: Engage product owners, developers, and testers from the start. Use ATDD to ensure everyone shares a common understanding of requirements. In AI-driven environments, include discussions about AI behaviors, expected outputs, and limitations to avoid misaligned assumptions.

- Define Clear, Business-Focused Acceptance Criteria: Write acceptance tests in plain, business-readable language. This ensures tests reflect intent rather than implementation. Use formats like Gherkin to make them understandable for both technical and non-technical stakeholders.

- Automate Tests as Executable Specifications: Convert acceptance criteria into automated tests as early as possible. Treat them as living specifications that guide development and act as regression safeguards. In AI contexts, leverage AI testing tools to generate or maintain these executable tests efficiently.

- Keep Tests Small, Focused, and Independent: Design each test to validate a single behavior. Avoid large, complex scenarios that are hard to maintain or understand. Small, independent tests provide faster feedback and are easier to adapt in environments using self-healing test automation.

- Continuously Validate and Refactor: Update tests regularly to reflect evolving requirements. With AI-generated or AI-assisted code, continuously validate that tests still capture the intended business behavior and adjust as necessary.

- Integrate ATDD into CI/CD Pipelines: Automate the execution of acceptance tests in continuous integration and delivery pipelines. This ensures rapid feedback whenever new code or AI-generated code is introduced, preventing regressions and maintaining alignment with business intent.

- Use Tests as Living Documentation: Treat acceptance tests as the authoritative source of system behavior. They should explain how the system behaves, guide new team members, and serve as an auditable record for compliance and stakeholders.

- Encourage Collaboration Between Humans and AI: In modern ATDD, AI can assist with generating tests, suggesting scenarios, or performing agent-to-agent testing. Ensure humans review AI-suggested tests to validate business intent, ethical considerations, and regulatory compliance.

- Focus on Intent, Not Just Outcomes: Acceptance tests should validate that the system does the right thing, not only that it works technically. This mindset ensures alignment with business goals and helps manage risk in AI-driven or self-healing systems.

- Make ATDD Accessible to Non-Technical Stakeholders: Leverage low-code/no-code platforms and AI testing tools to empower product owners and analysts to contribute directly to test creation. This increases collaboration, reduces bottlenecks, and improves overall software quality.
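The advice to keep tests small, focused, and independent can be sketched as follows. Each test builds its own fresh state and checks a single behavior, so the tests pass in any order. The `Inventory` class and SKU are hypothetical stand-ins for a real system under test.

```python
class Inventory:
    """Tiny in-memory stand-in for the system under test (hypothetical)."""
    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, sku, quantity):
        if self.stock.get(sku, 0) < quantity:
            return False
        self.stock[sku] -= quantity
        return True


def fresh_inventory():
    # Every test gets its own instance, so no test depends on another's leftovers.
    return Inventory({"BOOK-1": 2})


def test_reserving_available_stock_succeeds():
    assert fresh_inventory().reserve("BOOK-1", 1) is True


def test_reserving_more_than_stock_is_refused():
    assert fresh_inventory().reserve("BOOK-1", 3) is False


def test_refused_reservation_leaves_stock_unchanged():
    inventory = fresh_inventory()
    inventory.reserve("BOOK-1", 3)
    assert inventory.stock["BOOK-1"] == 2


# Independent tests can run in any order and still pass.
tests = [
    test_refused_reservation_leaves_stock_unchanged,
    test_reserving_more_than_stock_is_refused,
    test_reserving_available_stock_succeeds,
]
for test in tests:
    test()
```

When one of these fails, its name alone says which behavior broke, which is much harder to tell from a single scenario that reserves, refuses, and checks stock in one pass.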

The Future of ATDD in AI-Driven Software Development

As AI systems increasingly generate production-ready code, Acceptance Test-Driven Development becomes a critical control mechanism for AI-driven software delivery. AI automation excels at producing implementations quickly, but it does not inherently understand business intent. ATDD defines what must work and provides a behavioral boundary within which AI operates.

Acceptance tests function as behavioral contracts that AI-generated code must satisfy. In environments where autonomous agents write, modify, and deploy code, ATDD plays a central role in AI testing by validating system behavior rather than implementation details. This is especially important as agent-to-agent testing becomes common, where one AI agent produces code and another evaluates it against acceptance criteria.

AI-Assisted Creation and Evolution of Acceptance Tests

Generative AI and modern AI testing tools will increasingly support teams in creating, evolving, and maintaining acceptance tests. Business requirements, user stories, and conversations can be converted into executable scenarios that reflect real business behavior. AI automation assists in identifying missing edge cases, generating negative scenarios, and refining acceptance tests as requirements change.

AI testing tools help keep acceptance tests aligned with domain language and business intent. AI self-healing of code is complemented by AI-assisted test maintenance, reducing brittle test failures caused by implementation changes. This significantly reduces boilerplate effort and allows teams to focus less on writing tests and more on validating intent, value, and risk.

From Three Amigos to Three Amigos Plus AI

The traditional ATDD collaboration model of business, development, and testing remains essential in the future. What changes is the addition of AI as a collaborative enabler. AI participates as a facilitator that supports requirement clarification, scenario generation, and impact analysis, while humans remain responsible for decisions.

In this expanded model, AI supports agent-to-agent testing by helping simulate interactions between autonomous components and validating expected outcomes. However, ownership of business intent, risk decisions, and ethical or regulatory constraints remains firmly human. AI augments collaboration and accelerates alignment, but shared understanding continues to be a human responsibility.

Executable Specifications as the Primary Source of Truth

ATDD will increasingly replace traditional documentation with executable specifications that drive AI testing strategies. Acceptance tests become living documentation that continuously validates system behavior. They also serve as regression safety nets and auditable compliance artifacts in highly automated delivery environments.

Written in natural language, these executable specifications bridge business language, technical implementation, and AI-generated code. In systems that leverage AI self-healing of code, acceptance tests define the boundaries within which automated changes are allowed. Over time, executable specifications become the single authoritative definition of system behavior.

Deeper CI/CD and Autonomous Delivery Pipelines

ATDD will sit at the core of autonomous and semi-autonomous delivery pipelines powered by AI automation. In these pipelines, AI agents propose code changes, other agents validate them through agent-to-agent testing, and acceptance tests confirm behavioral correctness. Any change that violates acceptance criteria is automatically rejected.

This approach enables faster feedback loops while maintaining confidence and control. Future pipelines rely heavily on AI testing tools and are policy-driven, test-first, and behavior-focused. The emphasis shifts from how code is written to how systems behave under real business scenarios.
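A minimal sketch of such a gate, assuming acceptance checks are plain Python callables: a proposed change is accepted only if every check passes. The "age band" feature, `gate`, and both candidate functions are hypothetical, and a real pipeline would run a full acceptance suite instead of three lambdas.

```python
from typing import Callable, List, Tuple

Implementation = Callable[[int], str]
AcceptanceCheck = Tuple[str, Callable[[Implementation], bool]]

# Acceptance criteria for a hypothetical "age band" feature, agreed by humans.
ACCEPTANCE_CHECKS: List[AcceptanceCheck] = [
    ("under 18 is a minor", lambda impl: impl(17) == "minor"),
    ("18 is an adult", lambda impl: impl(18) == "adult"),
    ("65 and over is a senior", lambda impl: impl(65) == "senior"),
]


def gate(candidate: Implementation) -> Tuple[bool, List[str]]:
    """Accept the candidate only if every acceptance check passes."""
    failures = []
    for name, check in ACCEPTANCE_CHECKS:
        try:
            passed = check(candidate)
        except Exception:
            passed = False  # a crash counts as a violation
        if not passed:
            failures.append(name)
    return (not failures, failures)


# Two candidate changes, standing in for proposals from a code-generating agent.
def candidate_a(age: int) -> str:
    return "minor" if age < 18 else "senior" if age >= 65 else "adult"


def candidate_b(age: int) -> str:
    # Off-by-one: treats 18 as a minor, which violates the agreed criterion.
    return "minor" if age <= 18 else "senior" if age >= 65 else "adult"


print(gate(candidate_a))
print(gate(candidate_b))
```

The gate reports which criterion was violated, not just a pass or fail, so a rejected change comes back with a reason expressed in business terms.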

Low-Code and No-Code Acceptance Testing at Scale

With advances in AI testing tools and low-code platforms, acceptance testing becomes accessible to a broader audience. Product owners and business analysts can define acceptance criteria directly and validate system behavior without deep technical knowledge. AI automation translates business intent into executable acceptance tests, enabling faster and more reliable testing.

This democratization of ATDD expands ownership of quality beyond engineering teams. As self-healing test automation becomes more prevalent, acceptance tests automatically adapt to changes in the system while ensuring that automated corrections remain aligned with business expectations rather than drifting toward purely technical optimizations.

ATDD for Complex Systems, AI, Data, and Ethics

As systems incorporate machine learning models, decision engines, and adaptive behavior, ATDD evolves to address complexity. Acceptance tests begin to validate non-deterministic behavior, enforce model boundaries, and define constraints related to fairness, bias, and explainability.

For example, an acceptance test might state that a loan decision must include a clear explanation referencing multiple contributing factors. In this context, ATDD becomes a key component of AI testing strategies that focus on responsible and ethical AI. It ensures that AI self-healing mechanisms and autonomous agents operate within clearly defined behavioral limits.
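The loan example might look like this in code. This is a sketch under stated assumptions: `decide` is a simple rule-based stand-in for a real decision engine or model, and the thresholds, field names, and the "at least two distinct factors" rule are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LoanDecision:
    approved: bool
    factors: List[str] = field(default_factory=list)  # contributing factors cited


def decide(income: float, debt: float, missed_payments: int) -> LoanDecision:
    """Hypothetical rule-based stand-in for a real decision engine or model."""
    ratio = debt / income if income > 0 else float("inf")
    factors = [
        f"debt-to-income ratio {ratio:.2f}",
        f"{missed_payments} missed payments in history",
    ]
    approved = ratio < 0.4 and missed_payments == 0
    return LoanDecision(approved=approved, factors=factors)


def explanation_is_acceptable(decision: LoanDecision, minimum_factors: int = 2) -> bool:
    # The criterion checks the shape of the explanation, not the verdict itself,
    # so it still applies when the underlying model's output varies.
    distinct = {f.strip().lower() for f in decision.factors if f.strip()}
    return len(distinct) >= minimum_factors


# The same acceptance check applies to approvals and rejections alike.
for applicant in [(50_000, 10_000, 0), (30_000, 20_000, 2)]:
    decision = decide(*applicant)
    assert explanation_is_acceptable(decision), decision
```

Checking a property of the output (an explanation with multiple factors) is one way acceptance tests can constrain behavior that is not fully deterministic.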

From Testing Outcomes to Validating Intent

Traditional testing focuses on validating outputs. The future of ATDD emphasizes validating intent in AI-driven systems. Teams use acceptance tests to confirm that systems behave according to business goals, that AI interprets requirements correctly, and that rapid, automated change still respects domain rules.

This represents a fundamental mindset shift. ATDD evolves from asking whether something works to ensuring it does the right thing, even as AI automation accelerates delivery.

Conclusion

ATDD keeps teams aligned by agreeing on acceptance criteria before coding starts and turning those criteria into executable tests. Practiced with early collaboration among product owners, developers, and testers, business-readable scenarios, and automation in CI/CD pipelines, it reduces misunderstandings and rework while producing living documentation of system behavior.

As AI tools take on more test and code generation, acceptance tests become the behavioral boundary that automated changes must respect. By following the best practices above and keeping humans responsible for business intent, teams can maintain high standards while continuing to improve and adapt over time.

