

Machine Learning In Software Testing

This blog covers the concept of machine learning in software testing along with its uses, challenges, and best practices.

Hari Sapna Nair

January 13, 2026

Machine Learning (ML) and Artificial Intelligence (AI) are becoming seamlessly integrated into our daily lives, from helping with email composition to summarising articles and handling programming tasks. According to a February 2024 article from Semrush, the estimated annual growth rate for artificial intelligence is 33.2% between 2020 and 2027.

This pioneering trend is also being recognized by the software testing field, which is using AI and ML tools to update outdated tests, handle an array of test case scenarios, and increase test coverage. Organizations can increase software testing efficiency and save time by utilizing machine learning in software testing.

In this blog, we explore machine learning in software testing along with its uses, challenges, and best practices.

What is Machine Learning and AI?

Machine Learning (ML) and Artificial Intelligence (AI) are two closely related concepts in the field of computer science. AI focuses on creating intelligent machines capable of doing tasks that typically require human intelligence, such as visual perception, speech recognition, and decision-making. It involves developing algorithms that can reason, learn, and make decisions based on the input data given to the machine.

On the other hand, ML is a subset of AI that involves teaching machines to learn from data without being explicitly programmed. ML algorithms identify patterns and trends in data, enabling them to make predictions and decisions autonomously.

Why Machine Learning in Software Testing?

Automated software testing involves writing and running test scripts, usually with frameworks like Selenium. Selenium combines element selectors and actions to simulate user interactions with the user interface (UI). Element selectors identify UI elements so that scripts can click, hover, enter text, and validate elements. Although running these scripts requires minimal manual effort, the scripts themselves need consistent monitoring because software updates can break them.


Imagine a scenario where the “Sign up” button on a business page is moved to a different location on the same page and relabeled “Register now!”. Even for such a small change, the test script has to be rewritten with appropriate selectors. Many such test case scenarios require consistent monitoring.

Machine learning addresses such challenges by automating test case generation, error detection, and code scope improvement, enhancing productivity and quality for enterprises.
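To make the relocated-button scenario concrete, here is a minimal sketch of the idea behind "self-healing" locators. It is not how Selenium or any specific tool works: the attribute names, the sample elements, and the 0.5 threshold are all hypothetical, and plain string similarity from Python's `difflib` stands in for a trained model.

```python
from difflib import SequenceMatcher

# Hypothetical attributes recorded for the button when the test was first written.
KNOWN_BUTTON = {
    "tag": "button",
    "id": "signup-btn",
    "text": "Sign up",
    "css_class": "btn primary",
}


def similarity(a, b):
    """Return a 0..1 similarity ratio between two strings, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def score(known, candidate):
    """Average the attribute similarity over every attribute of the known element."""
    return sum(similarity(known[key], candidate.get(key, "")) for key in known) / len(known)


def find_best_match(known, candidates, threshold=0.5):
    """Return the most similar candidate, or None when nothing reaches the threshold."""
    best = max(candidates, key=lambda c: score(known, c), default=None)
    if best is None or score(known, best) < threshold:
        return None
    return best


# Hypothetical elements found on the updated page.
page_elements = [
    {"tag": "a", "id": "login-link", "text": "Log in", "css_class": "nav-link"},
    {"tag": "button", "id": "register-btn", "text": "Register now!", "css_class": "btn primary"},
    {"tag": "input", "id": "email", "text": "", "css_class": "form-field"},
]

match = find_best_match(KNOWN_BUTTON, page_elements)
print(match["id"] if match else "no match")
```

In this sketch the renamed button is still picked because its tag and CSS class are unchanged, even though its id and label differ. A real ML-based tool would learn which attributes matter instead of weighting them equally.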

Moreover, the use of machine learning in software testing leads to significant improvements in efficiency, reliability, and scalability. Automation testing tools powered by ML models can execute tests faster, reducing testing time and effort.

Check out the key distinctions between manual and automated software testing outlined in our blog on Manual Testing vs Automation Testing.

Uses of Machine Learning in Software Testing

Machine learning is revolutionizing software testing by providing various methods like predictive analysis, intelligent test generation, etc. These methods help us to optimize the testing process, reduce cost, and enhance the software quality.

Let us discuss the various methods by which we can use machine learning in software testing.

- Predictive Analysis: Using machine learning algorithms, we can predict potential software problem areas by analyzing historical test data. This proactive approach helps testers to anticipate and address vulnerabilities in advance, thereby enhancing overall software quality and reducing downtime.

- Intelligent Test Case Generation: Machine learning-driven testing tools automatically generate and prioritize test cases based on user interactions, ensuring comprehensive coverage of critical paths. This reduces manual effort while supporting robust software applications.

- Testing: Machine learning helps us to automate various types of tests. Examples include API testing by analyzing API responses for anomalies, unit testing by generating unit test cases based on code analysis, integration testing by identifying integration dependencies for test scenario generation, and performance testing by simulating various scenarios. This helps to enhance test coverage and efficiency while improving software reliability.


- Visual Validation Testing: Machine learning facilitates thorough comparison of images/screens across various browsers and devices, detecting even minor UI discrepancies. This ensures a consistent user experience across platforms and improves customer satisfaction.


- Adaptive Continuous Testing: In CI/CD environments, machine learning algorithms dynamically adapt and prioritize tests based on code changes, providing instant validation for recent alterations and ensuring continuous software quality.

- Test Coverage Analysis: After even a minor change in the application, it is essential to conduct tests to ensure proper functionality. However, running the entire test suite can be impractical, though often necessary. In this scenario, machine learning enables the identification of required tests and optimizes time usage. Furthermore, it also enhances the overall testing effectiveness by facilitating analysis of current test coverage and highlighting low-coverage and at-risk areas.

- Natural Language Processing (NLP) in Testing: Machine learning-powered testing tools equipped with NLP capabilities comprehend test requirements expressed in plain language and enable non-technical stakeholders to contribute to test scenario drafting, thereby enhancing collaboration and efficiency across teams.

- Classification of Executed Tests: Test automation tools expedite test execution and provide rapid feedback on failed tests, but diagnosing multiple failures can be time-consuming. Machine learning technology addresses this by categorizing tests, automatically identifying probable causes of bugs, and offering insights into prevalent failures and their root causes.

- Automated Test Writing by Spidering: Machine learning is commonly used for automated test creation. Initially, the technology scans the product, gathering functionality data and downloading the HTML code of all pages while assessing loading times. This process forms a dataset, which serves to train the algorithm on the expected behavior of the application. Subsequently, machine learning technology compares the current application state with its templates, flagging any deviations as potential issues.

- Robotic Process Automation (RPA) for Regression Testing: RPA helps in regression testing by automating repetitive tasks, such as data entry and test case execution, thereby streamlining the process and saving time and resources. For instance, RPA can seamlessly manage test suite re-execution post-software updates by integrating with version control systems (VCS), fetching the latest version, deploying it, executing tests, and validating results.
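As an illustration of the failure classification use case in the list above, the sketch below groups failed tests whose error messages differ only in volatile details. This is a rule-based stand-in for the clustering an ML tool would learn from data; the masking rules, test names, and error messages are hypothetical.

```python
import re
from collections import defaultdict


def signature(message):
    """Reduce an error message to a stable signature by masking volatile details."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses
    sig = re.sub(r"\d+", "<num>", sig)                 # ids, counts, timings
    sig = re.sub(r"'[^']*'", "<str>", sig)             # quoted values such as selectors
    return sig.strip().lower()


def group_failures(failures):
    """Map each signature to the names of the tests that failed with it."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[signature(message)].append(test_name)
    return dict(groups)


# Hypothetical failures from a single test run.
failed_tests = [
    ("test_login", "TimeoutError: element '#submit' not found after 30s"),
    ("test_signup", "TimeoutError: element '#register' not found after 45s"),
    ("test_cart_total", "AssertionError: expected 120 but got 118"),
    ("test_checkout", "TimeoutError: element '#pay' not found after 30s"),
]

groups = group_failures(failed_tests)
# Show the most common failure signature first.
for sig, tests in sorted(groups.items(), key=lambda item: -len(item[1])):
    print(f"{len(tests)} failure(s): {sig} -> {tests}")
```

Grouping failures this way lets a tester investigate one probable root cause per group instead of one per failed test.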

Predictive Analysis in Software Testing

Imagine a QA team member tasked with ensuring the quality of a complex web application that undergoes frequent updates and feature additions. In this scenario, traditional test automation methods can be inadequate and time-consuming.

To solve this issue, we can use machine learning algorithms to conduct predictive analysis on test data. This process involves using historical test results to forecast potential software issues before they manifest. By identifying patterns and trends, the machine learning model identifies sections of the application more susceptible to bugs or failures.

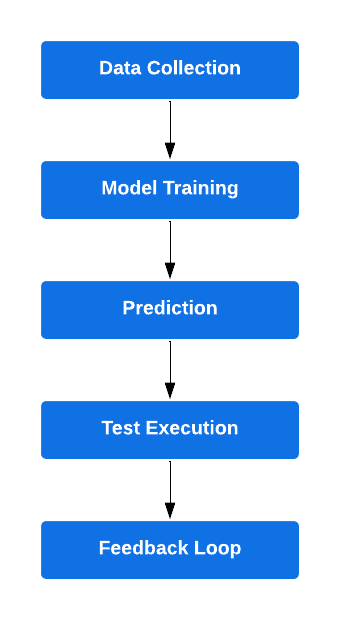

The steps used to implement the above-mentioned solution are as follows:

- Data Collection: Gather comprehensive historical test data consisting of test cases, outcomes, and application modifications.

- Model Training: Utilize this data to train a machine learning model, enabling it to recognize patterns associated with failures or bugs.

- Prediction: Once trained, employ the model to analyze new application changes or features. The model will now forecast potential problem areas or recommend specific tests likely to encounter issues.

- Test Execution: Direct testing efforts towards the predicted areas along with the standard test suite.

- Feedback Loop: Continuously incorporate test results into the model to refine its accuracy over time, ensuring ongoing improvement in predictive capabilities.
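The steps above can be sketched with a very simple model. The example below is an assumption-laden illustration, not a production approach: it estimates a per-module failure probability from historical outcomes using a smoothed failure rate, ranks changed modules by that estimate, and then folds new results back in. The class name, module names, and history are hypothetical.

```python
class FailureRiskModel:
    """Estimates per-module failure probability from historical test outcomes."""

    def __init__(self, prior_failures=1.0, prior_runs=2.0):
        # Smoothing prior: a module with no history gets a neutral 0.5 estimate.
        self.prior_failures = prior_failures
        self.prior_runs = prior_runs
        self.failures = {}
        self.runs = {}

    def train(self, history):
        """Model training and feedback loop: add (module, passed) records."""
        for module, passed in history:
            self.runs[module] = self.runs.get(module, 0) + 1
            if not passed:
                self.failures[module] = self.failures.get(module, 0) + 1

    def risk(self, module):
        """Prediction: smoothed share of past runs that failed for this module."""
        failures = self.failures.get(module, 0) + self.prior_failures
        runs = self.runs.get(module, 0) + self.prior_runs
        return failures / runs

    def rank(self, changed_modules):
        """Test execution order: riskiest changed modules first."""
        return sorted(changed_modules, key=self.risk, reverse=True)


# Data collection: hypothetical (module, passed) records from earlier runs.
history = [
    ("checkout", False), ("checkout", False), ("checkout", True),
    ("search", True), ("search", True), ("search", True),
    ("profile", True), ("profile", False),
]

model = FailureRiskModel()
model.train(history)

ranked = model.rank(["search", "checkout", "profile"])
print(ranked)

# Feedback loop: new failures in "search" raise its estimated risk.
model.train([("search", False), ("search", False)])
print(round(model.risk("search"), 3))
```

A real predictive model would use richer features, such as code churn or the author of a change, but the collect, train, predict, execute, and feed back cycle stays the same.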

How Does Machine Learning Produce Automated Tests?

Automated test case generation is a transformative process that involves the identification, creation, and execution of tests with minimal human intervention. Through the utilization of machine learning, new test cases can be generated or code defects can be detected by training models on existing test cases or code bases to uncover underlying trends.

The steps to generate automated test cases using machine learning are as follows:

- Data collection: In the data collection phase, an extensive dataset comprising test cases or code samples, along with their pass/fail outcomes, is compiled. This dataset serves as training data for the machine learning model, enabling it to learn patterns and correlations essential for effective automated testing.

- Data Training: Machine learning models are trained using historical testing data, which includes information about test cases, application behavior, and outcomes. During this phase, the machine learning algorithms learn patterns and relationships within the data to understand what constitutes a successful test and what indicates a failure.

- Feature Extraction: Machine learning algorithms extract relevant features or characteristics from the application code and testing data. These features could include UI elements, code syntax, user interactions, and performance metrics.

- Model Building: Using the extracted features, machine learning models are built to predict the outcome of new test scenarios. These models can take various forms, such as classification models (to predict pass/fail outcomes) or regression models (to predict numerical values, such as response times).

- Pattern Recognition: During pattern recognition, machine learning models analyze input features and their associated outputs to uncover correlations and dependencies within the dataset. By identifying these patterns, the model gains insights into the relationships between test case components and the probability of pass or fail outcomes, facilitating accurate predictions in automated testing scenarios.

- Test Case Generation: Once the machine learning models are trained and validated, they can be used to generate new test cases automatically. This process involves analyzing the application code, identifying areas that require testing, and generating test scenarios based on the learned patterns and relationships.

- Execution and Validation: The generated test cases are executed against the application, and the results are validated against expected outcomes. Machine learning algorithms also analyze the test results to identify patterns of failure or areas of improvement, which can inform future test generation iterations.

- Feedback Loop: As new testing data becomes available, the machine learning models are updated and refined based on the feedback. This iterative process ensures that the automated tests continue to adapt and improve over time, leading to more accurate and effective testing outcomes.
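To illustrate the classification model mentioned in the Model Building step, here is a minimal sketch of a logistic regression trained with gradient descent in plain Python. The two features (share of changed files a test touches, and the test's historical failure rate), the training data, and the hyperparameters are all hypothetical; in practice we would more likely reach for an established ML library.

```python
import math


def sigmoid(z):
    """Squash a real number into the 0..1 range."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, learning_rate=0.5, epochs=2000):
    """Fit weights and bias with batch gradient descent on the log loss."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in zip(samples, labels):
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        weights = [w - learning_rate * g / len(samples) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(samples)
    return weights, bias


def failure_probability(weights, bias, features):
    """Predict the probability that a test scenario fails (label 1)."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


# Hypothetical extracted features: [share of changed files touched, past failure rate].
samples = [[0.9, 0.8], [0.8, 0.6], [0.7, 0.9], [0.2, 0.1], [0.1, 0.2], [0.3, 0.0]]
labels = [1, 1, 1, 0, 0, 0]  # 1 = the test failed, 0 = the test passed

weights, bias = train_logistic(samples, labels)

p_risky = failure_probability(weights, bias, [0.85, 0.7])
p_safe = failure_probability(weights, bias, [0.15, 0.15])
print(f"risky scenario: {p_risky:.2f}, safe scenario: {p_safe:.2f}")
```

Scenarios with a high predicted failure probability are natural candidates for generating or prioritizing additional test cases, and the execution results can then be appended to the training data for the next iteration.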

Power of AI With TestMu AI

TestMu AI is an AI-native test orchestration and execution platform that lets you run manual and automated tests at scale across 10,000+ real devices and 3000+ browser and OS combinations.

It offers KaneAI, a generative AI testing agent that allows users to create, debug, and evolve tests using natural language. Built specifically for high-speed quality engineering teams, it drastically reduces the time and expertise needed to start test automation.

Features:

- Intelligent Test Generation: Effortless test creation and evolution through Natural Language (NLP) based instructions.

- Intelligent Test Planner: Automatically generate and automate test steps using high-level objectives.

- Multi-Language Code Export: Convert your automated tests into all major languages and frameworks.

- Sophisticated Testing Capabilities: Express sophisticated conditionals and assertions in natural language.

- API Testing Support: Effortlessly test backends and achieve comprehensive coverage by complementing existing UI tests.

- Increased Device Coverage: Execute your generated tests across 3000+ browsers, OS, and device combinations.

Challenges While Using Machine Learning in Software Testing

Using machine learning for test automation offers immense potential for improving efficiency and effectiveness. However, it also presents several challenges that we must address to leverage its benefits successfully. The challenges while using machine learning for test automation are as follows:

- Data Availability and Quality: Machine learning algorithms require substantial amounts of high-quality data to train effectively. Insufficient or poor-quality data can hinder the accuracy and reliability of machine learning models, impacting the effectiveness of test automation efforts.

- Complexity: Machine learning models can be inherently complex, making them challenging to understand and debug. This complexity can pose difficulties in interpreting model behavior and diagnosing issues, especially in the context of automation testing.

- Overfitting: Overfitting occurs when a model performs well on training data but fails to generalize to new data. This can occur due to model complexity or insufficient training data, leading to inaccuracies in test automation predictions.

- Maintenance: Machine learning models require regular retraining and updating to remain effective, particularly as the system under test evolves. And this continuous maintenance and monitoring can be time-consuming and resource-intensive.

- Integration: Integrating machine learning models into existing test automation frameworks can be challenging, requiring significant development effort and compatibility considerations.

- Explainability: Some machine learning models may lack explainability, making it challenging to understand the reasoning behind their predictions. This opacity can pose challenges in interpreting and trusting the results of machine learning-based test automation.

- Bias: Biases in data or preprocessing can lead to inaccurate results and flawed predictions in machine learning-based test automation. Identifying and mitigating these biases is essential to ensure the reliability and fairness of automated testing outcomes.

- Adaptability to Application Changes: Machine learning models must adapt to changes in the application under test to maintain relevance and effectiveness. However, accommodating frequent changes during development can be challenging, requiring proactive strategies to update and retrain models accordingly.

- Accuracy Verification: Ensuring the accuracy and reliability of machine learning algorithms in test automation requires rigorous validation and verification processes. Involving domain experts to assess model accuracy and refine algorithms is crucial for maximizing the efficiency and efficacy of machine learning-based testing.
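One common way to check for overfitting and verify accuracy is to compare a model's accuracy on its training data with its accuracy on held-out data it has never seen. The sketch below assumes a deliberately overfit-prone model (a 1-nearest-neighbour predictor that memorizes its training set) and hypothetical data in which each sample is a pair of lines changed and whether the build failed; the 0.2 tolerance is also an assumption.

```python
def nearest_neighbour_model(train):
    """Return a predictor that memorizes the training set (1-nearest neighbour)."""
    def predict(x):
        _, nearest_label = min(train, key=lambda sample: abs(sample[0] - x))
        return nearest_label
    return predict


def accuracy(predict, samples):
    """Share of samples whose predicted label equals the true label."""
    return sum(predict(x) == label for x, label in samples) / len(samples)


# Hypothetical (lines_changed, failed) samples; the training set contains noisy labels.
train = [(5, 0), (12, 0), (40, 1), (55, 1), (8, 1), (60, 0)]
holdout = [(7, 0), (11, 0), (45, 1), (58, 1), (3, 0), (50, 1)]

predict = nearest_neighbour_model(train)
train_acc = accuracy(predict, train)
holdout_acc = accuracy(predict, holdout)

# A large gap between training and holdout accuracy suggests overfitting.
TOLERANCE = 0.2  # hypothetical acceptable gap
overfitting_suspected = (train_acc - holdout_acc) > TOLERANCE
print(f"train={train_acc:.2f} holdout={holdout_acc:.2f} overfit={overfitting_suspected}")
```

Here the model scores perfectly on data it has memorized but noticeably worse on the holdout set, which is the warning sign to look for before trusting ML-based test predictions.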

Best Practices

By following some of the best practices, we can ensure that our products meet high-quality standards, fulfill customer expectations, and maintain a competitive edge in the market. Here are some refined best practices for testers:

- Embrace Simulation and Emulation: Leverage simulation and emulation tools to scrutinize software and systems within controlled environments. By simulating diverse scenarios, testers can validate their software’s resilience and rectify any anomalies prior to product release. LambdaTest simplifies app testing by offering automated testing on Emulators and Simulators, eliminating the need for an expensive device lab. To learn more about it, check out our blog on App Automation on Emulators and Simulators.

- Harness Automated Test Scripts: Deploy automated test scripts to streamline repetitive testing tasks, ensuring time savings and minimizing errors. It is important to develop these scripts as early as possible to ensure that they cover all critical features and aspects of the product, including functionality, performance, and security.

- Adopt Automation Frameworks: Implement automation frameworks like Testim, Functionize, Applitools, Mabl, Leapwork, etc. to organize and manage automated tests efficiently. These frameworks will provide a structured approach to automation, aligning with industry best practices and enabling testers to optimize their testing processes.

- Develop Comprehensive Test Data Sets: Testers must craft comprehensive test data sets covering critical scenarios, ensuring realism and comprehensiveness. These data sets will help us with thorough testing and enable testers to detect and address problems at an early stage, thereby enhancing product quality.

- Monitor and Analyze Results: Post-testing, monitor and analyze results meticulously to uncover issues and areas for improvement. By using analytics tools, we can find patterns and trends, empowering testers to refine the product.

Conclusion

To summarize, machine learning is transforming software testing! It analyzes historical data to predict outcomes, enabling faster and more accurate test case generation. However, challenges such as data quality, complexity, and integration come with the use of machine learning in automation testing. To address these issues, we can adopt rigorous validation processes and best practices like using emulators and machine learning-driven automation tools.

In this blog, we explored machine learning for automation testing in depth, covering its importance, uses, and examples like predictive analysis. We also looked at tools such as TestMu AI and discussed challenges and best practices, offering a comprehensive understanding of the role of machine learning in automation testing.
