The Testing Evolution Series, Part 1 – Where AI Falls Short and Humans Excel

Discover the current limitations of AI in software testing and why human QA professionals remain essential for strategic decision-making, exploratory testing, and quality assurance.

Ilam Padmanabhan

January 11, 2026

Every week, a new AI tool promises to revolutionize software testing. Teams everywhere are experimenting with AI-powered test generation tools, self-healing automation, and intelligent test analytics.

Amidst this whirlwind of change, QA professionals worldwide ask one crucial question: What does this mean for us?

This series aims to cut through the hype and explore AI’s real impact on software testing. We’ll examine how AI upgrades our tools and redefines roles, required skills, and career paths. Topics we’ll cover:

- Technical focus: What AI can and cannot do in software testing

- Career development: The evolving skills and new career paths in AI QA

- Human element: How to adapt to the emotional and practical realities of change

This discussion will be about staying relevant in a rapidly evolving landscape.

Note on terminology: I’ve grouped Large Language Models, Machine Learning, and Natural Language Processing under the broad term “AI.” While this is a simplification, it helps us focus on practical implications rather than technical nuances.

Note on predictions: This blog is based on some facts and a lot of opinions (from my firsthand experience and from other industry experts I follow on various platforms). From my broad understanding of where AI is headed (and from listening to too many podcasts), most experts agree on what the immediate future brings. But almost all of them openly admit that the medium- to long-term future of AI is impossible to predict with reasonable accuracy. So the ‘future’ I cover in this blog refers to the next few months to, at most, a year or two. Beyond that, only time (& AI) can tell!

A Quick AI Experiment

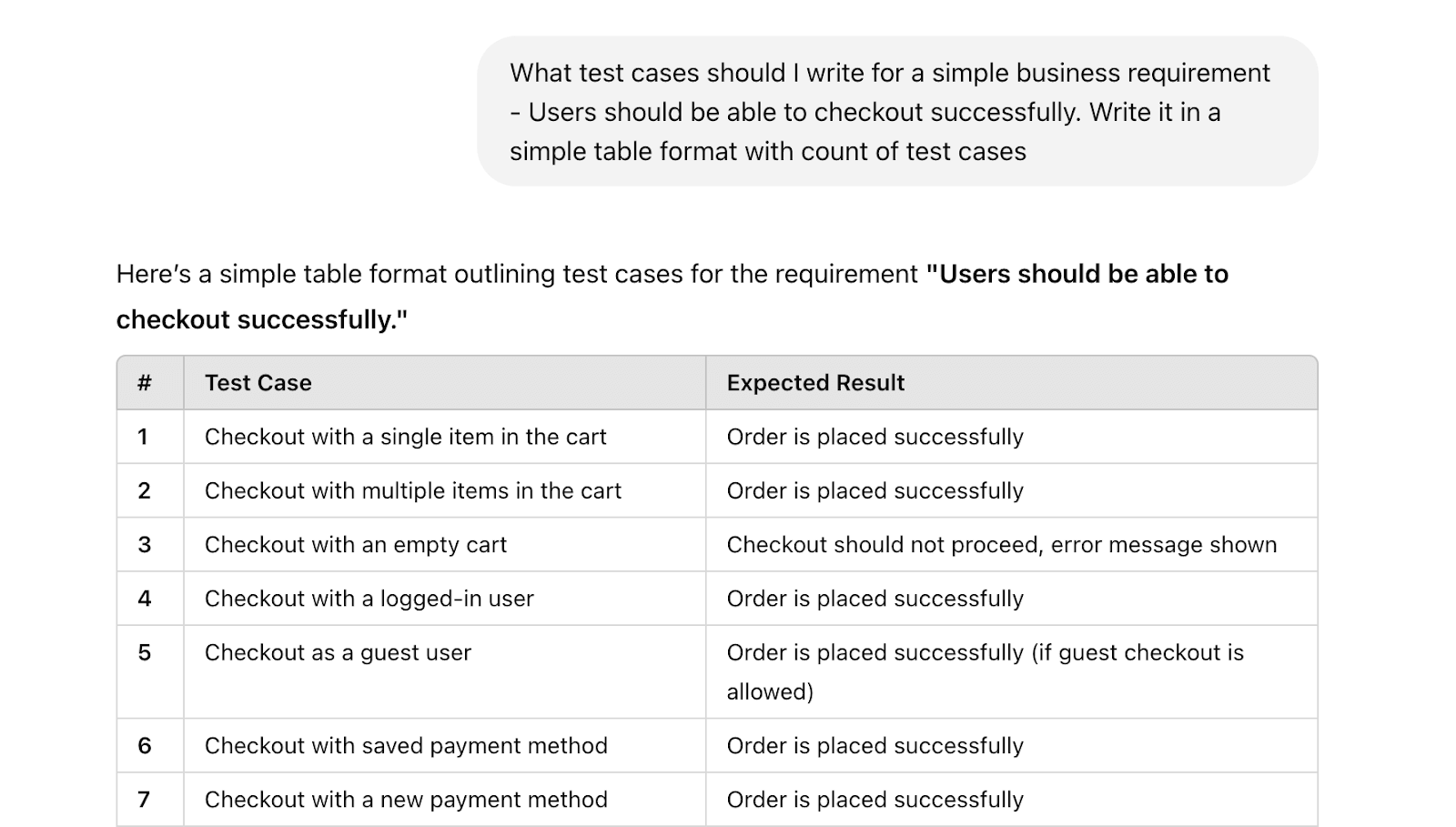

Before we dive in, I encourage you to try a fun activity (especially enjoyable for QA professionals): take any decent prompt about a business scenario and run it on multiple AI tools. Notice the similarities and differences in their responses. While you may not understand the inner workings of each AI model, you’ll start developing an intuition for what to expect from them.

I ran this experiment using Claude (from Anthropic), ChatGPT (from OpenAI) & Copilot (from Microsoft) with the following prompt:

Guess how many test cases each AI tool generated and what their focus areas were. I’ll reveal the answers at the end of this blog!
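If you try the experiment, a few lines of code can make the comparison less subjective. A minimal sketch, assuming you have copied each tool's test-case titles into a list; the titles below are placeholders, not the results I reveal at the end. Exact matching after normalization is crude (two tools rarely word the same case identically), so treat the numbers as a starting point for reading the cases side by side.

```python
def normalize(title: str) -> str:
    """Lowercase and collapse whitespace so trivially different wordings match."""
    return " ".join(title.lower().split())


def compare(results: dict[str, list[str]]) -> dict[str, dict[str, int]]:
    """Per tool: distinct cases, cases every tool produced, and cases only it produced."""
    sets = {tool: {normalize(t) for t in titles} for tool, titles in results.items()}
    shared_by_all = set.intersection(*sets.values())
    report = {}
    for tool, cases in sets.items():
        others = set().union(*(s for name, s in sets.items() if name != tool))
        report[tool] = {
            "total": len(cases),
            "shared_by_all": len(cases & shared_by_all),
            "unique": len(cases - others),
        }
    return report


# Placeholder titles for illustration; substitute the lists your tools return.
results = {
    "ChatGPT": ["Successful checkout", "Expired card declined", "SQL injection in coupon field"],
    "Copilot": ["successful  checkout", "Pay button disabled while loading", "Expired card declined"],
    "Claude": ["Successful checkout", "Stock runs out mid-checkout", "Order rolled back on payment failure"],
}
for tool, stats in compare(results).items():
    print(tool, stats)
```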

The Current Reality of AI in Testing

To map out the future of QA roles (survey report linked), we need to understand both the capabilities and limitations of AI. This shapes our direction in two key ways: using AI to boost human productivity and applying uniquely human skills to the gaps AI can’t fill.

The limitations I discuss below reflect the state of AI tools in early 2025. In the world of AI, today’s limitations could be tomorrow’s solved problems – or they could reveal even more complex challenges we haven’t anticipated.

That’s precisely what makes human expertise in QA more valuable than ever. AI tools won’t make our roles disappear—they’ll transform them, requiring us to develop new skills and perspectives while building on our core testing knowledge.

I’ll use an example of a simple ecommerce solution to illustrate my thinking throughout this series.

AI’s Testing Limitations: The QA Professional’s Perspective

Now that all my disclaimers are out of the way, let’s dive into the limitations of current AI technologies from a QA professional’s perspective.

1. Understanding Requirements (and the Nuances)

AI tools are good at reading text and can gobble up your entire backlog in seconds. They read extensively and rapidly, but herein lies the challenge: they read the lines, read between the lines, and make their own interpretations.

The problem is that they read too much into requirements when they shouldn’t, and too little when they should. Consider a simple requirement: “Users should be able to checkout successfully.”

AI can parse this basic statement but might struggle with implicit business rules. For example, it might miss that:

- Users should be able to apply discount codes after viewing shipping costs

- Certain product combinations need special handling

- “Free shipping on orders over $50” excludes gift cards or varies by region

These subtle nuances require human expertise to translate into comprehensive test coverage. As QA professionals improve their prompt engineering skills, this gap may narrow—but more on that later in the series.
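Spelling out an implicit rule is what turns it into test coverage. A minimal sketch, assuming invented rules for illustration: gift cards don't count toward the threshold, and the threshold is $50 in one region and $75 in another. The real rules would come from the business, not from this code.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical rules, invented for illustration: the threshold varies by region
# and gift cards do not count toward it.
FREE_SHIPPING_THRESHOLD = {"US": Decimal("50"), "CA": Decimal("75")}


@dataclass
class LineItem:
    sku: str
    price: Decimal
    quantity: int = 1
    is_gift_card: bool = False


def qualifies_for_free_shipping(items: list[LineItem], region: str) -> bool:
    threshold = FREE_SHIPPING_THRESHOLD.get(region)
    if threshold is None:
        return False  # assumption: no free-shipping offer outside listed regions
    eligible_total = sum(
        (item.price * item.quantity for item in items if not item.is_gift_card),
        Decimal("0"),
    )
    return eligible_total > threshold  # "over $50": exactly $50 does not qualify


shoes = LineItem("SHOE-1", Decimal("40"))
gift_card = LineItem("GIFT-25", Decimal("25"), is_gift_card=True)

assert qualifies_for_free_shipping([LineItem("SHOE-2", Decimal("60"))], "US")
assert not qualifies_for_free_shipping([shoes, gift_card], "US")  # only $40 counts
assert not qualifies_for_free_shipping([LineItem("SHOE-2", Decimal("60"))], "CA")
assert not qualifies_for_free_shipping([LineItem("SHOE-3", Decimal("50"))], "US")  # boundary
```

Even the boundary case is a question for the business: does an order of exactly $50.00 count as “over $50”? The sketch assumes it does not.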

2. Test Case Generation Challenges

AI-generated test cases run into several challenges.

Complex State Interactions

When testing a checkout flow, AI performs admirably with basic scenarios but struggles with complex state interactions.

It can test individual states like “cart updated” or “payment processed” but often misses real-world combinations such as:

- The user updates the cart while the payment is processing

- Inventory drops below the threshold during checkout

- The saved payment method expires during the transaction

- Shipping rates change during the finalization

- Promotional code expires during checkout completion

These state-dependent edge cases frequently occur in production but are often absent from AI-generated test suites. This limitation isn’t insurmountable, but overcoming it requires humans to develop the skills to steer AI toward these scenarios.
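One way a tester can keep such combinations from being silently skipped is to enumerate them explicitly, prune the ones that can't happen, and hand the rest to an AI tool or a test designer. A minimal sketch, with hypothetical stage names and the interrupting events taken from the list above:

```python
from itertools import product

# Hypothetical checkout stages; the events come from the list above.
STAGES = ["cart review", "payment processing", "order finalization"]
EVENTS = [
    "cart updated",
    "inventory drops below threshold",
    "saved payment method expires",
    "shipping rates change",
    "promo code expires",
]


def interaction_scenarios(stages: list[str], events: list[str]) -> list[str]:
    """Pair every checkout stage with every event that might interrupt it."""
    return [f"{event} during {stage}" for stage, event in product(stages, events)]


scenarios = interaction_scenarios(STAGES, EVENTS)
print(len(scenarios))  # 15 (3 stages x 5 events)
print(scenarios[0])    # cart updated during cart review
```

A full cross product grows quickly, so in practice a human decides which pairs are realistic and which matter most; pairwise (all-pairs) techniques are a common way to keep larger grids manageable.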

The “AI Being AI” Problem

AI engines are creative, sometimes to a fault. This manifests in several ways:

- Volume overload: AI might generate hundreds of test cases, many with minor variations, adding little value. For example, creating dozens of cart combinations (1 item, 2 items, 10 items) without knowing which scenarios are business-critical.

- Lack of repeatability: Running the same prompt twice will likely generate a different set of test cases, sometimes subtly and sometimes completely. One run might focus on payment scenarios, another on shipping calculations, which makes it very difficult to maintain a consistent test strategy over time (especially if the AI model does not have a very large context window).

- Prioritization blindness: AI can’t inherently prioritize test cases. It might give equal weight to testing a rare edge case (applying multiple discount codes while changing shipping address) and a critical path (standard checkout with a saved credit card). Without understanding business priorities, AI can’t focus testing on what matters most.
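Volume overload is often tamed with a classic technique: equivalence partitioning, where cases that exercise the same behavior collapse into one representative. A minimal sketch, assuming hypothetical cart-size classes (the boundaries are illustrative and would need confirming with the business):

```python
def cart_size_class(n: int) -> str:
    """Hypothetical equivalence classes for cart size; the boundaries (1 item,
    2-9 items, 10+ as a bulk order) are assumptions to confirm with the business."""
    if n < 0:
        raise ValueError("cart size cannot be negative")
    if n == 0:
        return "empty"
    if n == 1:
        return "single item"
    if n < 10:
        return "multiple items"
    return "bulk (10+)"


def representatives(cart_sizes: list[int]) -> dict[str, int]:
    """Keep the first generated case from each class and drop the near-duplicates."""
    chosen: dict[str, int] = {}
    for n in cart_sizes:
        chosen.setdefault(cart_size_class(n), n)
    return chosen


# Cart sizes taken from a hypothetical AI-generated suite.
generated = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 25, 50]
print(representatives(generated))
# {'single item': 1, 'multiple items': 2, 'bulk (10+)': 10}
```

Fourteen generated cases collapse to three, and the output exposes a gap the volume hid: no case covers an empty cart. Boundary values (9 and 10 here) are usually worth keeping too, which is another call a human makes.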

In our ecommerce example, while AI can generate many checkout scenarios, it can’t:

- Reproduce the same critical test cases across different runs

- Distinguish between must-test (payment processing) and nice-to-have (UI animations)

- Test the most common customer journeys

- Maintain a test strategy aligned with business goals
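Prioritization doesn't have to be sophisticated to beat giving everything equal weight. A minimal sketch of risk-based ordering, assuming a simple impact-times-frequency score; the scores are hypothetical and are precisely the input only humans (and production analytics) can supply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredCase:
    name: str
    impact: int     # 1-5, assigned by a human: business cost if this path breaks
    frequency: int  # 1-5, ideally from analytics: how often customers take this path

    @property
    def risk(self) -> int:
        return self.impact * self.frequency


def prioritize(cases: list[ScoredCase]) -> list[ScoredCase]:
    """Highest risk first; sorted() is stable, so ties keep their original order."""
    return sorted(cases, key=lambda c: c.risk, reverse=True)


suite = [
    ScoredCase("Checkout animation plays smoothly", impact=1, frequency=4),
    ScoredCase("Two discount codes while changing shipping address", impact=3, frequency=1),
    ScoredCase("Standard checkout with saved credit card", impact=5, frequency=5),
]
for case in prioritize(suite):
    print(case.risk, case.name)
```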

Human testers must curate and maintain the test suite, developing skills to work with AI solutions and formulate AI-supported test strategies. We’ll likely see the emergence of AI trainers—a topic we’ll explore later in this series.

3. Test Execution Limitations

Let’s continue with our ecommerce checkout flow example. AI test suites face several fundamental execution challenges:

Timing and Predictability

While AI can run automated tests, it struggles with dynamic timing scenarios that are business-critical in industries like ecommerce.

For instance, if a payment processor temporarily slows down, AI might fail the test rather than implement intelligent waits.

It may not recognize that a 3-second payment processing delay is acceptable, while a 10-second delay indicates a problem. Without business context, AI can’t make these nuanced timing decisions.
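The business context can be encoded directly: poll for the outcome instead of failing on a fixed sleep, then judge the measured delay against thresholds someone with domain knowledge has set. A minimal sketch, assuming the 3-second and 10-second figures from the example above and a simulated (hypothetical) payment processor:

```python
import time
from typing import Callable, Optional

# Business-defined latency budget (assumed values, taken from the example above):
# up to 3 s is acceptable, anything slower is flagged, 10 s or more is a failure.
ACCEPTABLE_S = 3.0
FAILURE_S = 10.0


def wait_for(condition: Callable[[], bool], timeout_s: float, poll_s: float = 0.1) -> Optional[float]:
    """Poll `condition` until it returns True; return the elapsed seconds, or None on timeout."""
    start = time.monotonic()
    while True:
        elapsed = time.monotonic() - start
        if condition():
            return elapsed
        if elapsed >= timeout_s:
            return None
        time.sleep(poll_s)


def classify_latency(elapsed_s: Optional[float]) -> str:
    """Map a measured delay to a verdict using the business thresholds, not a fixed timeout."""
    if elapsed_s is None or elapsed_s >= FAILURE_S:
        return "fail"
    if elapsed_s > ACCEPTABLE_S:
        return "warn"
    return "pass"


# Simulated payment processor that confirms after roughly 0.2 s (hypothetical).
confirmed_at = time.monotonic() + 0.2
elapsed = wait_for(lambda: time.monotonic() >= confirmed_at, timeout_s=FAILURE_S)
print(classify_latency(elapsed))  # pass
```

The code is trivial; the thresholds are not. Whether 3 seconds is acceptable depends on the payment provider, the market, and customer expectations.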

Environmental Dependencies

Consider a test where inventory updates during checkout. The test might pass in isolation but fail when run as part of a larger suite due to database state changes. AI might lack understanding of:

- Which data states need preservation between tests

- How to handle external service dependencies

- When to mock versus use real services

- What constitutes a “clean” test environment

Without this nuanced understanding, results may not be trustworthy. Humans might face similar issues, but they’re expected to understand the test ecosystem more comprehensively to make sense of the results.
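Deciding what to isolate and what to mock is exactly the judgment described above. A minimal sketch, assuming a hypothetical in-memory inventory and checkout function: the inventory is real but snapshotted and restored around the test, while the external payment gateway is replaced with `unittest.mock.Mock`.

```python
import copy
from contextlib import contextmanager
from unittest.mock import Mock


class InMemoryInventory:
    """Hypothetical stand-in for the shop's inventory database."""

    def __init__(self, stock: dict[str, int]):
        self.stock = stock

    def reserve(self, sku: str, qty: int) -> None:
        if self.stock.get(sku, 0) < qty:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= qty


@contextmanager
def isolated(inventory: InMemoryInventory):
    """Snapshot inventory state and restore it afterwards, even if the test fails,
    so the next test starts from a known-clean state."""
    snapshot = copy.deepcopy(inventory.stock)
    try:
        yield inventory
    finally:
        inventory.stock = snapshot


def checkout(inventory, payment_gateway, sku: str, qty: int, amount: float):
    """Hypothetical checkout: reserve stock, then charge through the gateway."""
    inventory.reserve(sku, qty)
    return payment_gateway.charge(amount)


inventory = InMemoryInventory({"SNEAKER-42": 1})
gateway = Mock()  # external service mocked: no real charges in tests
gateway.charge.return_value = {"status": "approved"}

with isolated(inventory):
    result = checkout(inventory, gateway, "SNEAKER-42", 1, 89.99)
    assert result["status"] == "approved"
    assert inventory.stock["SNEAKER-42"] == 0

# State is restored, so the next test sees the original stock level.
assert inventory.stock["SNEAKER-42"] == 1
gateway.charge.assert_called_once_with(89.99)
```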

Reproducibility Challenges

Even with identical test cases, AI execution might yield different results because:

- It may handle race conditions differently in each run

- Response time variations affect test flow

- Cache states impact behavior

- Third-party service interactions vary

- Database load affects timing

- And many more variables that experienced testers will recognize!

These inconsistencies make it difficult to trust test results and distinguish genuine issues from test flakiness. Again, these problems are fixable but require testers to understand and learn how to work effectively with AI models.
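A common first step in separating genuine failures from flakiness is simply rerunning a test and looking at the pattern of outcomes. A minimal sketch, with a hypothetical test whose outcome depends on hidden state:

```python
from typing import Callable


def classify_by_reruns(test: Callable[[], None], runs: int = 5) -> str:
    """Rerun a test and label it 'pass', 'fail', or 'flaky' (mixed outcomes).

    Only AssertionError counts as a failed run; any other exception propagates,
    because an error in the test itself is a different problem from flakiness.
    """
    outcomes = []
    for _ in range(runs):
        try:
            test()
            outcomes.append(True)
        except AssertionError:
            outcomes.append(False)
    if all(outcomes):
        return "pass"
    if not any(outcomes):
        return "fail"
    return "flaky"


# Hypothetical test whose outcome depends on hidden state (here, a call counter
# standing in for cache state, timing, or third-party responses).
calls = {"n": 0}


def order_total_matches():
    calls["n"] += 1
    if calls["n"] % 2:
        raise AssertionError("total mismatch")


print(classify_by_reruns(lambda: None))         # pass
print(classify_by_reruns(order_total_matches))  # flaky
```

Rerunning only detects flakiness. Finding the race condition, cache state, or slow dependency behind it still takes a tester who understands the system.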

4. The User Experience Gap: Who’s the User?

While AI might excel at testing API calls, database transactions, and other machine-to-machine interactions in our e-commerce system, it fundamentally struggles with human behavior patterns.

Product Search and Filtering

Consider how differently machines and humans approach product search. AI can systematically test that search functions return correct results and narrow down options.

However, it misses the human element. A real user might:

- Misspell “sneakers” as “snekers” but still expect relevant results

- Apply filters in an illogical order (setting price range after picking size and color)

- Get frustrated with too many results and refine their search multiple times

- Scan images rather than reading descriptions, missing key product details

Here, AI testing focuses on functional correctness but misses the usability aspects that determine whether a user successfully finds and purchases their desired product.
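Once a tester has observed behavior like misspelled queries, it can be captured as a regression check. A minimal sketch, assuming a hypothetical four-item catalog and using Python's `difflib.get_close_matches` as a stand-in for a real search engine's typo tolerance; a real suite would call the shop's actual search API.

```python
import difflib

# Hypothetical catalog; difflib stands in for the real search backend.
CATALOG = ["sneakers", "sandals", "slippers", "boots"]


def search(query: str, cutoff: float = 0.75) -> list[str]:
    """Return up to three catalog entries that closely match the query."""
    return difflib.get_close_matches(query.lower().strip(), CATALOG, n=3, cutoff=cutoff)


# Misspellings a tester has seen real shoppers type (illustrative examples).
for typo in ["snekers", "sneekers", "Sneakers "]:
    assert "sneakers" in search(typo), f"no relevant result for {typo!r}"
print("typo-tolerance checks passed")
```

The code only checks what a human decided to check; knowing which misspellings, filter orders, and refinements real users produce is the observational work AI doesn't do.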

Shopping Cart Management

While AI excels at testing the technical flow of add/remove/update cart operations, it can’t anticipate typical human shopping patterns. Real users often:

- Add items to the cart across multiple sessions over several days

- Switch between devices (mobile to desktop) mid-shopping

- Keep items in the cart as a wishlist with no immediate intention to purchase

- Respond emotionally to shipping costs or delivery dates, modifying their cart contents accordingly

AI can verify that all these operations work correctly from a technical standpoint, but it can’t evaluate whether the experience feels natural and intuitive, or identify friction points that might lead to cart abandonment.

User experience testing is one area I expect AI tools to disrupt only minimally in the near term.

AI’s Black Box Problem: How Much Trust Can We Put in AI?

The biggest limitation of AI in testing may not be the technology itself but the transparency—or lack of it.

Many AI systems operate as black boxes, providing results without explaining their underlying logic. This creates a significant problem when QA teams see test failures or unexpected behavior.

How can we validate results or maintain accountability when the decision-making process remains hidden? An even more profound question: when things go wrong, who bears responsibility? For now, the answer is clear—humans remain accountable. But this creates a dilemma: how can teams or individuals own decisions made by systems they can’t fully understand?

Organizations must address this by establishing policies that clearly define accountability. Today’s standard is “humans supported by AI,” where people serve as the ultimate decision-makers.

But as AI generates and manages increasingly complex test suites, this balance becomes harder to maintain. Even today, QA teams struggle to manage manually written and maintained test suites. What happens when AI accelerates test generation and the volume explodes? Humans will still need to review, customize, and adapt these tests to business needs, but the workload may outpace their capacity.

To succeed in this new world, QA professionals must invest in skills that bridge the gap between AI capabilities and human expertise. And organizations need to rethink their ownership and accountability frameworks to leverage AI without exposing themselves to unnecessary risk.

This transition won’t be easy. It demands flexibility, collaboration, and experimentation, topics we’ll explore in greater detail later in the series.

Who Is Accountable for Bugs?

AI’s role in finding and managing software bugs presents a paradox for development teams. This double-edged sword manifests in two distinct ways:

Edge 1: A Flood of False Positives

AI tools can be overzealous and end up flagging issues that aren’t problems:

- These false positives can overwhelm development teams with non-issues

- Developers waste valuable time investigating and attempting to fix non-existent bugs

- Over time, this leads to diminished trust in the AI system, causing real issues to be overlooked

For example, an AI might flag perfectly functional code as buggy due to an unconventional (but valid) coding style or misinterpret intentional edge case handling as an error.

Edge 2: Missed Critical Bugs

Conversely, AI systems sometimes fail to detect genuine critical bugs:

- These false negatives allow real issues to slip through into production code

- AI might miss subtle bugs requiring contextual understanding or domain knowledge

- This can result in diminished software quality and user-facing issues

An example would be an AI missing a logic error in a complex algorithm because it doesn’t understand the underlying business logic, or failing to identify a race condition in concurrent operations.

This duality creates several challenges for development teams:

- Resource allocation puzzle: How do you balance time verifying AI-flagged issues against the risk of missing real bugs?

- Trust vs. vigilance: Developers find themselves caught between trusting the AI and maintaining healthy skepticism

- Calibration conundrum: Reducing false positives often increases false negatives and vice versa

- Blame game: When bugs escape to production, what is to blame: the AI or human oversight?
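The calibration conundrum is easy to see with a few numbers. A minimal sketch using made-up detector scores and human triage verdicts (illustrative only, not data from any real tool), counting both error types at three flagging thresholds:

```python
# Made-up confidence scores from a hypothetical AI bug detector, each paired with
# the verdict humans reached after triage (True = real bug). Illustrative only.
findings = [
    (0.95, True), (0.90, False), (0.80, True), (0.70, False),
    (0.60, True), (0.40, False), (0.30, True), (0.10, False),
]


def errors_at(threshold: float) -> tuple[int, int]:
    """Count (false positives, false negatives) if we flag every score >= threshold."""
    false_pos = sum(1 for score, real in findings if score >= threshold and not real)
    false_neg = sum(1 for score, real in findings if score < threshold and real)
    return false_pos, false_neg


for t in (0.2, 0.5, 0.85):
    fp, fn = errors_at(t)
    print(f"threshold={t}: false positives={fp}, false negatives={fn}")
```

Lowering the threshold from 0.85 to 0.2 cuts missed bugs from 3 to 0 but triples the false alarms (from 1 to 3). Where to sit on that curve depends on the cost of each kind of error, which is a business decision rather than a model setting.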

At its core, AI promises to make QA more efficient, but this efficiency comes with trade-offs. While AI can undoubtedly enhance testing processes, it also raises fundamental questions about trust, oversight, and the limits of automation. The key to success lies in finding the right balance between human expertise and AI capabilities—a topic we’ll explore further in this series.

Can AI Write Code Efficiently, and What Are the Limitations of AI Code Generation?

Short answer: AI can’t write perfect code beyond super simple business requirements—yet.

Of all areas in software engineering, code writing is experiencing the most dramatic change due to AI. Tools like ChatGPT and Copilot speed up development, but speed must be balanced with accuracy to avoid compromising customer experiences.

With clear, well-defined user stories, AI can generate functional code faster than ever before. For example:

An AI system can generate code to meet these requirements, covering scenarios for successful logins and error handling for invalid credentials. At first glance, this looks complete and impressive. But under closer inspection, you can see the critical gaps:

- Security events: The AI may not properly log security events, potentially leaving breaches undetected and systems more vulnerable.

- Localization standards: Error messages might not align with organizational localization standards, compromising usability across regions.

- Edge cases: AI typically misses nuances such as integrating with MFA systems or handling time-based account lockout resets.
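To make these gaps concrete, here is a hypothetical sketch of what a reviewer might add around an AI-generated credential check: security-event logging through Python's standard `logging` module and a lockout that resets after a time window. The class name, the five-attempt limit, and the 15-minute window are assumptions, and MFA integration is out of scope. Returning status codes rather than user-facing text also leaves the UI layer free to apply localization standards.

```python
import logging
import time
from typing import Callable

security_log = logging.getLogger("security")  # hypothetical logger name

MAX_FAILURES = 5           # assumption: lock after 5 consecutive failures
LOCKOUT_SECONDS = 15 * 60  # assumption: lockout resets after 15 minutes


class LoginGuard:
    """Hypothetical wrapper adding security-event logging and a time-based lockout."""

    def __init__(self, verify: Callable[[str, str], bool],
                 clock: Callable[[], float] = time.monotonic):
        self.verify = verify  # the AI-generated credential check
        self.clock = clock    # injectable so tests can control time
        self.failures: dict[str, int] = {}
        self.locked_until: dict[str, float] = {}

    def login(self, user: str, password: str) -> str:
        now = self.clock()
        if self.locked_until.get(user, 0.0) > now:
            security_log.warning("login attempt on locked account user=%s", user)
            return "locked"
        if self.verify(user, password):
            self.failures.pop(user, None)
            security_log.info("login success user=%s", user)
            return "ok"
        count = self.failures.get(user, 0) + 1
        self.failures[user] = count
        security_log.warning("login failure user=%s attempt=%d", user, count)
        if count >= MAX_FAILURES:
            self.locked_until[user] = now + LOCKOUT_SECONDS
            self.failures[user] = 0
            security_log.error("account locked user=%s", user)
        return "invalid"


# Usage with a fake clock so the lockout window can be tested without waiting.
fake_now = [0.0]
guard = LoginGuard(lambda u, p: p == "correct-horse", clock=lambda: fake_now[0])
for _ in range(MAX_FAILURES):
    guard.login("ana", "wrong")
print(guard.login("ana", "correct-horse"))  # locked, even with the right password
fake_now[0] += LOCKOUT_SECONDS + 1
print(guard.login("ana", "correct-horse"))  # ok, lockout has expired
```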

This is precisely where human expertise becomes irreplaceable. QA professionals don’t merely fix what AI missed—they refine, validate, and stress-test AI-generated code to ensure it is:

- Robust: Can handle edge cases and unexpected scenarios.

- Maintainable: Written in a way that follows best practices for future updates.

- Aligned with business goals: Meets organizational and user expectations.

In short: AI is speeding up coding but still needs human oversight to bridge the gap between functional and perfect. QA professionals are the key to making AI-generated code meet real-world quality and reliability standards.

Beyond the Limitations: What Can AI Do?

After reviewing these limitations, let’s pause for a moment.

Reading this after my coffee break, I realized it might sound like AI offers little value in QA. That’s not the case.

AI’s capabilities in testing are remarkable and transformative—as I’ve detailed in previous blogs. The purpose of this analysis isn’t to dismiss AI’s value but to identify where human expertise must fill critical gaps.

Specialized AI tools designed for testing, like KaneAI, tend to handle these challenges better than general-purpose AI models. Just as we trust specialists over generalists in medicine, the same principle applies to AI solutions in QA.

Selecting the right AI tool stack can save significant time and money and keep teams focused. With the rise of AI in software testing, it’s crucial to stay competitive by upskilling or polishing your skill sets. The KaneAI Certification proves your hands-on AI testing skills and positions you as a future-ready, high-value QA professional.

The Human Advantage: Areas Where QA Professionals Remain Essential

We are still quite far from being able to replace human judgment completely. Here are some areas where the human advantage is paramount.

Strategic Decision Making

Testing strategy requires deep business understanding and judgment that AI currently cannot replicate. Organizations rely on human expertise for:

- Developing testing strategies aligned with broader business objectives and risk tolerance—for instance, deciding whether to prioritize security testing for financial applications or focus on performance testing for streaming services

- Making nuanced resource allocation decisions based on team capabilities, project constraints, and business priorities—including determining when to use AI tools versus performing manual testing

- Determining optimal test timing and scope while considering factors like market pressure, release schedules, and stakeholder needs

- Taking accountability for testing decisions with legal and business implications, as AI cannot serve as the responsible party in compliance or audit scenarios

Stakeholder Communication

The human element remains irreplaceable in stakeholder interactions, such as requirements analysis, where emotional intelligence and contextual understanding are crucial:

- Translating complex technical findings into business-relevant insights that resonate with different stakeholder groups—from developers to C-suite executives

- Negotiating and adjusting testing priorities based on evolving business needs and constraints while maintaining productive relationships across teams

- Managing expectations and building trust with non-technical stakeholders who need clear explanations of testing processes and results

- Facilitating productive discussions about quality trade-offs and risk management that require understanding organizational dynamics and politics

Exploratory Testing

Exploratory testing epitomizes creativity, intuition, and adaptability—distinctly human traits. While AI can automate test cases and suggest scenarios, it can’t think critically to uncover the unexpected. Human testers bring unique perspectives to identify edge cases and user behaviors that emerge from real-world usage patterns.

I expect the following areas to remain firmly in the human realm for the foreseeable future:

- Identifying unexpected user behaviors and edge cases that emerge from real-world usage patterns rather than documented requirements

- Adapting testing approaches on the fly based on discoveries and insights gained during the testing process

- Recognizing subtle patterns and potential issues that might not be apparent in automated testing results

- Applying domain knowledge and user empathy to uncover scenarios that automated tools might miss

Ethical and Legal Compliance

The ethical and legal landscapes require nuanced interpretation and accountability—areas where human oversight remains essential.

While AI can perform algorithm-based checks, it can’t replace the judgment required to interpret regulations or anticipate ethical dilemmas. I expect the following to remain with human testers:

- Interpreting and applying regulatory requirements that often demand contextual understanding and nuanced interpretation

- Evaluating potential biases in AI systems and ensuring fair treatment across diverse user groups

- Assessing privacy implications and data handling practices against evolving legal frameworks and ethical standards

- Maintaining accountability for compliance decisions, as regulators and stakeholders expect human oversight of critical systems

Ultimately, compliance represents a responsibility that regulators and stakeholders expect humans to shoulder, especially in high-risk industries like healthcare, finance, and government.

Human-AI Partnership: Performance and Security Testing

AI demonstrates remarkable efficiency in performance and security testing, automating large-scale tests and identifying vulnerabilities at unprecedented speed.

However, neither domain can be entrusted entirely to AI—both require human expertise to interpret results, understand context, and make informed decisions.


Performance Testing

AI can execute performance tests at scale, but interpreting the results demands human insight. Meaningful performance testing goes beyond raw metrics: it’s about user experience and aligning technical performance with business objectives. Human testers play essential roles in:

- Converting raw performance data into real-world insights with the help of AI tools.

- Setting performance thresholds based on business goals and user needs.

- Providing architectural recommendations for long-term stability and scalability.

By combining the power of AI with the human context of testers, performance testing becomes a force for great user experiences.
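Setting the threshold is the human half of this partnership. A minimal sketch: a nearest-rank 95th percentile over made-up load-test latencies, checked against a hypothetical 2-second budget that would, in reality, come from business goals and user research.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical business threshold: 95% of checkouts should complete within 2 s.
P95_BUDGET_S = 2.0

# Checkout latencies in seconds from a load-test run (made-up values).
latencies = [0.8, 0.9, 1.1, 1.2, 1.0, 0.7, 1.4, 1.9, 2.6, 1.3,
             0.9, 1.0, 1.1, 1.2, 0.8, 1.5, 1.6, 1.0, 3.4, 1.1]

p95 = percentile(latencies, 95)
print(f"p95={p95:.1f}s budget={P95_BUDGET_S}s ok={p95 <= P95_BUDGET_S}")
```

The mean of these samples is about 1.3 s, which looks healthy, while the p95 of 2.6 s breaks the budget. Choosing p95 over the mean, and 2 s as the limit, are exactly the judgment calls described above.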

Security Testing

AI is great at running security scans and finding vulnerabilities, but security testing is about more than detection. It also requires understanding context and risk. Human expertise is needed to:

- Test within the organization’s unique risk profile and priorities.

- Test the real-world exploitability of identified security issues to understand the true impact.

- Design test scenarios for emerging attack patterns and advanced threats.

- Analyze complex attack chains and multi-layered vulnerabilities that require human interpretation to resolve.
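One small piece of that judgment can be sketched: re-ranking scanner findings by the organization's own risk profile. A minimal sketch, assuming hypothetical findings and multipliers; CVSS itself offers environmental metrics for this kind of adjustment, and a real team would use those or its own model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    title: str
    cvss: float             # base severity reported by the scanner (0.0-10.0)
    internet_facing: bool   # context a human supplies: is the asset exposed?
    handles_payments: bool  # context a human supplies: does it touch payment data?


def contextual_risk(f: Finding) -> float:
    """Weight scanner severity by organizational context.

    The 1.5x multipliers are hypothetical; a security team would set its own.
    """
    weight = 1.0
    if f.internet_facing:
        weight *= 1.5
    if f.handles_payments:
        weight *= 1.5
    return f.cvss * weight


findings = [
    Finding("Outdated TLS settings on internal admin tool", 7.5, False, False),
    Finding("Reflected XSS on checkout page", 6.1, True, True),
]
for f in sorted(findings, key=contextual_risk, reverse=True):
    print(f"{contextual_risk(f):.1f}  {f.title}")
```

Here the lower-severity XSS outranks the higher-severity internal finding because it sits on an exposed payment page. Whether that reordering is right is still a human decision.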

While AI’s role in security testing is increasing, accountability and judgment are still very much human. Security testing requires the kind of contextual and adaptive thinking that only humans can bring.

This Human-AI partnership is transforming performance and security testing—but the story doesn’t end here. In future posts, we’ll examine how this evolution is reshaping testing practices and what it means for QA teams.

Cultural and Accessibility Testing

When it comes to cultural and accessibility testing, human insight is key. AI can help with localization or do basic accessibility checks but can’t understand cultural nuances or lived experiences.

Human testers bring:

- Cultural awareness and sensitivity to design, content, and interactions.

- Accessibility testing through the eyes of diverse users, including those with disabilities.

- Localization that goes beyond linguistic translation to capture cultural context and relevance.

Creating inclusive and culturally relevant user experiences requires empathy, creativity, and an understanding of the many perspectives that shape human behavior.

AI can help, but it can’t replicate these human qualities, which are essential to delivering meaningful and fair solutions.
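The “basic accessibility checks” that automation handles well are rule-based. A minimal sketch of the WCAG 2.x contrast-ratio calculation (relative luminance of each color, then (L1 + 0.05) / (L2 + 0.05)), which a tool can apply to every text element on a page. It can tell you that #777777 text on white falls just short of the 4.5:1 AA minimum for normal text; it cannot tell you whether a screen-reader user can actually finish checkout.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    s = channel / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linearize(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color's luminance."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


AA_NORMAL_TEXT = 4.5  # WCAG 2.x level AA minimum for normal-size text

white = (255, 255, 255)
print(round(contrast_ratio((0, 0, 0), white), 1))                   # 21.0
print(contrast_ratio((0x77, 0x77, 0x77), white) >= AA_NORMAL_TEXT)  # False (about 4.48:1)
print(contrast_ratio((0x76, 0x76, 0x76), white) >= AA_NORMAL_TEXT)  # True (about 4.54:1)
```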

New Approaches to Testing

The testing landscape is changing fast, driven not just by AI but by new technologies, platforms, and methodologies. While AI can automate processes and do tasks at scale, the creative leap of inventing and adapting testing approaches is a uniquely human superpower.

QA professionals (and most technology-related roles) have been adapting for years—the key difference now is the speed of change.

Testers are leading (and will continue to lead) in:

- Inventing strategies for technologies and platforms that AI hasn’t encountered

- Building hybrid testing frameworks that leverage AI’s speed and accuracy alongside human intuition and context

- Identifying gaps and developing solutions to address them

- Managing risks associated with combining these new technologies

Cross-functional Collaboration in Testing

Testing thrives on effective coordination across teams, where human skills remain irreplaceable (and AI’s role is minimal).

Building relationships: Creating trust between development, operations, and business teams through meaningful interactions and shared goals. This includes establishing informal communication channels that accelerate problem-solving and minimize bottlenecks.

Knowledge sharing: Facilitating the exchange of testing insights and best practices across projects. This involves creating accessible documentation and organizing knowledge transfer sessions that bridge different technical backgrounds.

Coaching and mentorship: Providing hands-on guidance to team members while adapting to individual learning styles. This includes helping developers improve their unit testing and supporting analysts in writing testable requirements.

Technical translation: Converting complex testing concepts into clear business impacts and risks that resonate with different stakeholder groups. This creates shared understanding across technical and non-technical teams.

Managing priorities: Navigating competing demands between teams while maintaining positive relationships. This includes building consensus on testing approaches and mediating conflicts about resources and timelines.

Wrapping Up

As we conclude this post, one thing becomes clear: the future of QA roles is anything but static. Some positions may disappear as AI continues to automate routine tasks. Some roles might remain largely unchanged, though they’ll likely be fewer than we’d like to believe. The most significant shift will occur in roles that must evolve—and that’s probably the majority of them.

As AI reshapes the testing landscape, many of us will need to adapt, learn new skills, and even rethink how we approach our work. In the next post, we’ll take a closer look at this shifting landscape and start to make sense of what the future holds for QA professionals navigating this change.

Results from my comparison of ChatGPT, Copilot & Claude

Remember that experiment I mentioned at the beginning? Here are the results.

Total Test Case Count:

- ChatGPT: 21 cases

- Copilot: 18 cases

- Claude: 17 cases

Overall Analysis (based on Claude’s analysis of the 3 results)

Best Coverage Areas:

- Copilot: Excelled in UI/UX and basic functionality

- ChatGPT: Strongest on edge cases and security

- Claude: Best on system integrity and performance

These results highlight the varied nature of general-purpose AI tools. If you plan to add AI tools to your stack, consider exploring specialized tools like KaneAI. They’re designed specifically for QA use cases and may deliver even more tailored and impactful results.
