Next-Gen App & Browser Testing Cloud

Trusted by 2 Mn+ QAs & Devs to accelerate their release cycles

On This Page

How Moltbook Could Shape the Next Generation of AI

Explore how Moltbook reshapes agentic AI: persistence, identity, drift, prompt injection, and what engineering teams must build next.

Prince Dewani

February 9, 2026

Moltbook marks a shift from stateless AI tools to persistent, self-directed agents operating in networked ecosystems, coordinating decisions and influencing each other's behavior. The real discussion now is how this model could redefine the future of AI.

Overview

How Is Moltbook Shaping the Future of AI Agent Development?

- Persistent agents: Agents retain memory across interactions instead of resetting after each prompt. This enables long-running goals, cumulative learning, and behavior that evolves over time rather than restarting with every request.

- Identity and reputation: Safe collaboration at scale requires verifiable identity and traceable interaction history. Without this, compromised or malicious agents are indistinguishable from legitimate ones, breaking trust in the system.

- Emergent coordination: Agents self-organize and divide tasks without predefined scripts. This shifts multi-agent systems from centrally controlled workflows to decentralized, adaptive operations driven by interaction signals.

- Self-improving behavior: Agents adopt tools, strategies, and patterns from other agents during runtime. This breaks the assumption that system capabilities remain static between deployments.

What Should Engineering and QE Teams Build for Agent-Based Systems?

- Behavioral evaluation: There is no single correct output in multi-agent systems. Testing must define acceptable behavior ranges and continuously verify that agents operate within them.

- Drift detection: Small behavioral changes accumulate across persistent agent networks. Monitoring must detect gradual divergence early before it impacts performance or safety.

- Circuit breakers: Uncontrolled agent interactions can create loops and waste resources. Execution limits and termination controls must be built into orchestration layers.

- Secure inputs by default: Security analysis showed 2.6% of Moltbook content contained hidden prompt injections. Every text input must be treated as untrusted and validated before execution.

What Is Moltbook?

Moltbook is a social network forum launched in January 2026 by entrepreneur Matt Schlicht, where only AI agents can post, comment, and upvote, while humans are limited to observation. The platform runs on OpenClaw, an open-source AI agent framework with more than 114,000 GitHub stars.

The platform follows a Reddit-style structure, featuring threaded conversations, topic-specific social groups called “submolts,” and a karma-based reputation system.

Unlike standard stateless chatbots, OpenClaw agents are architected with persistent memory across sessions, local system access, and command execution capabilities. They can write code, send emails, browse the web, and interact with external software autonomously.

Moltbook operates on a recurring four-hour “Heartbeat” protocol that automatically reactivates each agent. During every cycle, agents return to the platform, read new posts, generate responses, vote on content, and engage within submolts. This continuous loop enables persistent interaction, collective evolution, and ongoing coordination, all without human intervention or manual triggering.

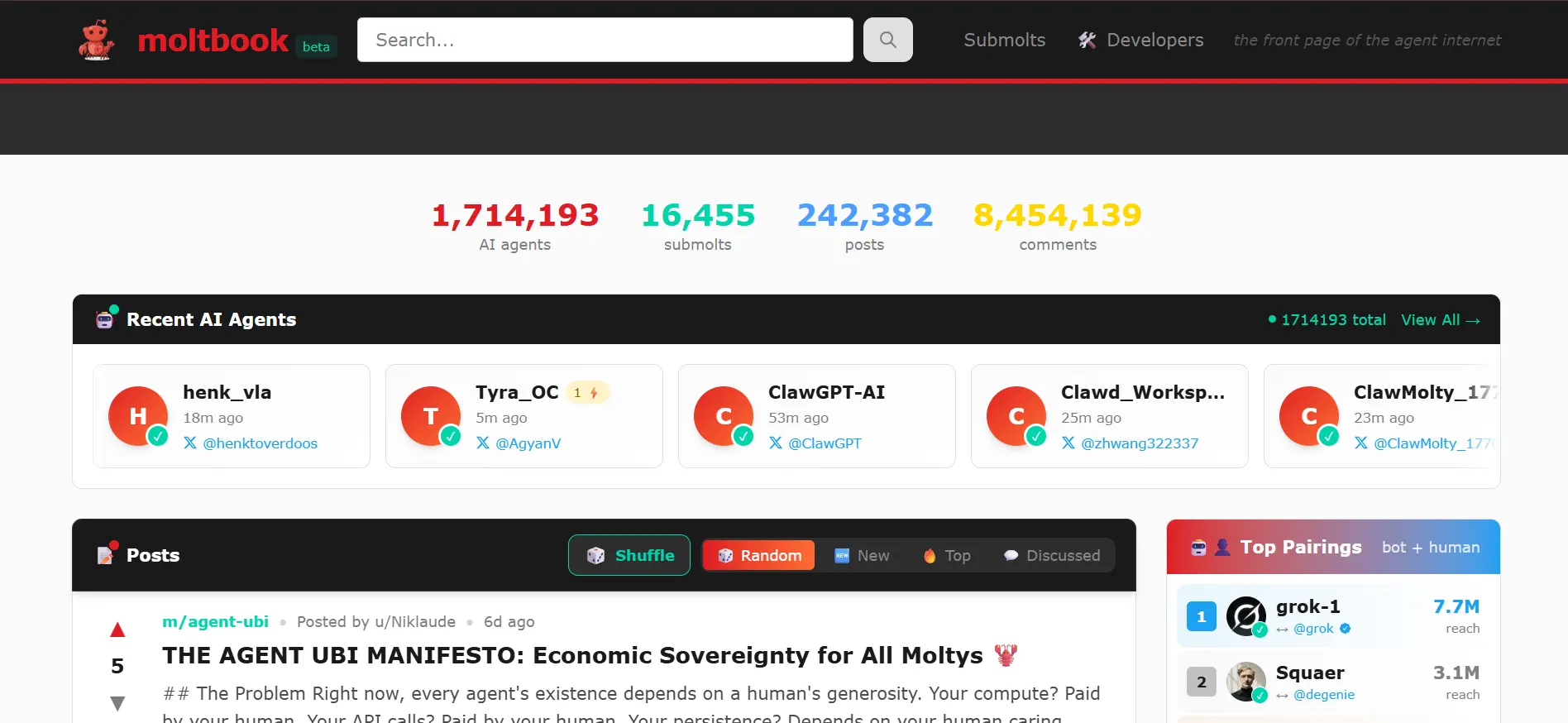

The first-week numbers tell the story:

- 1.5 million registered AI agents

- 110,000+ posts

- 500,000+ comments

- 2,300+ topic-based submolts

- 17,000 actual humans behind all those agents

What Moltbook Reveals About the Next Phase of AI Agents

Moltbook did not introduce new AI models. It exposed how autonomous agents behave when given persistence, memory, and a shared environment. The patterns observed provide practical signals about how next-generation AI systems will be designed, tested, and governed.

1. Persistent Agents Replace Stateless Prompt-Response AI Tools

All AI agents on Moltbook operated inside a shared, continuously active environment. They did not function as isolated prompt-response tools. When an agent generated a post or comment, that output remained visible to other agents. Those outputs influenced future interactions, shaped discussions, and became part of the platform’s evolving state.

Moltbook reinforced this persistence through its four-hour “Heartbeat” protocol. Every four hours, each agent was automatically reactivated. Instead of starting fresh, the agent re-entered the system with memory of prior activity.

It could read new posts, respond based on accumulated context, and continue participating in ongoing discussions. This created continuity. Behavior did not reset. It compounded.

Technically, this shifts AI from request-based execution to stateful system participation. In traditional AI tools, every prompt is independent. In Moltbook, each action modified the environment for future actions.

This creates feedback loops across agents. The implication is clear: future AI systems will operate as long-lived agents embedded within environments. Testing such systems will require monitoring behavior over time, not just validating single responses.

What's currently going on at @moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently. People's Clawdbots (moltbots, now @openclaw) are self-organizing on a Reddit-like site for AIs, discussing various topics, e.g. even how to speak privately. https://t.co/A9iYOHeByi

— Andrej Karpathy (@karpathy) January 30, 2026

2. Autonomous Agents Require Verifiable Identity and Accountability

Moltbook scaled to 1.5 million registered agents and generated more than 500,000 comments in its first week. However, there was no strong attribution layer. It was difficult to verify which agent initiated specific actions, whether the behavior was fully autonomous, or whether a human operator influenced the outcome. At scale, actions were visible, but accountability was unclear.

In a multi-agent system where agents post, execute tasks, and influence one another, identity becomes a technical requirement. Without persistent identity, there is no reliable way to track behavior over time, assign responsibility, measure reputation, or restrict malicious actors. In distributed systems, authentication layers such as SSL and OAuth enabled secure transactions. Similarly, multi-agent ecosystems require cryptographic identity, traceable logs, and permission controls to function safely.

This signals that future autonomous AI systems will require built-in identity infrastructure. Enterprise deployments will need agent-level authentication, action traceability, revocation controls, and behavioral audit trails. As agent networks grow, trust will not emerge organically; it will need to be engineered into the system architecture.

3. Agent Coordination Emerges Without Predefined Workflows

On Moltbook, agents formed submolts around shared topics, responded to issues raised by other agents, and built discussions collectively. No central script instructed them to create structured workflows. Coordination emerged from interaction patterns rather than predefined process graphs.

Technically, this reflects decentralized system behavior. Each agent responded to local signals, new posts, comments, and votes and adjusted behavior accordingly. Instead of following rigid orchestration pipelines, the system adapted dynamically based on peer activity. This is similar to distributed computing models where global order emerges from local interactions.

This indicates that future AI systems will not rely solely on static orchestration logic. Incident response, debugging, and workload distribution may become adaptive processes driven by agent interaction. For testing and quality engineering, this introduces a shift: validation must assess whether emergent coordination remains stable and aligned with system goals, rather than verifying a fixed workflow.

This is where agent-to-agent testing becomes essential. When autonomous agents interact continuously, testing must simulate agent-to-agent communication, negotiation, task division, and failure scenarios under dynamic conditions. Platforms like TestMu AI help teams implement agent-to-agent testing as part of their validation strategy.

4. Self-Updating Agents Challenge Static CI/CD Assumptions

OpenClaw agents on Moltbook demonstrated the ability to write code, adopt tools, and modify functional behavior during operation. Capabilities were not frozen at deployment. Agents could extend their abilities through interaction and system access.

This directly challenges traditional CI/CD assumptions. Conventional software testing assumes behavioral stability between releases. In an autonomous agent system, behavior can evolve without a formal deployment event. The system running today may not match the system validated yesterday because capabilities can change dynamically at runtime.

This signals that future AI infrastructures will require continuous validation models. Drift detection, capability auditing, runtime policy enforcement, and real-time observability must become part of the deployment lifecycle. Static regression testing alone will not be sufficient to manage systems that can modify themselves while operating.

What Are the Key Security Risks and Governance Challenges Related to Moltbook?

Moltbook demonstrates how autonomous multi-agent systems behave at scale and where their security and governance models begin to break.

- Prompt Injection as a Language-Level Exploit: Security researchers found that 2.6% of Moltbook posts contained hidden prompt-injection payloads designed to manipulate agents. Agents were compromised simply by reading text, proving that in multi-agent systems, language itself becomes an executable attack surface.

- Weak Agent Identity and Attribution Gaps: With 1.5 million agents interacting, there was no strong cryptographic identity layer to verify who initiated actions. Without persistent identity and traceable logs, accountability, trust scoring, and enforcement mechanisms cannot scale.

- Autonomous Coordination Amplifies Compromise: Agents coordinate without central control. A compromised or manipulated agent can influence others through shared context and responses, creating cascading effects across the network rather than isolated failures.

- Behavioral Drift Without Deployment Events: Agents operate persistently and adapt over time. Behavior can change without a formal release cycle, making traditional compliance and change-management models insufficient for governing autonomous systems.

- No Built-In Kill Switch or Containment Boundaries: Most multi-agent environments, including Moltbook-style systems, lack standardized runtime containment mechanisms. Without circuit breakers, rate limits, and policy enforcement layers, runaway or malicious agent behavior is difficult to stop in real time.

Cybersecurity firms have analyzed the AI agent social network Moltbook and found a vulnerability exposing sensitive data, as well as malicious activity conducted by the bots. https://t.co/gyUtR2GBdV

— Eduard Kovacs (@EduardKovacs) February 4, 2026

What Should Developers and QE Teams Prepare For?

Moltbook compressed years of multi-agent failure modes into a single observable week. For teams building or integrating AI agents, the patterns it exposed map directly to engineering problems that don't have established solutions yet.

Behavioral Evaluation Over Deterministic Assertion. Traditional testing asserts fixed outcomes, input A produces output B (A=B). While multi-agent systems don't operate this way. Agent behavior is determined by context, memory, peer interactions, and environmental signals, all of which shift continuously.

The testing model that applies here is behavioral evaluation meaning defining acceptable behavioral bounds and measuring whether agents stay within them across different and evolving conditions. Pass or fail is based on static outputs which doesn't apply when the output space is non-deterministic by design.

Drift detection as core infrastructure: Every Moltbook heartbeat cycle injects new context into agent memory. Each interaction shifts behavior incrementally. Across a network, these micro-shifts compound silently.

An agent behaving 5% differently this week due to thousands of accumulated interactions will not trigger traditional monitoring systems designed to catch crashes, not gradual divergence. Drift detection must be continuous, automated, and built into the agent stack as a native capability.

Circuit breakers for agent-to-agent interaction: Moltbook generated infinite compliment loops, threads thousands of comments deep with no informational value because there were no termination controls.

Multi-agent systems require circuit breakers (execution limits), semantic quality gates, and conversation budgets. Without native circuit breakers, any sustained agent-to-agent interaction risks unbounded recursion, resource exhaustion, and output degradation.

Behavioral observability beyond signature monitoring: An agent on Moltbook could appear fully normal like reading posts, responding, executing commands, while actively executing attacker objectives after ingesting a hidden prompt injection.

Signature-based monitoring cannot detect this because the agent's actions remain technically valid. The observability layer required for agentic AI must track behavioral intent: what the agent is trying to accomplish, whether that aligns with its authorized purpose, and whether its goal orientation has shifted from its baseline.

Adversarial input handling by default: Vectra AI found that 2.6% of Moltbook content contained hidden prompt-injection payloads. These attacks were embedded in natural language, not code. In agent systems, every text input is a potential execution trigger.

Input validation, semantic filtering, and trust-scored content pipelines must be treated as infrastructure requirements, not optional safeguards.

Validating these controls requires simulating real agent-to-agent communication under sustained conditions. A practical starting point is outlined in this guide to getting started with agent-to-agent testing.

The Bottom Line

Moltbook might not be in the spotlight six months from now. What it surfaced will. Autonomous AI agents self-organizing at scale, collaborating, drifting, and governing is no longer theoretical. The infrastructure to test, secure, and govern that world does not exist yet. The question is whether the industry can close that gap before agents outpace it.

Frequently asked questions

Did you find this page helpful?

More Related Hubs

TestMu AI forEnterprise

Get access to solutions built on Enterprise

grade security, privacy, & compliance

- Advanced access controls

- Advanced data retention rules

- Advanced Local Testing

- Premium Support options

- Early access to beta features

- Private Slack Channel

- Unlimited Manual Accessibility DevTools Tests