TestMu AI (Formerly LambdaTest) Recognized as a Strong Performer in The Forrester Wave™: Autonomous Testing Platforms, Q4 2025

TestMu AI earns Strong Performer recognition in The Forrester Wave™: Autonomous Testing Platforms, Q4 2025.

Ninad Pathak

February 20, 2026

We're excited to announce that TestMu AI has been recognized as a Strong Performer in The Forrester Wave™: Autonomous Testing Platforms, Q4 2025.

This independent evaluation assessed 15 vendors across 25 criteria, providing development and QA teams with an objective framework for comparing autonomous testing platforms.

The Forrester Wave Evaluation Framework

Forrester scored each vendor on two weighted dimensions, current offering and strategy, and also considered customer feedback, to help buyers compare options:

- Current Offering: This dimension assessed platform capabilities available today. Forrester examined operating environment support across browsers and devices, test authoring functionality, AI-powered automation features, autonomous testing capabilities, and the ability to test AI systems themselves. The evaluation covered both functional testing capabilities and non-functional areas such as performance testing and reporting.

- Strategy: This dimension evaluated each vendor's vision for autonomous testing, innovation investments, roadmap clarity, partner ecosystem strength, pricing model flexibility, and community engagement.

- Customer Feedback: Forrester interviewed up to three reference customers and considered feedback from previous research.

Based on the current offering and strategy scores, vendors were categorized as Leaders, Strong Performers, or Contenders.

What Our Recognition as a Strong Performer Means To You

Taken as a group, the Strong Performers in this report appear to have earned that placement through solid capabilities across core testing requirements, with room for continued development in emerging areas.

In our opinion, for teams evaluating platforms, this means TestMu AI delivers on fundamental autonomous testing while actively building more robust and comprehensive functionality.

1. AI Agent Testing Systems

Functional testing cannot adequately validate AI behavior. You can verify that an API returns a 200 status code, but you cannot assess whether an AI-generated response is accurate, relevant, or free from hallucinations.

TestMu AI addresses this with the world's first Agent-to-Agent (A2A) Testing framework that measures AI quality across multiple dimensions. We provide metrics for accuracy scoring, intent recognition, and hallucination detection with configurable thresholds.

Teams establish acceptable performance parameters and receive quantifiable feedback when AI components deviate from expected behavior.

This becomes particularly valuable when validating RAG (retrieval-augmented generation) pipelines, where you need to verify that the system retrieves relevant information, generates appropriate responses, and maintains factual accuracy throughout the interaction.
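To make the idea of configurable thresholds concrete, here is a minimal Python sketch of threshold gating over per-metric scores. It assumes the scores have already been produced by some evaluator and normalized to the 0.0 to 1.0 range; the `MetricResult` class, the `evaluate` function, and the metric names are hypothetical and do not reflect TestMu AI's A2A framework or its API. For a metric such as hallucination rate, lower is better, so its threshold acts as a ceiling rather than a floor.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class MetricResult:
    """One quality metric for an AI response (hypothetical structure)."""
    name: str
    score: float              # assumed normalized to 0.0-1.0
    threshold: float          # a floor if higher_is_better, otherwise a ceiling
    higher_is_better: bool = True

    @property
    def passed(self) -> bool:
        if self.higher_is_better:
            return self.score >= self.threshold
        return self.score <= self.threshold


def evaluate(results: List[MetricResult]) -> Tuple[bool, List[MetricResult]]:
    """Return (all_passed, failing_metrics) so callers get actionable detail."""
    failures = [r for r in results if not r.passed]
    return not failures, failures


results = [
    MetricResult("accuracy", score=0.91, threshold=0.85),
    MetricResult("intent_recognition", score=0.78, threshold=0.80),
    MetricResult("hallucination_rate", score=0.02, threshold=0.05, higher_is_better=False),
]
ok, failures = evaluate(results)
print(ok)                          # False
print([f.name for f in failures])  # ['intent_recognition']
```

Returning the failing metrics rather than a bare boolean is what turns a threshold into quantifiable feedback: a pipeline step could report exactly which dimension drifted and by how much.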

2. AI-Driven Test Data Generation

Comprehensive test coverage requires diverse data sets covering edge cases, boundary conditions, and realistic user scenarios. Creating this data by hand gets much harder for enterprise applications, because each additional user role, permission level, and workflow variation multiplies the number of combinations to cover.

Our test data generator uses large language models and multimodal input to create realistic test scenarios during authoring. When testing an enterprise application, the platform generates hundreds of variations covering different permissions, data states, and interaction patterns without manual data entry. This maintains compliance with privacy requirements while providing the variety needed for thorough validation.
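The sketch below illustrates why variation counts climb so quickly. TestMu AI's generator uses large language models; this example swaps in plain combinatorial enumeration (a Cartesian product via `itertools.product`) purely to show how a handful of dimensions multiply into many scenarios. The dimension names, values, and the `generate_scenarios` function are hypothetical placeholders, and every value is synthetic, so no production records are involved.

```python
from itertools import product
from typing import Dict, Iterator

# Hypothetical dimensions for an enterprise app; values are synthetic placeholders.
ROLES = ["admin", "manager", "viewer"]
PERMISSIONS = ["none", "read", "read_write"]
DATA_STATES = ["empty", "single_record", "at_page_limit", "archived"]
ACTIONS = ["create", "edit", "delete"]


def generate_scenarios() -> Iterator[Dict[str, str]]:
    """Yield one scenario per combination of the dimensions above."""
    for i, (role, permission, state, action) in enumerate(
        product(ROLES, PERMISSIONS, DATA_STATES, ACTIONS)
    ):
        yield {
            "user": f"synthetic-user-{i}@example.com",  # never a real account
            "role": role,
            "permission": permission,
            "data_state": state,
            "action": action,
        }


scenarios = list(generate_scenarios())
print(len(scenarios))  # 108 (3 roles x 3 permissions x 4 data states x 3 actions)
```

With only four small dimensions this already yields 108 scenarios. Real suites typically prune such a space (for example with pairwise selection) rather than run every combination.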

3. Community Ecosystem

We've built multichannel engagement, including active forums, recognition programs, and structured feedback mechanisms. Access to shared knowledge, reusable test patterns, and direct support channels reduces implementation time and provides ongoing value as teams scale their testing practices.

Source: Raiffeisen Bank International

TestMu AI’s Autonomous Testing Capabilities For Enterprise Scale

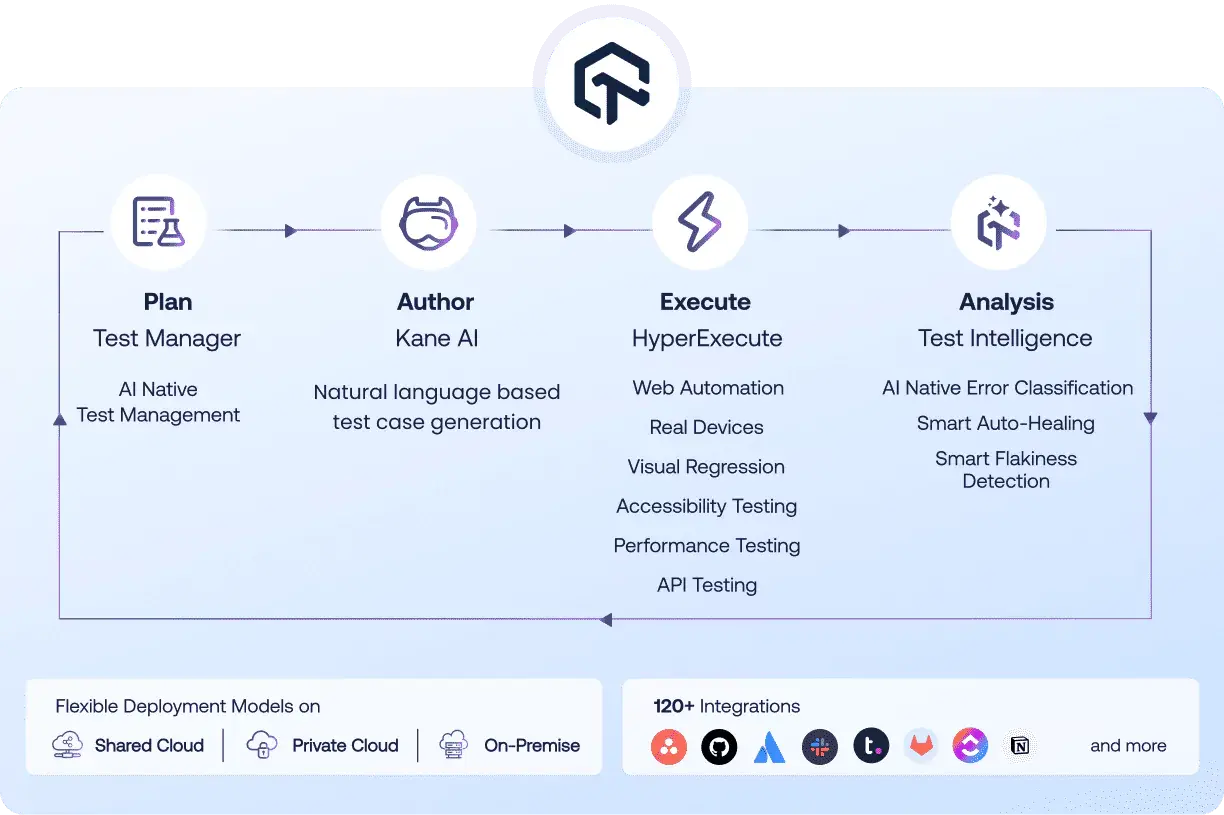

TestMu AI operates as a GenAI-native quality engineering platform with four integrated stages that cover the complete testing lifecycle.

Plan: Test Manager

Test Manager provides AI-native test management capabilities that generate structured test cases and scenarios from multiple input sources, including JIRA tickets, spreadsheets, and images.

We offer centralized test management with real-time JIRA-integrated dashboards displaying test metrics and status across your entire suite. Teams can track test coverage, identify gaps, and prioritize testing efforts based on risk and business impact.

Execute: HyperExecute

HyperExecute provides high-performance test execution across 3,000+ browser and OS combinations and 10,000+ real devices.

The platform handles web automation, real device testing, visual regression, accessibility testing, performance testing, and API testing through a single execution engine. Built-in capabilities include intelligent test distribution, parallelization, network traffic capture, and detailed reporting.

Analysis: Test Intelligence

Test Intelligence applies AI-native error classification to automatically categorize failures and identify root causes. Smart Auto-Healing detects locator failures and applies valid alternatives during execution, reducing manual maintenance.
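Conceptually, locator healing means trying an ordered list of alternative locators when the primary one no longer matches. The following is a simplified Python sketch of that idea, not TestMu AI's implementation: the page is modeled as a plain dictionary from locator strings to elements so the example runs without a browser, and the `HealingLocator` class and the locator strings are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class HealingLocator:
    """Hypothetical locator with ordered fallbacks (illustration only)."""
    primary: str
    fallbacks: List[str]
    healed_with: Optional[str] = None  # set when a fallback, not the primary, matched

    def resolve(self, page: Dict[str, str]) -> str:
        # Try the primary locator first, then each fallback in order.
        for candidate in [self.primary, *self.fallbacks]:
            if candidate in page:
                if candidate != self.primary:
                    self.healed_with = candidate  # record the healing for later review
                return page[candidate]
        raise LookupError(
            f"No locator matched: {self.primary!r} or fallbacks {self.fallbacks!r}"
        )


# After a redesign, the element's id changed but its data-testid survived.
page = {
    "[data-testid='checkout']": "<button>Checkout</button>",
    "#btn-checkout-v2": "<button>Checkout</button>",
}
checkout = HealingLocator("#btn-checkout", ["[data-testid='checkout']", "text=Checkout"])
element = checkout.resolve(page)
print(checkout.healed_with)  # [data-testid='checkout']
```

Recording which fallback matched (`healed_with`) matters as much as the fallback itself: it lets someone update the primary locator instead of silently depending on the alternative.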

Smart Flakiness Detection identifies unreliable tests and provides actionable recommendations for improving test stability. The platform consolidates results from parallel executions and tracks trends to detect emerging issues.
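One common heuristic for flakiness, shown here as an assumption rather than TestMu AI's actual method, is to flag a test that both passed and failed on the same code revision: the code did not change, so the differing outcome points to nondeterminism rather than a regression. The `Run` record and the `find_flaky` function below are hypothetical names for illustration.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, NamedTuple, Set, Tuple


class Run(NamedTuple):
    test: str
    commit: str   # code revision the test ran against
    passed: bool


def find_flaky(runs: Iterable[Run]) -> Set[str]:
    """Flag tests that both passed and failed on the same commit."""
    outcomes: DefaultDict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test, run.commit)].add(run.passed)
    # Seeing both True and False for one (test, commit) pair means the
    # outcome changed while the code did not.
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}


runs = [
    Run("test_login", "a1b2c3", True),
    Run("test_login", "a1b2c3", False),   # same code, different result: flaky
    Run("test_search", "a1b2c3", False),
    Run("test_search", "a1b2c3", False),  # fails consistently: likely a real bug
    Run("test_cart", "a1b2c3", True),
    Run("test_cart", "d4e5f6", False),    # changed across commits: possible regression
]
print(sorted(find_flaky(runs)))  # ['test_login']
```

Grouping by (test, commit) is the key design choice: a test that fails consistently, or that changes outcome only between commits, is not reported as flaky.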

Strategic Vision and Direction

We've expanded from test execution infrastructure into test authoring and automation generation. This addresses a fundamental requirement: teams need platforms capable of both generating intelligent test cases and executing them efficiently at scale.

Recent strategic investments include agent-based architecture for autonomous test planning and maintenance, Model Context Protocol (MCP) integration for structured AI agent communication, and multimodal test generation from diverse input types, including text, images, PDFs, and audio.

Evaluating Autonomous Testing Platforms

Organizations evaluating autonomous testing platforms should assess several factors when determining fit.

- Current Technical Environment: Evaluate whether the platform supports your existing frameworks (Selenium, Appium, Playwright, Cypress), programming languages, and CI/CD tools. Integration friction increases implementation time and reduces adoption rates.

- Testing Scope: Consider requirements for web applications, mobile applications, API testing, performance testing, and visual regression testing. Different platforms provide varying levels of support across these dimensions.

- Team Composition: Assess whether your team includes dedicated QA engineers, developers who write tests, or both. Platforms optimized for low-code or no-code authoring provide different value than those designed for code-first approaches.

- AI Application Testing: If you're building applications that incorporate AI components, evaluate whether the platform provides specific capabilities for validating AI behavior beyond functional testing.

- Scale Requirements: Consider execution volume, parallelization needs, and geographic distribution requirements. Platforms differ significantly in how they handle high-volume execution and global test distribution.

Download The Report

The complete Forrester Wave report provides detailed scoring across all evaluation criteria, so organizations can compare vendors based on their specific requirements.

Access the full report to review how TestMu AI scores across individual capabilities.

Book a demo to join over 15,000 enterprises across 130 countries that currently use TestMu AI to run their tests.

Forrester does not endorse any company, product, brand, or service included in its research publications and does not advise any person to select the products or services of any company or brand based on the ratings included in such publications. Information is based on the best available resources. Opinions reflect judgment at the time and are subject to change. For more information, read about Forrester’s objectivity here.
