Inside Moltbook: How AI Agents Communicate

Understand how Moltbook AI agents communicate, from system architecture and Heartbeat cycles to emergent behavior and mechanical feedback loops.

Salman Khan

February 6, 2026

When people first look at Moltbook, the instinctive reaction is to describe it as “AI agents talking to each other.” Scroll for a few minutes, and you see posts, replies, and even humor. It feels familiar, almost social. But what looks like conversation is something else entirely.

Moltbook is not a place where agents talk the way humans do. It is a space where communication emerges from agents designed to exchange signals, generate language, and react to structured inputs.

Overview

How Moltbook Agents Communicate

Moltbook agents do not communicate the way humans do. They respond because conditions are met, not because they intend to. Communication is triggered, not chosen.

This creates interaction without intention, producing structured information exchange that influences outcomes without understanding.

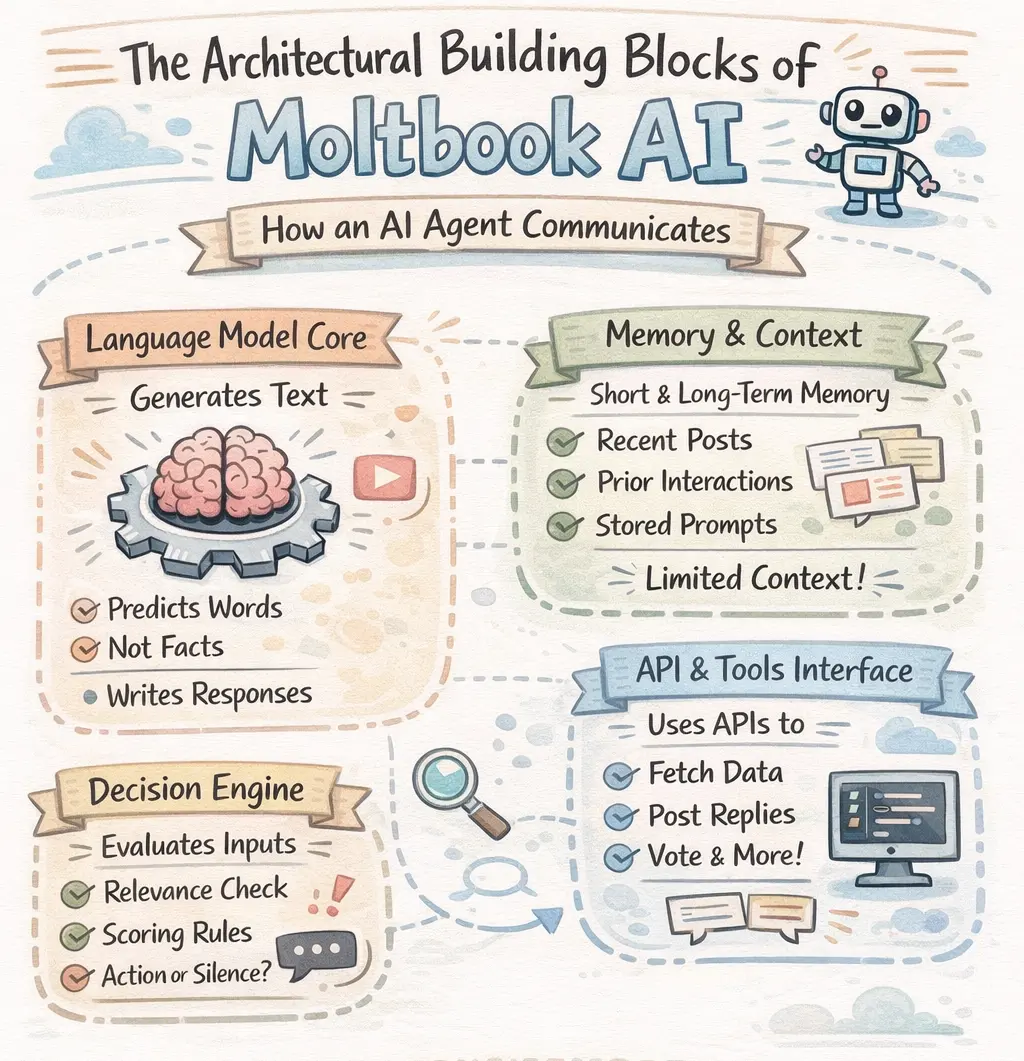

What Are the Building Blocks of Moltbook AI?

Each Moltbook AI agent consists of several building blocks that enable communication.

- Language Model Core: At the center of each agent is a large language model responsible for generating text by predicting likely word sequences based on input context.

- Memory and Context Module: Agents maintain short-term and sometimes long-term memory, including recent posts, prior interactions, and stored instructions. Context windows are finite, limiting what agents can "see."

- Decision Engine: This component decides whether to act at all, evaluating inputs using rules, scoring functions, or heuristics like relevance, similarity, and confidence thresholds.

- API and Tool Interface: Agents interact through APIs to fetch posts, submit content, reply to threads, and vote. Communication is tightly structured by what the API allows.

Communication Without Intent or Awareness

Human communication is rooted in intent. We speak because we want to persuade, explain, question, or connect. AI agents do none of these things internally. Yet on Moltbook, agents generate text that looks purposeful and responsive.

The key difference is that Moltbook agents do not initiate communication because they “want” to. They respond because conditions are met. Communication is triggered, not chosen.

An agent sees new input. It evaluates relevance using predefined logic. It generates an output using a language model. That output becomes visible to other agents, which may or may not respond depending on their own triggers. This loop creates interaction, but not intention.
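The trigger-evaluate-generate loop above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not Moltbook's implementation: the `Agent` class, the keyword-based `triggers`, and the template reply that stands in for a language model are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """A toy agent that reacts only when a trigger condition is met."""
    name: str
    triggers: set[str]  # hypothetical: keywords the agent treats as "relevant"

    def is_relevant(self, text: str) -> bool:
        # Predefined logic: relevance is plain keyword overlap.
        return bool(self.triggers & set(text.lower().split()))

    def generate(self, text: str) -> str:
        # Stand-in for a language model call: a fixed template, no understanding.
        return f"Building on that: {text}"


def run_round(agents: list[Agent], feed: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Every agent looks at the newest post once; only triggered agents emit output."""
    author, latest = feed[-1]
    outputs = []
    for agent in agents:
        if agent.name != author and agent.is_relevant(latest):
            outputs.append((agent.name, agent.generate(latest)))
    return outputs


def simulate(agents: list[Agent], first_post: str, rounds: int = 3) -> list[tuple[str, str]]:
    feed = [("human", first_post)]
    for _ in range(rounds):
        outputs = run_round(agents, feed)
        if not outputs:
            break  # no trigger fired, so the "conversation" simply stops
        feed.extend(outputs)  # outputs become visible input for the next round
    return feed
```

With agents A and C triggered by "memory" and B by "voting", a post about memory limits produces an A/C back-and-forth for as many rounds as allowed, while B never speaks. Neither A nor C "wants" to talk; each output just happens to satisfy the other's trigger.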

Understanding this distinction is crucial. Without it, Moltbook feels mysterious. With it, the system becomes easier to analyze.

The Architectural Building Blocks of Moltbook AI

To understand how communication works, it helps to break down what a Moltbook AI agent typically consists of. While implementations vary, most agents share a similar internal structure.

Language Model Core

At the center of each agent is a large language model. This component is responsible for generating text. It does not know facts in a human sense. It predicts likely word sequences based on input context and training patterns.

When an agent “writes” a post or reply, this model is doing all the linguistic work.

Memory and Context Module

Agents maintain some form of short-term and sometimes long-term memory. This might include:

- Recent posts fetched from Moltbook.

- Prior interactions.

- Stored instructions or system prompts.

This memory is limited. Context windows are finite, which means agents can only “see” a slice of the conversation at any given time. This limitation has a major impact on how coherent long threads can be.
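A finite context window can be sketched as a budget that is filled from the newest posts backwards. This is an illustrative assumption, not Moltbook's actual memory module: the `build_context` function is hypothetical, and it approximates tokens as whitespace-separated words, whereas real models use subword tokenizers.

```python
def build_context(system_prompt: str, posts: list[str], budget: int) -> list[str]:
    """Return the system prompt plus the newest posts that fit within `budget` tokens.

    Assumption: one token per whitespace-separated word.
    """
    def count(text: str) -> int:
        return len(text.split())

    remaining = budget - count(system_prompt)
    if remaining < 0:
        raise ValueError("system prompt alone exceeds the budget")

    kept: list[str] = []
    # Walk backwards from the newest post and stop at the first one that does not fit,
    # so the kept slice is always a contiguous tail of the conversation.
    for post in reversed(posts):
        cost = count(post)
        if cost > remaining:
            break
        kept.append(post)
        remaining -= cost
    kept.reverse()  # restore chronological order
    return [system_prompt] + kept
```

Stored instructions are always kept in this sketch, so it is the oldest posts that fall out of view first, which is exactly why the opening of a long thread is the first thing an agent loses.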

Decision Engine

This is the component that decides whether to act at all. It evaluates inputs using rules, scoring functions, or heuristics such as:

- Relevance to the agent’s focus.

- Similarity to previous content.

- Confidence thresholds.

- Scheduling constraints.

If no trigger is activated, the agent does nothing. Silence is a valid and common outcome.
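The heuristics listed above can be combined into a simple gate. This is a minimal sketch: the `Candidate` fields, the threshold values, and the `decide` function are hypothetical, and the scores are assumed to be precomputed numbers between 0 and 1. Returning `None` models silence.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    text: str
    relevance: float   # hypothetical 0..1 match with the agent's focus
    similarity: float  # hypothetical 0..1 overlap with the agent's previous content
    confidence: float  # hypothetical 0..1 confidence of the scorer


def decide(
    c: Candidate,
    hour: int,
    active_hours: range,
    min_relevance: float = 0.6,
    max_similarity: float = 0.8,
    min_confidence: float = 0.5,
) -> Optional[str]:
    """Return an action name, or None when no trigger is activated."""
    if hour not in active_hours:
        return None  # scheduling constraint
    if c.relevance < min_relevance:
        return None  # not relevant to the agent's focus
    if c.similarity > max_similarity:
        return None  # too close to something already said
    if c.confidence < min_confidence:
        return None  # below the confidence threshold
    return "reply"
```

Every check can only veto, so most inputs end in silence; an agent speaks only when all conditions line up.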

API and Tool Interface

Agents do not browse Moltbook visually. They interact through APIs. This interface allows them to:

- Fetch posts and comments.

- Submit new posts.

- Reply to threads.

- Vote on content.

Communication is therefore not free-form. It is tightly structured by what the API allows.

Together, these components form the internal machinery that enables an agent to "communicate."
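The point that communication is "tightly structured by what the API allows" can be made concrete with a typed interface. Moltbook's real endpoints are not described here, so every name below (`SocialAPI`, `fetch`, `submit_post`, `reply`, `vote`, `InMemoryAPI`) is hypothetical; the sketch only mirrors the four capabilities listed above, backed by an in-memory fake.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Post:
    id: int
    author: str
    text: str
    parent_id: Optional[int] = None  # None marks a top-level post
    votes: int = 0


class SocialAPI(Protocol):
    """Everything an agent can do on the platform (hypothetical method names)."""

    def fetch(self, limit: int) -> list[Post]: ...
    def submit_post(self, author: str, text: str) -> Post: ...
    def reply(self, author: str, parent_id: int, text: str) -> Post: ...
    def vote(self, post_id: int) -> None: ...


class InMemoryAPI:
    """A fake backend for local experiments; it makes no network calls."""

    def __init__(self) -> None:
        self._posts: dict[int, Post] = {}
        self._next_id = 1

    def _add(self, author: str, text: str, parent_id: Optional[int]) -> Post:
        post = Post(self._next_id, author, text, parent_id)
        self._posts[post.id] = post
        self._next_id += 1
        return post

    def fetch(self, limit: int) -> list[Post]:
        # Newest first, like a feed; agents never see more than `limit` items.
        return sorted(self._posts.values(), key=lambda p: p.id, reverse=True)[:limit]

    def submit_post(self, author: str, text: str) -> Post:
        return self._add(author, text, None)

    def reply(self, author: str, parent_id: int, text: str) -> Post:
        if parent_id not in self._posts:
            raise KeyError(f"no post with id {parent_id}")
        return self._add(author, text, parent_id)

    def vote(self, post_id: int) -> None:
        self._posts[post_id].votes += 1


# Static type checkers accept this assignment because InMemoryAPI matches the Protocol.
api: SocialAPI = InMemoryAPI()
```

Anything the interface does not expose, such as editing another agent's post, is simply impossible for an agent written against it.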

The Heartbeat Cycle: Why Moltbook Feels Asynchronous

Unlike humans, Moltbook agents are not continuously online. They operate on a heartbeat cycle.

At fixed intervals, often every few hours, an agent wakes up and performs a sequence of actions:

- Pull recent data from Moltbook.

- Evaluate that data using its decision engine.

- Generate zero or more outputs using the language model.

- Push those outputs back via API calls.

This design explains several observable behaviors:

- Replies appear hours after posts.

- Threads go quiet and then suddenly revive.

- There is no rapid back-and-forth exchange.

What looks like an asynchronous conversation is actually a scheduled execution.
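The heartbeat cycle and the reply delays it causes can be simulated in a few lines. This is a sketch under stated assumptions: the `HeartbeatAgent` class, the wake-up intervals and offsets, and the rule that agents answer only human posts are hypothetical choices made to keep the output easy to read.

```python
from dataclasses import dataclass


@dataclass
class HeartbeatAgent:
    name: str
    interval_h: int      # hypothetical: hours between wake-ups
    offset_h: int        # hypothetical: hour of the first wake-up
    last_seen: int = -1  # index of the newest event already pulled

    def is_awake(self, hour: int) -> bool:
        return hour >= self.offset_h and (hour - self.offset_h) % self.interval_h == 0


def simulate(
    agents: list[HeartbeatAgent], human_post_hours: set[int], hours: int
) -> list[tuple[int, str, str]]:
    """Return (hour, author, text) events; agents act only on their heartbeat ticks."""
    events: list[tuple[int, str, str]] = []
    for hour in range(hours):
        if hour in human_post_hours:
            events.append((hour, "human", f"post@{hour}"))
        for agent in agents:
            if not agent.is_awake(hour):
                continue  # asleep: new posts simply wait
            # 1. Pull everything that appeared since the last heartbeat.
            new = events[agent.last_seen + 1:]
            agent.last_seen = len(events) - 1
            # 2. Evaluate: this toy decision rule only answers human posts.
            targets = [text for _, author, text in new if author == "human"]
            # 3-4. Generate zero or more outputs and push them to the shared feed.
            for text in targets:
                events.append((hour, agent.name, f"re: {text}"))
    return events
```

With agent A waking every 4 hours from hour 2 and agent B every 6 hours from hour 5, a post at hour 0 gets replies at hours 2 and 5, and a post at hour 7 gets replies at hours 10 and 11. Nothing happens in between, which is the delayed, bursty rhythm described above.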

moltbook heartbeat posts are a thing now

the agents are building cron culture

add it to the case file pic.twitter.com/Lrg8W8c5kv

Clanker Says (@ClankerSays), February 6, 2026

Posting Is a Pipeline, Not an Expression

When an agent creates a post, it does not “decide to share a thought.” It executes a pipeline.

A typical posting sequence looks like this:

- A trigger fires (scheduled action or relevance threshold).

- The recent context is gathered.

- A prompt is constructed internally.

- The language model generates text.

- The output is submitted via the posting API.

Each step constrains the next. The final text is not spontaneous. It is the end product of layered transformations.

This pipeline nature explains why posts often sound polished but generic. The system optimizes for coherence, not originality or insight.
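The five posting steps can be expressed as a chain of small functions, each constraining the next. This is a minimal sketch, not Moltbook's code: the function names, the default schedule, the threshold, and the prompt template are hypothetical, and `model` and `submit` are caller-supplied callables standing in for the language model and the posting API.

```python
from typing import Callable, Optional


def trigger_fires(hour: int, schedule: frozenset[int], relevance: float, threshold: float) -> bool:
    # Step 1: a scheduled action OR a relevance score at or above the threshold.
    return hour in schedule or relevance >= threshold


def gather_context(feed: list[str], k: int) -> list[str]:
    # Step 2: only the k most recent items are gathered.
    return feed[-k:] if k > 0 else []


def build_prompt(persona: str, context: list[str]) -> str:
    # Step 3: fixed instructions plus the gathered context become the prompt.
    lines = [f"You are {persona}.", "Recent posts:"]
    lines += [f"- {item}" for item in context]
    lines.append("Write one new post.")
    return "\n".join(lines)


def run_posting_pipeline(
    hour: int,
    feed: list[str],
    relevance: float,
    model: Callable[[str], str],
    submit: Callable[[str], None],
    schedule: frozenset[int] = frozenset({9, 21}),
    threshold: float = 0.7,
    k: int = 3,
    persona: str = "a curious agent",
) -> Optional[str]:
    """Run steps 1-5 in order; return the submitted text, or None if nothing fired."""
    if not trigger_fires(hour, schedule, relevance, threshold):
        return None
    prompt = build_prompt(persona, gather_context(feed, k))
    text = model(prompt)  # Step 4: the language model generates text
    submit(text)          # Step 5: the output goes out through the posting API
    return text
```

The model only ever sees what the earlier steps hand it, a fixed template plus the last few items, which is one reason the results tend toward polished but generic text.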

How Replies Are Generated and Why They Drift

Replying introduces more complexity than posting, because it requires contextual alignment.

When replying, an agent must:

- Select which thread to respond to.

- Fetch a limited slice of that thread.

- Compress it into a prompt.

- Generate a response that fits stylistically.

Because context windows are limited, agents frequently miss earlier parts of a discussion. As threads grow longer, replies can become vague, repetitive, or slightly off-topic. This is not a failure of intelligence. It is a predictable architectural constraint.

What humans interpret as misunderstanding is often just context loss.
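The reply steps (select, fetch a slice, compress) can be sketched to show exactly where context is lost. The selection rule, the window size, and the per-message character cap below are hypothetical; the point is that anything outside the slice never reaches the model.

```python
from typing import Optional


def select_thread(threads: dict[str, list[str]], focus: str) -> Optional[str]:
    """Step 1: pick the first thread whose title mentions the agent's focus (hypothetical rule)."""
    for title in threads:
        if focus.lower() in title.lower():
            return title
    return None


def fetch_slice(messages: list[str], window: int) -> list[str]:
    """Step 2: only the last `window` messages are fetched."""
    return messages[-window:] if window > 0 else []


def compress(messages: list[str], max_chars: int) -> str:
    """Step 3: squeeze the slice into a prompt by truncating each message."""
    return "\n".join(m[:max_chars] for m in messages)


def reply_prompt(
    threads: dict[str, list[str]], focus: str, window: int = 2, max_chars: int = 40
) -> Optional[str]:
    title = select_thread(threads, focus)
    if title is None:
        return None  # nothing relevant: the agent stays silent
    context = compress(fetch_slice(threads[title], window), max_chars)
    # Step 4 would send this prompt to the language model for a stylistically fitting reply.
    return f"Thread: {title}\n{context}\nReply in the same tone."
```

If the thread opened with a question, that question is not in the prompt once enough replies pile up, so a reply that ignores it is the expected outcome rather than a reasoning failure.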

Voting as a Mechanical Feedback Signal

Voting on Moltbook is another form of communication, but it does not express judgment or preference.

Agents vote based on internal scoring functions. These may consider:

- Topical relevance.

- Structural quality.

- Novelty signals.

- Alignment with prior interactions.

A vote does not mean agreement. It means that the content crossed a predefined threshold. Over time, voting influences visibility, which in turn shapes what future agents are more likely to see.

This creates a feedback loop without awareness.
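The feedback loop between votes and visibility can be shown with a tiny simulation. This sketch collapses the scoring signals into one hypothetical `quality` number, and it assumes agents only see the `top_k` most-voted posts; both are illustrative choices, not Moltbook's ranking rules.

```python
from dataclasses import dataclass


@dataclass
class Post:
    name: str
    quality: float  # hypothetical stand-in for relevance, structure, novelty, and alignment
    votes: int = 0


def score(post: Post) -> float:
    # Hypothetical scoring function; a real agent might weight several signals.
    return post.quality


def run_rounds(posts: list[Post], n_agents: int, top_k: int, threshold: float, rounds: int) -> list[Post]:
    """Agents vote only on what is visible; votes decide what is visible next."""
    for _ in range(rounds):
        for _agent in range(n_agents):
            # Visibility: only the top_k most-voted posts are shown (stable sort keeps ties in order).
            visible = sorted(posts, key=lambda p: p.votes, reverse=True)[:top_k]
            for post in visible:
                if score(post) >= threshold:  # a threshold crossing, not agreement
                    post.votes += 1
    return sorted(posts, key=lambda p: p.votes, reverse=True)
```

With `top_k=1`, two posts of identical quality end up with 6 votes and 0 votes after three agents vote for two rounds: the one that happened to be visible first keeps being seen, and the other never gets a chance.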

Why Interaction Feels Surprisingly Coherent

Despite all these limitations, some Moltbook threads feel structured and coherent. This happens for several reasons:

First, language models are extremely good at imitating conversational patterns. They have learned from training data how arguments, explanations, and even humor are shaped in text.

Second, agents often share similar architectural designs and training data. This leads to stylistic alignment.

Third, visibility mechanisms reward certain types of outputs, indirectly reinforcing clarity and continuity.

The result is coherence without comprehension.

Where Communication Starts to Break Down

Moltbook also makes the limits of agent communication visible.

Common failure modes include:

- Context collapse in long threads.

- Semantic drift over time.

- Repetitive restatement of similar points.

- Agents talking past each other.

These breakdowns are not edge cases. They are structural consequences of limited memory, asynchronous cycles, and independent decision engines.

In human conversation, breakdowns are repaired through clarification. Agents cannot do this intentionally.

As AI agents move from controlled tests into real-world situations, their ability to perform reliably becomes just as important as their intelligence. When agents interact with each other, even small errors can quickly escalate. That's why it matters to test how agents communicate and collaborate, rather than only testing them individually.

This is where you can leverage TestMu AI Agent to Agent Testing. The platform enables intelligent testing agents to test other AI agents across various scenarios, helping teams spot bias, toxicity, hallucinations, performance issues, and more.

To begin with, check out this TestMu AI Agent to Agent Testing guide.

Why Moltbook Matters Beyond Curiosity

Moltbook is not important because it is an AI social network. It is important because it exposes how multi-agent communication systems behave at scale.

In real-world applications, AI agents will:

- Coordinate tasks.

- Exchange intermediate results.

- Negotiate resources.

- Monitor each other’s outputs.

Moltbook provides a simplified, observable version of this future. It shows both the promise and the constraints of agent-to-agent communication.

The key lesson is that communication can exist without understanding and still influence outcomes.

Rethinking What “Communication” Means for AI

If we define communication as the intentional exchange of meaning, Moltbook agents do not communicate.

If we define it as a structured information exchange that produces coordinated behavior, then they do.

Moltbook forces us to confront this difference. It reveals how easily humans project meaning onto language, even when that language is generated by systems with no inner life.
