AI in Testing: Strategies for Promotion and Career Success [Testμ 2024]

In this session, Jason Arbon, CEO of Checkie.AI, shares insights on leveraging AI for career advancement and avoiding common pitfalls that could lead to increased complexity and difficulties in debugging.

TestMu AI

January 30, 2026

AI in software testing is a double-edged sword. Testers get promoted and earn respect when their testing is fast, efficient, creative, and effectively automates critical and complex areas while clearly communicating risk mitigation. However, testers who spend their time endlessly checking or automating every build for changes, errors, basic functionality, accessibility, privacy, security, and performance often don’t see the same career growth.

In this session of Testμ 2024, Jason Arbon, CEO of Checkie.AI, explores the impact of AI on software testing. He also shares insights on leveraging AI for career advancement and avoiding common pitfalls that could lead to increased complexity and difficulties in debugging.

If you couldn’t catch all the sessions live, don’t worry! You can access the recordings at your convenience by visiting the TestMu AI YouTube Channel.

Career Strategy and Opportunities in AI

Jason discussed career strategy in the context of the rapidly evolving AI landscape. He emphasized that job security is the foundational element of any career strategy, likening it to the base layer of Maslow’s hierarchy of needs. Keeping a job and staying employed should be the priority, especially when things are changing quickly. He advised individuals to think about and address this aspect of their careers proactively.

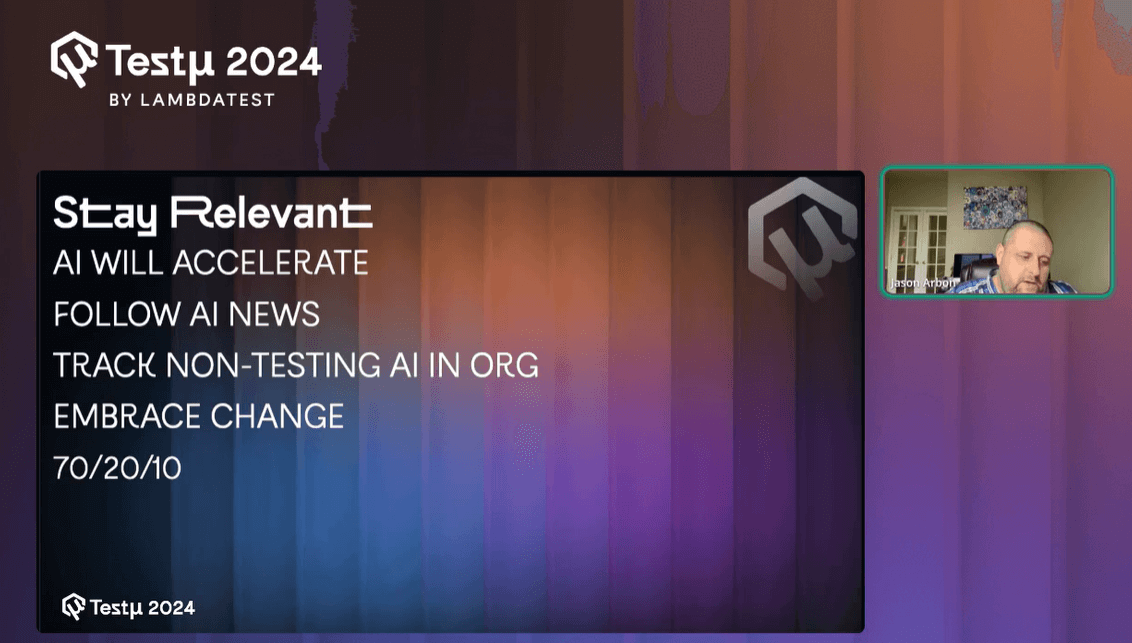

He also highlighted the importance of pursuing promotions and staying relevant in the face of accelerating AI advancements. He pointed out that AI is not just another technological revolution like mobile or cloud computing; it has a unique ability to accelerate its own development, meaning the rate of change will continue to increase. Staying relevant, therefore, requires continuous learning and adaptation.

Jason noted that maintaining relevance is not a one-time effort. Since AI is constantly evolving, professionals in the field must expect ongoing change and be prepared to adapt continuously. Jason explained that the rapid growth of AI will increase the demand for testing because AI helps developers write more code faster. This surge creates more work that needs to be tested, which will only grow as AI continues to advance.

How Generative AI Is Shaping Job Roles

Jason discussed disruption in the context of AI, emphasizing how Generative AI emulates human capabilities, which significantly impacts job roles and career strategies. He explained that Generative AI can independently perform tasks that traditionally require human input, such as writing code, generating feature specifications, and even creating designs. This emulation of human work means that AI can operate with humans only at the beginning or end of a process, vastly accelerating production cycles.

He pointed out that this shift leads to massive organizational disruption, as AI-driven processes can outpace traditional development cycles. This creates both challenges and opportunities: while some roles may become redundant, new ones will emerge, especially in AI-focused teams or in areas where human oversight is still essential. He highlighted that disruption caused by Generative AI is not just about replacing jobs but also about opening new pathways within companies, such as roles that involve managing or leveraging AI capabilities effectively.

In this environment, disruption becomes a catalyst for career growth, offering new positions and opportunities for those willing to adapt and learn. He encouraged professionals to view these disruptions as chances to advance rather than threats, emphasizing that embracing AI could lead to new roles, promotions, and increased job security.

Manual and Exploratory Testing in the Age of AI

Jason discussed the evolving role of manual and exploratory testing as AI technologies become more integrated into the testing landscape.

- From Routine Execution to AI Management: Manual testers, who traditionally focused on executing test cases and exploratory testing, will increasingly take on the role of managing AI bots. AI will handle many basic testing tasks, such as checking functionalities and identifying issues, which will free up testers to focus on more complex tasks.

- Review and Validation: Testers will need to review and validate the AI’s findings. While AI can perform extensive testing across various dimensions (functional, performance, accessibility), human oversight is crucial to ensure accuracy and address any nuances that AI might miss.

- Deep Testing: With routine tasks automated by AI, testers will have more time to engage in deep, critical testing. This involves not just validating outputs but understanding and interpreting them in the context of the application.

- Broader Perspective: Testers will need to adopt a wider perspective, understanding how different types of testing (functional, performance, accessibility) interact and affect the overall quality of the application.

- Proving Value: Testers will need to demonstrate their value in ways that AI cannot. This means being visible in their contributions, showing how their insights and judgments add value beyond the capabilities of AI.

- Showcasing Skills: Testers should focus on being visible to management and stakeholders by proving their expertise and adding value that AI alone cannot deliver. This includes highlighting their ability to analyze AI results critically and providing insights that improve the overall quality of the software.

- AI as a Tool, Not a Replacement: AI will not replace testers but will act as a tool that performs initial tests. Testers will need to manage and refine AI outputs, providing feedback and making adjustments as necessary.

- Rating and Reviewing: Testers will function as “rating machines,” assessing the AI’s performance, validating its findings, and ensuring the accuracy of reported issues. This involves distinguishing between valid issues and false positives generated by the AI.

- Shift to Test Management: The role of testers will evolve from executing test cases to managing AI-driven testing processes. They will need to oversee AI operations, handle exceptions, and ensure that the AI is functioning as intended.
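The "rating and reviewing" role described above can be made concrete with a small tally of how often the AI's reported issues survive human review. The following is a minimal sketch, not a tool from the session: the `Finding` structure, its field names, and the sample data are all hypothetical and only illustrate the idea of separating valid issues from false positives.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One issue reported by an AI testing bot (hypothetical structure)."""
    issue_id: str
    category: str        # e.g. "functional", "performance", "accessibility"
    tester_verdict: str  # "valid" or "false_positive", assigned by a human reviewer


def review_summary(findings):
    """Count verdicts per category and compute the share of findings judged valid."""
    summary = {}
    for f in findings:
        stats = summary.setdefault(f.category, {"valid": 0, "false_positive": 0})
        stats[f.tester_verdict] += 1
    for stats in summary.values():
        total = stats["valid"] + stats["false_positive"]
        stats["precision"] = stats["valid"] / total
    return summary


# Made-up sample data for illustration only.
findings = [
    Finding("A-1", "functional", "valid"),
    Finding("A-2", "functional", "false_positive"),
    Finding("A-3", "accessibility", "valid"),
    Finding("A-4", "accessibility", "valid"),
]

for category, stats in review_summary(findings).items():
    print(category, stats)
```

A tester who tracks this kind of per-category precision over time has a simple, visible record of where the AI's findings can be trusted and where human review is still catching errors.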

As AI continues to advance, its role in testing is becoming increasingly crucial for enhancing efficiency and accuracy. AI test assistants such as KaneAI by TestMu AI exemplify this shift by offering an intelligent solution for test creation and management.

KaneAI is a GenAI smart test agent, guiding teams through the entire testing lifecycle. From creating and debugging tests to managing complex workflows, KaneAI uses natural language to simplify the process. It enables faster, more intuitive test automation, allowing teams to focus on delivering high-quality software with reduced manual effort.

With the rise of AI in testing, it’s crucial to stay competitive by upskilling or polishing your skill set. The KaneAI Certification proves your hands-on AI testing skills and positions you as a future-ready, high-value QA professional.

Automation Engineering and Management

Jason discussed the shifting landscape of automation engineering due to advancements in AI. He noted that basic coding skills are becoming less impressive because AI tools can now generate test scripts quickly in various languages and frameworks. As a result, there might be downward pressure on salaries for automation engineers, as coding proficiency alone no longer stands out.

He emphasized that organizations expect faster code fixes due to AI’s capability to handle bugs swiftly. Automation engineers are encouraged to use AI tools to improve the robustness of their tests, especially for flaky tests.

For management, Jason highlighted the need for a shift in focus. With AI reducing the need for large testing teams, test managers might face a reduced scope of traditional roles. Instead of expanding teams, managers will need to demonstrate how they are making testing processes more efficient, faster, and cost-effective.

Jason discussed how AI tools are capable of extensive testing and comparative analysis. Managers should leverage these capabilities to gain strategic insights and guide their testing strategies. AI can provide valuable data on performance and common issues, which managers can use to focus their efforts on critical areas and improve overall testing outcomes.

He suggested that test managers should align more closely with business objectives and show how their efforts contribute to organizational goals. With AI handling much of the testing and reporting, managers need to add value by interpreting AI-generated data and driving improvements in testing practices.

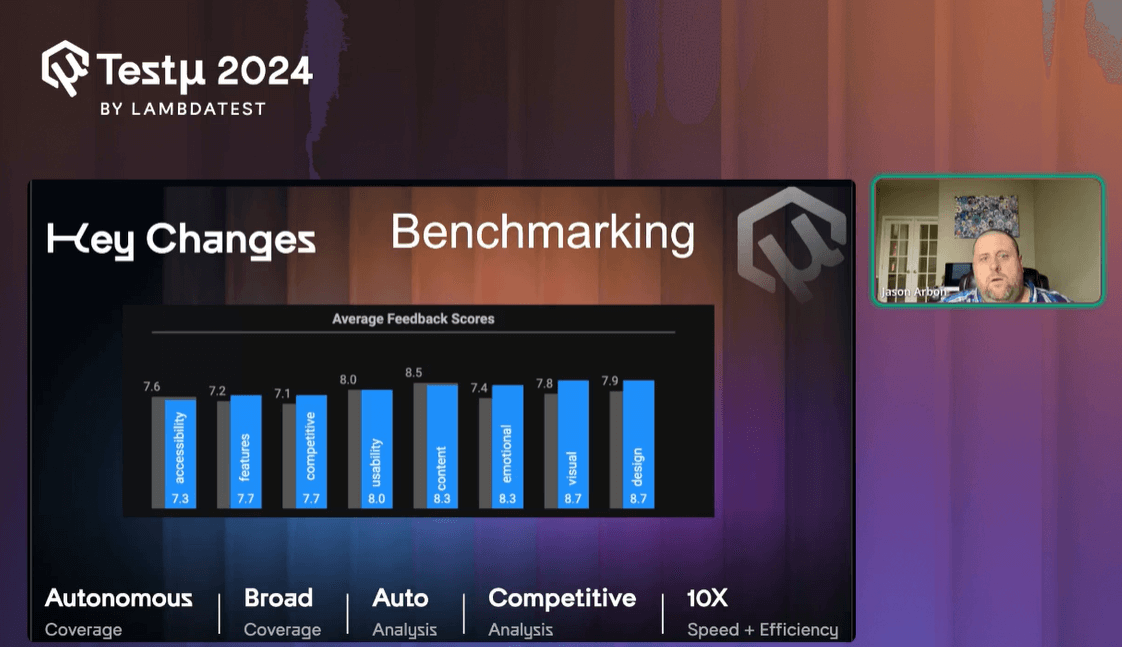

Benchmarking and AI in Testing

Jason discussed the importance of benchmarking in testing, particularly how it can provide valuable insights into your app’s performance compared to competitors. He emphasized that beyond knowing feature counts and user expectations, benchmarking helps evaluate the overall design and emotional connection users have with your app. This comparison with competitive and similar apps can reveal how well your app performs in terms of user experience and emotional impact.

He suggested that leveraging this data can significantly boost your standing within your company. By demonstrating superior app performance and quality through data-driven insights, you can position yourself as a leader and potentially enhance your career prospects. Jason advised moving quickly to adopt an AI-first approach in your career, as being early with AI tools can give you an edge and make you stand out.

Career Advice and AI Integration

Jason encouraged embracing AI in your career to maintain visibility and demonstrate leadership. He recommended following AI news and incorporating a balanced approach to time management: spending 70% on current tasks, 20% on near-future goals, and 10% on long-term innovations. This approach helps stay ahead of trends and showcases proactive leadership.
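As a quick worked example of the 70/20/10 split, the sketch below converts it into hours for a given working week. The 40-hour week and the function name `split_hours` are assumptions chosen for illustration; only the three percentages come from the session.

```python
def split_hours(weekly_hours, shares=(70, 20, 10)):
    """Split a working week into hours using percentage shares (70/20/10 by default)."""
    labels = ("current tasks", "near-future goals", "long-term innovations")
    return {label: weekly_hours * pct / 100 for label, pct in zip(labels, shares)}


# Assumed 40-hour week, for illustration.
print(split_hours(40))
```

For a 40-hour week this works out to 28 hours on current tasks, 8 hours on near-future goals, and 4 hours on long-term innovations.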

For those promoting their AI testing skills to potential employers, Jason highlighted the importance of a strong LinkedIn presence. Certifications and showcasing learning progress can be beneficial, while openly dismissing AI might hinder job prospects.

Jason also advised that testing Large Language Models (LLMs) can be valuable, but it’s more practical to focus on how these models are applied within your applications rather than testing the models themselves. Additionally, he discussed the possibility of future labels indicating AI involvement in testing, but he believes that AI’s role will become pervasive and necessary rather than optional.

Finally, he suggested that the effectiveness of AI tools should be measured using traditional metrics such as time to release and issue rates. Conducting A/B tests with AI adoption can help determine its real impact on efficiency and quality. He cautioned against relying solely on marketing claims of AI tools and emphasized the need for practical, measurable results.
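One way to picture such an A/B comparison is to track the same traditional metrics for releases shipped with and without the AI tool, then compare the averages. The sketch below is a rough illustration under stated assumptions: the metric names `days_to_release` and `issues_per_release`, the function `compare_groups`, and all numbers are hypothetical, not data from the session.

```python
from statistics import mean


def compare_groups(control, treatment):
    """Compare average metrics for releases without (control) and with (treatment) the AI tool."""
    report = {}
    for metric in ("days_to_release", "issues_per_release"):
        base = mean(r[metric] for r in control)
        new = mean(r[metric] for r in treatment)
        report[metric] = {
            "control": base,
            "treatment": new,
            # Negative values mean the metric went down with the AI tool.
            "change_pct": (new - base) / base * 100,
        }
    return report


# Made-up illustrative numbers, not real measurements.
control = [
    {"days_to_release": 10, "issues_per_release": 8},
    {"days_to_release": 14, "issues_per_release": 6},
]
treatment = [
    {"days_to_release": 9, "issues_per_release": 7},
    {"days_to_release": 9, "issues_per_release": 7},
]

report = compare_groups(control, treatment)
for metric, values in report.items():
    print(metric, values)
```

With only a handful of releases per group, a difference in averages can easily be noise, so a real evaluation would need more data points and ideally a significance test before crediting the tool.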

Q&A Session!

Here are some of the questions that Jason answered at the end of the session:

- What strategies should testers use to promote their AI testing skills to potential employers?

  Jason: To stand out, flaunt your AI testing certifications and progress on LinkedIn. Just steer clear of publicly bashing AI, as that could backfire. Show that you’re learning and staying up-to-date with AI trends.

- Don’t you think any kind of testing (even asking silly questions) is good for exploring the limitations of an LLM?

  Jason: Playing around with quirky questions can uncover some issues, but it’s more practical to focus on how LLMs fit into your specific applications. Leave the deep dives into LLM limitations to the developers working directly on them.

- Do you think that in the future we will have a label that says “it was tested by AI,” similar to “generated by AI”? Human work will be more expensive, so could businesses choose something in the middle?

  Jason: I doubt we’ll see a label like “tested by AI” anytime soon. AI will be everywhere, and its tools will likely make things better. The key is whether AI is genuinely adding value, not whether it gets its own label.

- What are the key indicators that an AI tool is enhancing your testing efficiency and supporting your career growth?

If you have more questions, please feel free to drop them off at the TestMu AI Community.
