My Crafting Project Became Critical Infrastructure [Testμ 2023]

Learn from Elizabeth Zagroba's journey of turning a simple Python script into a game-changing solution for efficient deployment and testing workflows.

TestMu AI

January 27, 2026

Driven to madness by the normal workflow for testing her application, Elizabeth Zagroba wrote a small Python script in a couple of days. In this session, she told the story of how that simple script became a game-changer for her team. Chapter by chapter, she shared how it evolved from solving her own problem to becoming a vital part of the team's release process. Along the way, she shed light on how good collaboration takes time and energy, and how something small built for one use can grow into something bigger with many uses.

About the Speaker

Elizabeth Zagroba is the Quality Lead at Mendix. She is an exploratory tester with an “extraordinary power of observation and categorization.” She currently serves as a co-organizer for the Friends of Good Software Conference (FroGS Conf) and is a program committee member for Agile Testing Days.

If you couldn’t catch all the sessions live, don’t worry! You can access the recordings at your convenience by visiting the TestMu AI YouTube Channel.

Chapter 1: How It All Started?

In 2019, Elizabeth was a software tester on a team building a data hub catalog application. Her primary responsibility was to ensure the quality of the application through various testing approaches.

Her process involved running the application on her local machine. During this testing phase, she had two goals: running automated tests to assess the application’s functionality, and conducting exploratory testing by manually interacting with the application to identify potential issues.

The actual process involved using virtualization technology. Elizabeth used a tool called “Parallels” to create a virtualized Windows environment (VM) on her macOS computer. Within this VM, she would open the Integrated Development Environment (IDE) to run the application. This approach allowed her to test the application in an environment that closely resembled the intended production environment.

Chapter 2: Encountering Hurdles While Running the App

While running the application locally on her machine, Elizabeth discovered that the VM that she was using to run the application started experiencing problems. When she attempted to run the application within the VM, it would crash, leading to a series of crashes that affected both the VM and her macOS system. This situation made it impossible for her to effectively test the application locally, which was a significant impediment to her testing tasks.

However, her team had a hosted cloud solution where they could build and deploy packages for testing purposes. This solution provided a test environment where Elizabeth could deploy the application package and perform tests without running it locally. The process involved utilizing publicly documented build and deploy APIs through their cloud UI web application.

The challenge with the cloud UI approach was that each step of the process required waiting for a loading spinner on the page. Deploying the application took about eight minutes if every step was followed diligently and immediately. In practice, Elizabeth found it hard to keep track of these waiting periods. She mentioned that she often became distracted by checking her emails, reading messages, or browsing social media. As a result, she lost valuable time and productivity while waiting for the loading spinners to finish, which made her testing workflow inefficient.

This not only hampered her ability to carry out her testing responsibilities but also caused frustration. It was a critical issue because being able to run the application was a crucial step in testing it, ensuring its quality, and identifying potential issues before release.

Chapter 3: Development of Python Script to Overcome the Hurdles

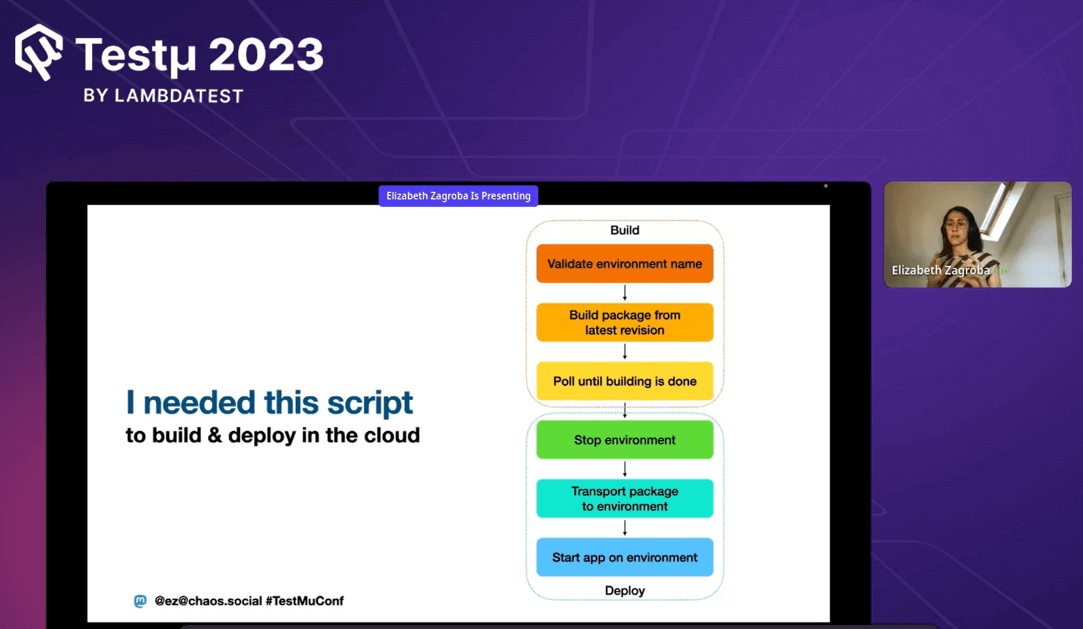

Elizabeth developed a Python script that automated the deployment process of the “data hub catalog application.” This script allowed her to bypass the issues with her virtualized Windows environment (VM) and significantly streamlined the testing workflow. Here’s how the solution worked:

- Python Script Development: Elizabeth decided to write a Python script that would call the company’s publicly documented build and deploy APIs for their cloud solution. These APIs allowed her to create and deploy a package to a hosted environment without relying on the problematic VM setup.

- Automated Deployment: The Python script would automate the entire deployment process, from building the package to deploying it to the cloud environment. It would interact with the APIs to perform the necessary tasks, reducing manual intervention and eliminating the need to watch loading spinners or wait for manual steps to complete.

- Command Line Interface: Elizabeth designed the script to be run from the command line, which provided a simple and efficient way to trigger the deployment process. This allowed her to execute the script with a single command, making it easy to use and incorporate into her testing workflow.
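The three points above can be sketched in a few lines of Python. This is a minimal illustration, not Elizabeth's actual script: the talk does not show the API's endpoints or payloads, so `FakeCloudApi`, its method names, the status strings, and the `--branch` and `--environment` flags are all assumptions made for the example. A real version would replace the fake class with HTTP calls to the documented build and deploy APIs.

```python
import argparse
import time
from typing import Callable, Dict, Optional, Sequence


class FakeCloudApi:
    """In-memory stand-in for the build and deploy APIs (hypothetical)."""

    def __init__(self, polls_until_done: int = 2) -> None:
        self._polls_until_done = polls_until_done
        self._remaining: Dict[str, int] = {}

    def _start(self, job_id: str) -> str:
        self._remaining[job_id] = self._polls_until_done
        return job_id

    def start_build(self, branch: str) -> str:
        return self._start(f"build-{branch}")

    def start_deploy(self, package_id: str, environment: str) -> str:
        return self._start(f"deploy-{package_id}-to-{environment}")

    def status(self, job_id: str) -> str:
        # Report "running" a fixed number of times, then "succeeded".
        if self._remaining[job_id] > 0:
            self._remaining[job_id] -= 1
            return "running"
        return "succeeded"


def wait_until_done(
    get_status: Callable[[], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 900.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the job succeeds or fails, so nobody watches a spinner."""
    waited = 0.0
    while True:
        status = get_status()
        if status in ("succeeded", "failed"):
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"job still '{status}' after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


def build_and_deploy(api, branch: str, environment: str,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Build a package, wait for it, then deploy it and wait again."""
    build_id = api.start_build(branch)
    if wait_until_done(lambda: api.status(build_id), sleep=sleep) != "succeeded":
        raise RuntimeError(f"{build_id} failed")
    deploy_id = api.start_deploy(build_id, environment)
    if wait_until_done(lambda: api.status(deploy_id), sleep=sleep) != "succeeded":
        raise RuntimeError(f"{deploy_id} failed")
    return deploy_id


def parse_args(argv: Optional[Sequence[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Build and deploy a package")
    parser.add_argument("--branch", default="main")
    parser.add_argument("--environment", default="test")
    return parser.parse_args(argv)


def main(argv: Optional[Sequence[str]] = None) -> None:
    args = parse_args(argv)
    # The fake API and no-op sleep keep this sketch runnable offline.
    job = build_and_deploy(FakeCloudApi(), args.branch, args.environment,
                           sleep=lambda _: None)
    print(f"Finished {job}")


if __name__ == "__main__":
    main()
```

Saved under a file name of your choice, the sketch runs with a single command such as `python deploy.py --branch main --environment test`. Passing the `sleep` function in as a parameter is a design choice for the example: it lets the polling loop be exercised instantly in tests instead of waiting in real time.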

Chapter 4: Evolution of a Simple Python Script to a Valuable Solution

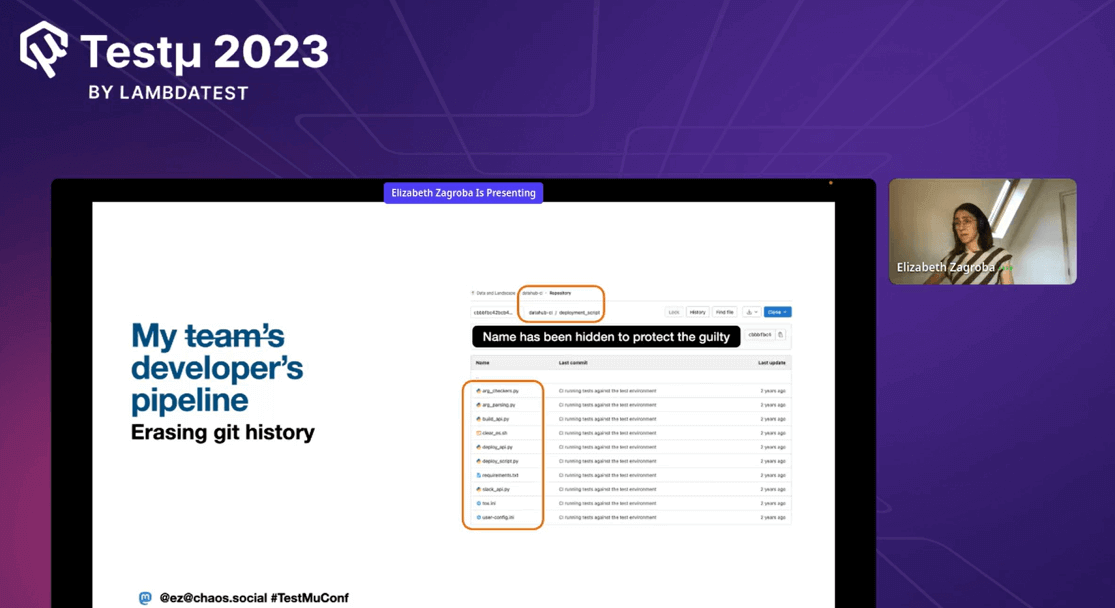

Elizabeth’s journey began with a background in editing existing code rather than creating new repositories. Wanting to build a clean and readable codebase, she started the project during the company’s “crafting days.” Her goal was to automate deployment of the data hub catalog application and speed up automated testing.

Once the script was ready, Elizabeth shared it with other teams, expecting it to be valuable for them. However, her developers didn’t initially find it relevant because they didn’t use the test environment.

To complicate matters, corporate requirements changed, making it necessary to integrate security scans. The scans were aimed at identifying vulnerabilities and third-party library issues, using tools like Snyk and Veracode. A developer from her team incorporated the script into a GitLab pipeline that automated security checks, tests, and deployment. To do so, the developer reused the function from Elizabeth’s Python script that builds packages. The team then realized they could also run the tests in the pipeline, something Elizabeth had requested frequently.
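The idea of reusing the script's build function as the first stage of a pipeline can be illustrated with a short Python sketch. It is only an analogy: a real GitLab pipeline is defined in YAML, and the talk does not show the team's configuration. The function names, the stage names, and the stop-at-first-failure behavior are assumptions made for the example.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a step that returns True when it passes.
Stage = Tuple[str, Callable[[], bool]]


def build_package() -> bool:
    """Placeholder for the build function reused from the deployment script."""
    return True


def run_stages(stages: List[Stage]) -> List[Tuple[str, bool]]:
    """Run stages in order and stop at the first one that fails."""
    results: List[Tuple[str, bool]] = []
    for name, step in stages:
        passed = step()
        results.append((name, passed))
        if not passed:
            break  # later stages, including deploy, never run
    return results


if __name__ == "__main__":
    pipeline: List[Stage] = [
        ("build", build_package),
        ("security-scan", lambda: True),  # stand-in for the scanning tools
        ("test", lambda: True),           # stand-in for the automated tests
        ("deploy", lambda: True),         # stand-in for the deploy step
    ]
    for name, passed in run_stages(pipeline):
        print(f"{name}: {'passed' if passed else 'failed'}")
```

Because the build step is an ordinary function, the same code can serve both the command-line script and the pipeline, which is the kind of reuse the paragraph above describes.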

While other teams considered building new apps for similar functionalities, Elizabeth’s script emerged as a lightweight alternative. However, impatience led one developer to make extensive changes that disrupted the script’s functionality, emphasizing the need to maintain code quality.

While all this was happening, Elizabeth’s role transitioned from a manager to a quality lead, providing her more time to address the script’s evolution. She had to decide whether to integrate the impatient developer’s changes, considering the script’s intended purpose and boundaries.

Understanding the critical role of testing, Elizabeth wrote comprehensive tests to ensure the script’s stability. She recognized that maintaining code quality through testing was essential as the script became integral to release processes. At the same time, she declined to merge features that deviated from the script’s primary purpose. Her script grew from a personal project into a solution that multiple teams embraced to solve their own challenges.
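As an illustration of the kind of test that keeps such a script stable, here is a minimal sketch using Python's built-in `unittest` and `unittest.mock`. The `release` helper and its behavior are hypothetical and are not taken from Elizabeth's repository. The point is only that small functions with injected dependencies can be tested without touching a real environment.

```python
import unittest
from unittest.mock import Mock


def release(build, deploy):
    """Deploy only when the build step produced a package (hypothetical helper)."""
    package = build()
    if package is None:
        raise RuntimeError("build produced no package")
    return deploy(package)


class ReleaseTests(unittest.TestCase):
    def test_deploys_the_built_package(self):
        build = Mock(return_value="package-1.0")
        deploy = Mock(return_value="deployed")

        self.assertEqual(release(build, deploy), "deployed")
        deploy.assert_called_once_with("package-1.0")

    def test_failed_build_never_deploys(self):
        build = Mock(return_value=None)
        deploy = Mock()

        with self.assertRaises(RuntimeError):
            release(build, deploy)
        deploy.assert_not_called()
```

Saved in a test file, these can be run with `python -m unittest`. The second test guards the behavior that matters most for a release tool: a broken build must never reach the deploy step.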

Chapter 5: Realizing the Benefits of the Python Script

The Python script that Elizabeth developed became a game-changer by solving her immediate problem of deploying the application reliably and efficiently. Some of the benefits that she and her team realized were:

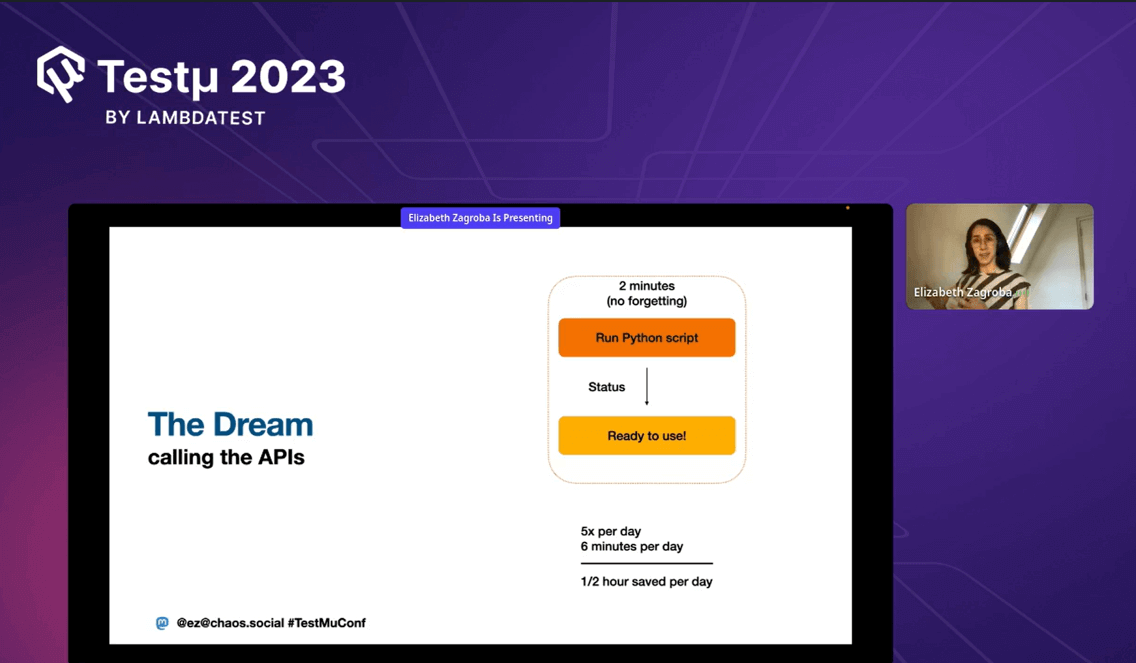

- Time Savings: The Python script drastically reduced the deployment time compared to using the company’s cloud UI. Instead of waiting on loading spinners, Elizabeth could trigger the deployment process and continue her work without interruptions. This resulted in significant time savings, estimated at around 30 minutes daily.

- Efficiency: By automating the deployment process, the script improved efficiency and eliminated the need for manual steps. Elizabeth no longer had to watch loading spinners or switch between different tasks while waiting for the deployment to complete.

- Consistency in Deployment: The script ensured consistency in the deployment process. Regardless of who was running the deployment, the script would execute the same steps each time, reducing the risk of human error and ensuring a reliable deployment process.

- Stress Reduction: The frustration of dealing with crashing VMs and waiting for manual processes was eliminated. The script reduced stress by providing a more reliable and predictable way to deploy the application.

- Improved Productivity: With the script handling the deployment process, Elizabeth could focus more on her testing tasks and explore the application for issues and improvements. This enhanced her effectiveness as a tester.

- Ability to Scale: As other teams within the company faced similar challenges with deployment, Elizabeth’s script became a valuable solution that other teams adopted. This showcased the scalability and usefulness of her initial solution.

Chapter 6: A Journey Towards Improvement Starts

As the script continued to expand and gain significance, Elizabeth recognized the need to make it sustainable. Instead of receiving last-minute requests for changes that were difficult to comprehend, she sought a more collaborative approach. She decided to engage with colleagues from the outset to grasp the underlying problems together and embark on a shared journey to solve them.

With renewed energy and time, Elizabeth began asking pertinent questions to better understand proposed changes, goals, and existing code within the repository. Throughout the summer and into the fall of 2022, she made substantial progress in enhancing the repository, including adding tests for various script functionalities. Though a few tasks remain on her list, the outcomes are evident: prompt response to script failures and the ability to effectively guide contributors in proposing changes.

Concluding the session, Elizabeth recalled her journey from writing the initial Python script and sharing it with her team to accepting a crucial merge request from an impatient developer. She mentioned that, after this incident, she took on roles beyond what she had originally intended. Her story highlighted the value of adaptability and the unexpected growth that can arise from collaborative development efforts.

Time for Some Q&A

- Before your crafting project turned into critical infrastructure, how well did you plan and execute it, and what impact and professional challenges came with it?

Elizabeth: Before crafting projects became crucial for me and my team’s work, the planning and execution were about making something useful and showing it to others. The team was supposed to share what we created, but not everyone did.

As for the impact and challenges, while I couldn’t point to a specific problem solved by a crafting project, sharing those projects made more people recognize and know about my work. Talking about those projects and sharing my code made others aware of what I did in the company.

- Can you shed more light on how you manage deadlines for smaller parts or modules of your project in your overall journey?

Elizabeth: I didn’t manage strict deadlines for different parts of the project. It was more about working on it when I had time. Sometimes I got interrupted by urgent tasks. I kept the project out of my main work-tracking tool so that I could work comfortably without too much pressure. This approach helped me work on the project over a long period without rushing. There might be more structure in the future, but as of now, I enjoy the flexibility.

- What role did writing tests play in reshaping and refactoring the code base?

Elizabeth: Writing tests played a big role in improving the code and in my motivation to maintain the project. Tests allow me to make changes and stay confident that they work. Testing is crucial; don’t skip it unless you enjoy doing many manual checks. Writing tests forced me to organize the code better, breaking it into smaller pieces. This project likely saved me and my team a lot of time, potentially cutting down on work hours each week. The time saved could translate to significant cost reduction, although I haven’t measured it precisely.

Got more questions? Drop them on the TestMu AI Community.
