‘AI’levating Tester Experience [Testμ 2024]

In this session, Rituparna Ghosh, Vice President & Head of Quality Engineering & Testing at Wipro, explores how GenAI transforms software development and its impact on software testers.

TestMu AI

January 30, 2026

Organizations are buzzing with excitement over the innovative potential of Generative AI, especially in IT. Software development is a prime area for starting with GenAI, and tools like GitHub Copilot can really shake up and streamline code generation. This boost in coding speed will push testers into a more crucial role.

With disruptive tech like GenAI leaving little room for error, testers need to be more productive and effective than ever. So, how do we achieve that? By enhancing the tester experience! Improving this aspect can be key to successfully implementing these new technologies.

If you couldn’t catch all the sessions live, don’t worry! You can access the recordings at your convenience by visiting the TestMu AI YouTube Channel.

Redefining Tester Experience and Role of GenAI

Historically, the focus in technology has been on User Experience (UX) and Developer Experience (DevX). However, Ritu brought to light the often-overlooked aspect of Tester Experience (TX).

She stressed that just as UX and DevX are crucial for users and developers, TX should also be a priority. Making testers’ roles more efficient is vital for maintaining high standards in software quality.

GenAI is emerging as a transformative force in testing, not as a replacement for human testers but as an enhancement tool. Ritu explained that while GenAI can automate and accelerate various aspects of testing, it is meant to complement human skills rather than replace them. The focus should be on how AI can aid testers in their work, making their tasks more manageable and productive.

Effects of Generative AI on Testing Practices

Ritu explored the profound effects of Generative AI (GenAI) on testing practices. Her insights offer a detailed look at how GenAI is reshaping the field and what this means for testers.

Rituparna discusses the future of Quality Assurance with Generative AI, emphasizing that testing is a necessity, and AI is the key to enhancing it. pic.twitter.com/O7prWaj0yJ (LambdaTest, @testmuai, August 22, 2024)

Here’s a breakdown of the key effects Ritu highlighted:

- Enhanced Efficiency and Automation: Generative AI is revolutionizing testing by automating various aspects of the testing process. Ritu emphasized that GenAI tools can dramatically reduce the effort required for testing tasks. For example, test case creation and execution, which traditionally might take days, can now be completed more swiftly with the help of AI. This increased efficiency is leading to higher levels of automation, which in turn accelerates the overall testing cycle.

- Augmentation Rather Than Replacement: A critical point Ritu made is that GenAI is designed to augment rather than replace human testers. While AI tools can handle repetitive and mundane tasks, they are not a substitute for human judgment and expertise. Testers are still essential for interpreting results, addressing complex issues, and ensuring that AI-generated outputs meet the necessary quality standards. The role of testers is evolving to focus more on strategic oversight and less on routine tasks.

- Addressing Bias and Hallucination: Ritu discussed the challenges associated with AI bias and hallucination. Generative AI models can sometimes produce biased or incorrect outputs based on the data they were trained on. For instance, early AI models have shown biases in certain applications, such as resume screening, where they favored certain demographics over others. Ritu stressed the importance of rigorous training and validation to mitigate these issues and ensure that AI tools provide fair and accurate results.

- Evolution of Testing Roles: As GenAI tools become more prevalent, new roles and responsibilities within testing are emerging. Ritu highlighted that testers will need to adapt to these changes by developing new skills and approaches. Roles such as context curators and prompt engineers are becoming increasingly relevant. These professionals will focus on optimizing the use of GenAI, ensuring that it is used effectively within different testing contexts and for various personas.

- Increasing Complexity in Testing Strategies: The integration of GenAI into testing practices necessitates more sophisticated testing strategies. Ritu noted that with the proliferation of AI-generated code and solutions, testers will need to create and implement more comprehensive strategies to evaluate these tools effectively. This includes adapting to new models, understanding their limitations, and ensuring thorough testing coverage.

- Implications for Code Generation: GenAI’s impact on code generation is significant. Ritu pointed out that while AI can generate code, it often requires extensive modification and contextualization. The initial output from AI tools may be boilerplate code that needs to be tailored to fit specific requirements. Testers play a crucial role in validating and refining this code to ensure it functions correctly in the intended environment.

- Transforming Testing Workflows: Generative AI is set to transform traditional testing workflows. Ritu discussed how AI can automate supplementary tasks that often consume a significant portion of a tester’s time. For instance, resolving issues with CI/CD pipelines or integrating new testing tools can be streamlined through AI automation. This shift will allow testers to focus more on core activities such as designing test cases and strategizing test coverage.

- Business Impact and Faster Releases: The adoption of GenAI in testing can have a substantial impact on business operations. According to Ritu, the enhanced efficiency and automation provided by AI can lead to faster release cycles. Businesses can either accelerate their release schedules or include more features in each release. This flexibility can provide a competitive edge and improve overall product quality.

How GenAI Transforms the Global Landscape

Ritu explored the profound impact of GenAI on the modern workspace. One of the key changes is the emergence of new work environments powered by GenAI. This includes enhanced collaboration through advanced tools and platforms that streamline communication and project management.

Generative AI will soon create personalized workspaces, enhancing existing roles and introducing new ones like context curators and prompt engineers. We’re on the brink of moving from Horizon 1 to Horizon 2, possibly within 2 years! pic.twitter.com/fF49DXflnt (LambdaTest, @testmuai, August 22, 2024)

Additionally, the rise of AI-driven virtual assistants will play a crucial role in handling routine tasks such as scheduling and administrative duties, thereby boosting overall workplace efficiency.

Ritu also emphasized how GenAI will augment existing roles rather than replace them. By integrating AI tools, professionals will see an enhancement in their skills and capabilities. For instance, AI can assist with complex data analysis and provide actionable insights, allowing employees to focus on strategic and creative tasks that add more value to their roles.

This shift will lead to increased productivity as routine and repetitive tasks are automated, enabling workers to use their time more effectively and make a greater impact in their areas of expertise.

Similarly, AI test assistants such as KaneAI by TestMu AI exemplify this transformation in the field of test automation.

KaneAI, a GenAI native QA Agent-as-a-Service, uses natural language to simplify the process, from creating and debugging tests to managing complex workflows. It enables faster, more intuitive test automation, allowing teams to focus on delivering high-quality software with reduced manual effort.

With the rise of AI in testing, it’s crucial to stay competitive by upskilling and polishing your skill set. The KaneAI Certification proves your hands-on AI testing skills and positions you as a future-ready, high-value QA professional.

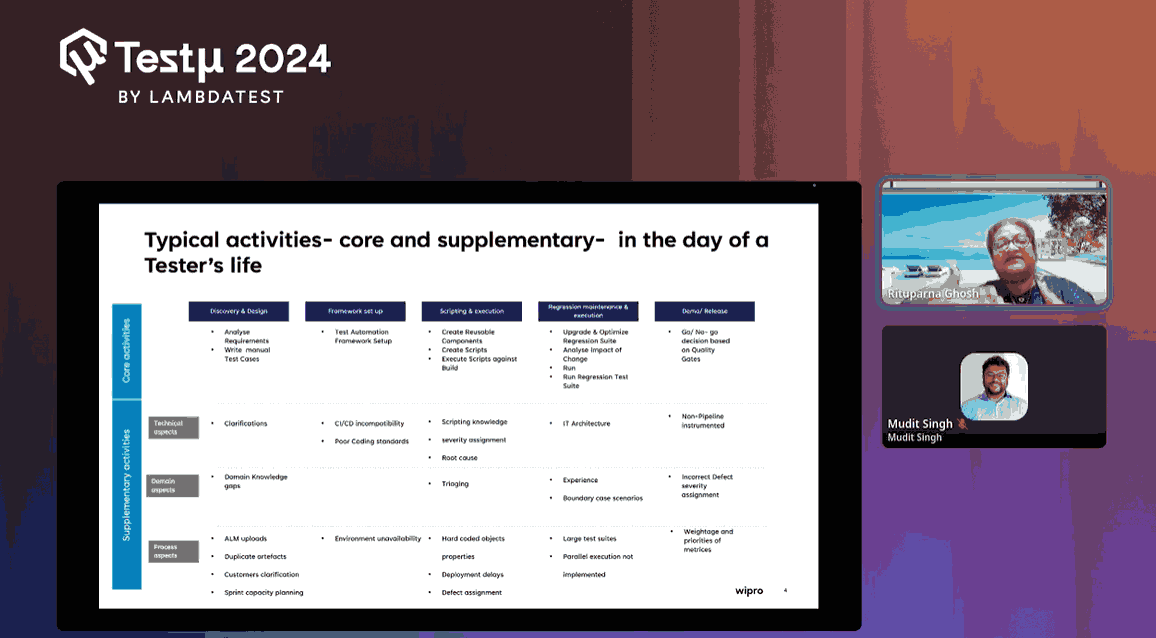

Core and Supplementary Activities of a Tester in a Day

Ritu then outlined the core activities in a tester’s day:

- Discovery and Design: This initial phase involves understanding the requirements and acceptance criteria of the project. Testers design test cases based on these criteria, aiming to cover various scenarios and ensure comprehensive testing.

- Framework Setup: Setting up the testing framework is crucial for effective test execution. This includes configuring the necessary tools, environments, and libraries to support automated and manual testing efforts.

- Scripting and Execution: Testers write and execute test scripts based on the designed test cases. This phase involves running tests, identifying issues, and logging defects. Efficient scripting and execution are essential for thorough and accurate testing.

- Regression Maintenance: Maintaining regression tests is vital for ensuring that new changes or features do not adversely affect existing functionality. Testers update and manage regression test suites to keep them relevant and effective.

- Demo/Release: This activity involves preparing for and participating in product demos or releases. Testers ensure that the software meets quality standards before it is presented to stakeholders or deployed to production.

Ritu explained that many supplementary tasks in testing, such as dealing with incompatible CI/CD engines or instrumenting pipelines, can be automated or augmented by GenAI. This will allow testers to focus more on core activities like test design and strategy, potentially reducing the effort required by 40-50% and improving automation throughput.

Why Are Testers Today Not Productive?

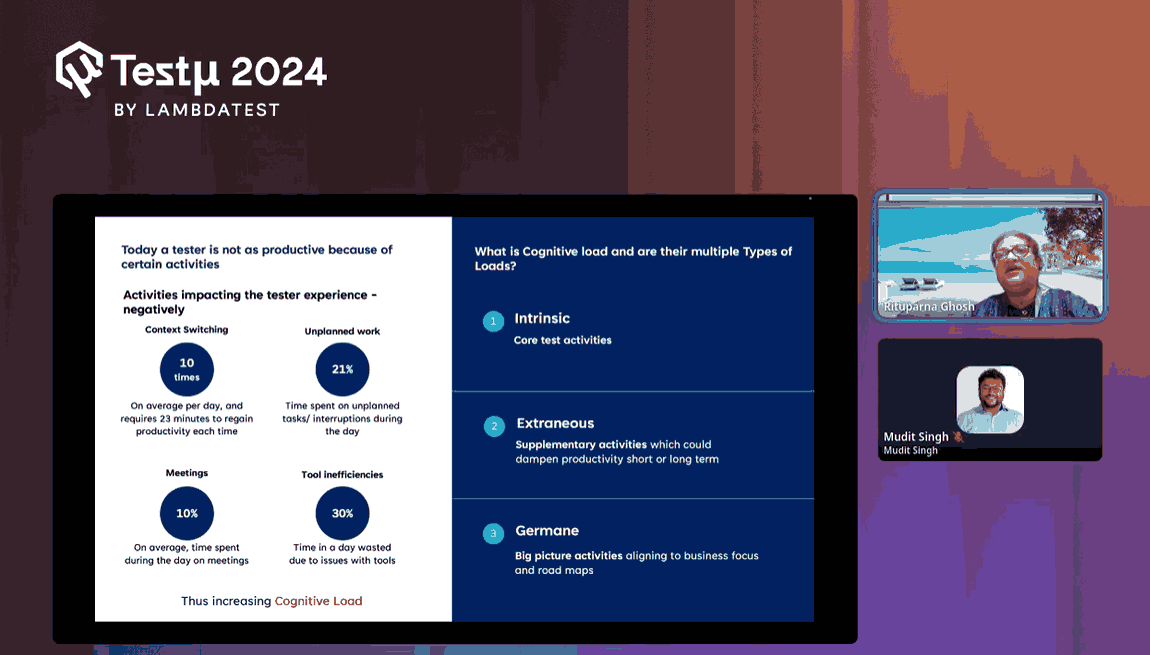

Ritu highlighted that several factors often hamper a tester’s productivity today:

- Context Switching: Testers frequently switch between different tasks, which disrupts focus and makes it hard to maintain momentum. This constant shifting between activities creates inefficiencies and reduces overall productivity.

- Unplanned Work: Testers are often pulled into unplanned tasks and emergencies that divert their attention from their primary responsibilities. This reactive approach leads to fragmented work schedules and incomplete tasks.

- Meetings: A significant portion of a tester’s day is consumed by meetings, many of which are not directly aligned with their core testing activities. These meetings interrupt workflow and reduce the time available for actual testing.

- Tool Inefficiencies: Inefficient tools and processes add to the workload. Testers often deal with outdated or cumbersome tools that slow down their testing processes, making it difficult to complete tasks efficiently.

These factors collectively contribute to a less productive work environment for testers, preventing them from focusing on high-value testing activities.

How GenAI Improves Tester Productivity

Ritu explained that during their testing process, they explored ways to drastically reduce the time required to complete a user story with well-defined acceptance criteria—from two days down to just two hours.

Initially, the focus was on web applications, yielding promising results. The team then applied the same approach to a Salesforce (SFDC) scenario to assess costs. This experiment, which was presented to a client where the solution is currently being implemented, provided insightful outcomes.

Through these experiments, Ritu’s team consistently observed three types of test cases:

- Common Test Cases: These are test cases identified by both human testers and the generative AI engine.

- Biased or Inaccurate Test Cases: These test cases vary in accuracy and are less reliable.

- Unique Test Cases: These are often overlooked by human testers but identified by the AI engine, enhancing overall test coverage.

Ritu noted that the accuracy of the AI-generated test cases varied between 70% and 90%, occasionally reaching as high as 93-94% when user stories and acceptance criteria were well-defined. In the SFDC use case, the AI generated 179 test cases, many of which had not been considered by human testers. What took human testers one sprint, or 10 days, to complete, the AI achieved in significantly less time.
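To make the three categories concrete, here is a minimal Python sketch of how a team might sort AI-generated test cases against a human-written set. It is an illustration only, not the method Ritu's team used: the names `partition_test_cases` and `accuracy` are hypothetical, test cases are reduced to plain title strings, and "accuracy" is assumed to mean the share of AI-generated cases that survive human review, which the session did not define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    common: frozenset[str]        # found by both human testers and the AI engine
    unique_to_ai: frozenset[str]  # valid AI cases the human testers missed
    rejected: frozenset[str]      # AI cases a reviewer flagged as biased or inaccurate


def partition_test_cases(
    human: set[str], ai: set[str], rejected_by_review: set[str]
) -> Partition:
    """Sort AI-generated test case titles into the three observed categories.

    Assumption: a human reviewer has already flagged the unreliable AI cases.
    """
    rejected = ai & rejected_by_review
    valid_ai = ai - rejected
    return Partition(
        common=frozenset(valid_ai & human),
        unique_to_ai=frozenset(valid_ai - human),
        rejected=frozenset(rejected),
    )


def accuracy(p: Partition) -> float:
    """Share of AI-generated cases that survived review (assumed definition)."""
    total = len(p.common) + len(p.unique_to_ai) + len(p.rejected)
    return (len(p.common) + len(p.unique_to_ai)) / total if total else 0.0
```

For example, calling `partition_test_cases` with the human and AI case titles for one user story, plus the titles a reviewer rejected, yields the common, unique, and rejected groups, and `accuracy` then reports the fraction of AI output that was kept.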

Productivity Challenges:

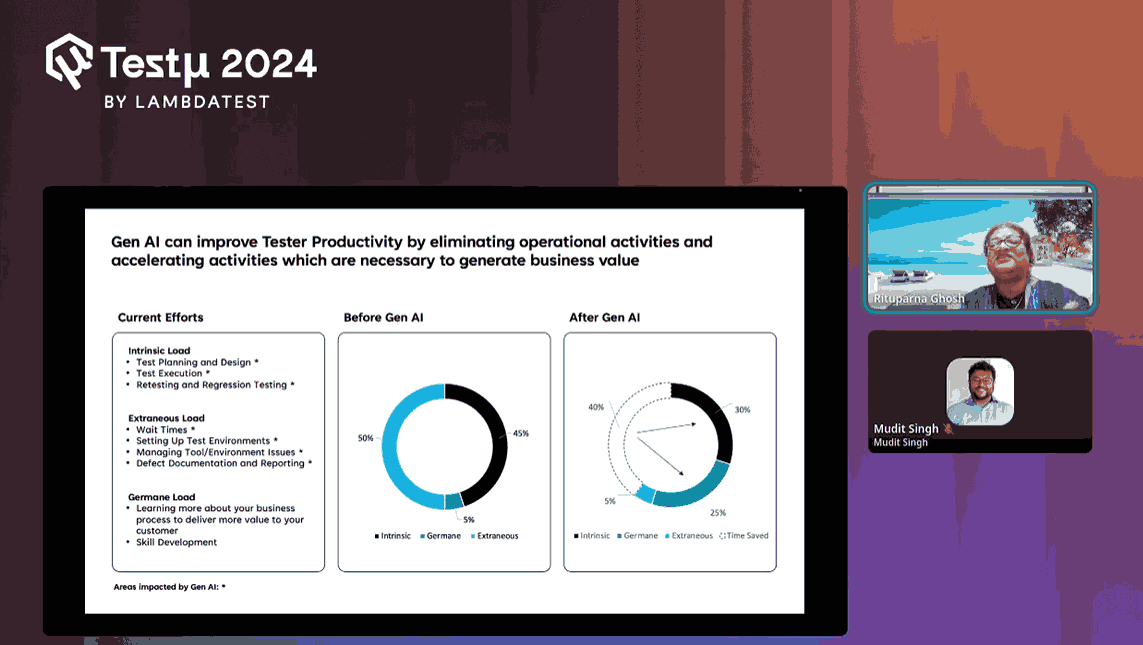

Ritu highlighted that testers’ productivity is often hindered by factors like context switching, unplanned work, meetings, and tool inefficiencies, all of which increase cognitive load. Cognitive load refers to the amount of information the working memory can handle at a given time and the effort required to process and retain that information. When cognitive load becomes excessive, it leads to fatigue, errors, and decreased productivity.

Ritu broke down cognitive load into three categories:

- Intrinsic Load: This relates to core test activities integral to the tester’s role. Mastering these tasks through practice builds muscle memory, making the tasks easier to perform.

- Extraneous Load: This involves supplementary activities that are not directly tied to core testing responsibilities, such as meetings and administrative tasks. Reducing this load with generative AI allows testers to focus on essential tasks.

- Germane Load: This load involves the effort put into learning and integrating new knowledge into long-term memory. It’s about gaining deeper insights into business processes or understanding the project at a strategic level.

According to Ritu, before implementing generative AI, testers typically spent about 45% of their time on intrinsic tasks, 50% on extraneous tasks, and only a small fraction on germane activities. After implementation, testers can better redirect their efforts towards honing skills and mastering their craft, enhancing their overall experience and productivity.
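As a rough illustration of what that pre-GenAI split means in practice, the sketch below converts the shares into hours per day. Two values are assumptions rather than figures from the session: the 8-hour workday, and the 5% germane share, which is simply the remainder after 45% and 50%. The function name `hours_by_load` is hypothetical.

```python
def hours_by_load(shares: dict[str, float], workday_hours: float = 8.0) -> dict[str, float]:
    """Convert cognitive-load shares (fractions summing to 1) into hours per day."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1.0")
    return {load: round(share * workday_hours, 2) for load, share in shares.items()}


# Shares before GenAI adoption. The germane value is an assumed remainder
# (100% - 45% - 50%); the session only called it "a small fraction".
BEFORE_GENAI = {"intrinsic": 0.45, "extraneous": 0.50, "germane": 0.05}

print(hours_by_load(BEFORE_GENAI))
# {'intrinsic': 3.6, 'extraneous': 4.0, 'germane': 0.4}
```

Under these assumptions, half of an 8-hour day goes to supplementary work and under half an hour to deeper learning, which is the imbalance GenAI is expected to shift.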

Ritu concluded by mentioning that Wipro and TestMu AI are collaborating to build an intelligent quality ecosystem. She encouraged the audience to reach out for more information on how these generative AI solutions can enhance testing processes and improve overall organizational performance.

If you have any questions, please feel free to post them in the TestMu AI Community.
